As a company that runs its own hardware and software divisions, Apple is known for the secretive way it operates.

But according to a report from Forbes, the iPhone maker does intercept emails and routinely checks them for illegal material, mostly related to child sexual abuse.

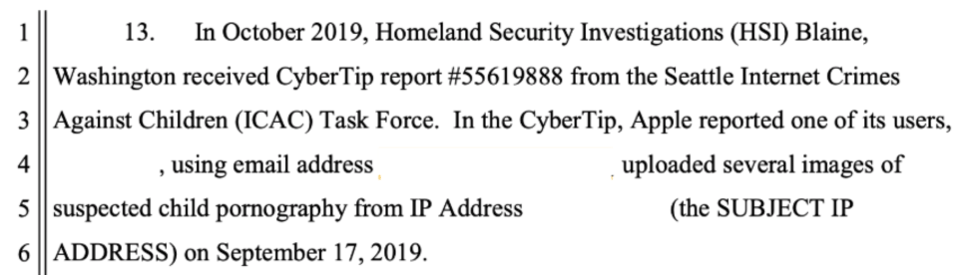

The search warrant obtained by the media company revealed that, despite its reputation for being uncooperative, Apple is still helpful in investigations.

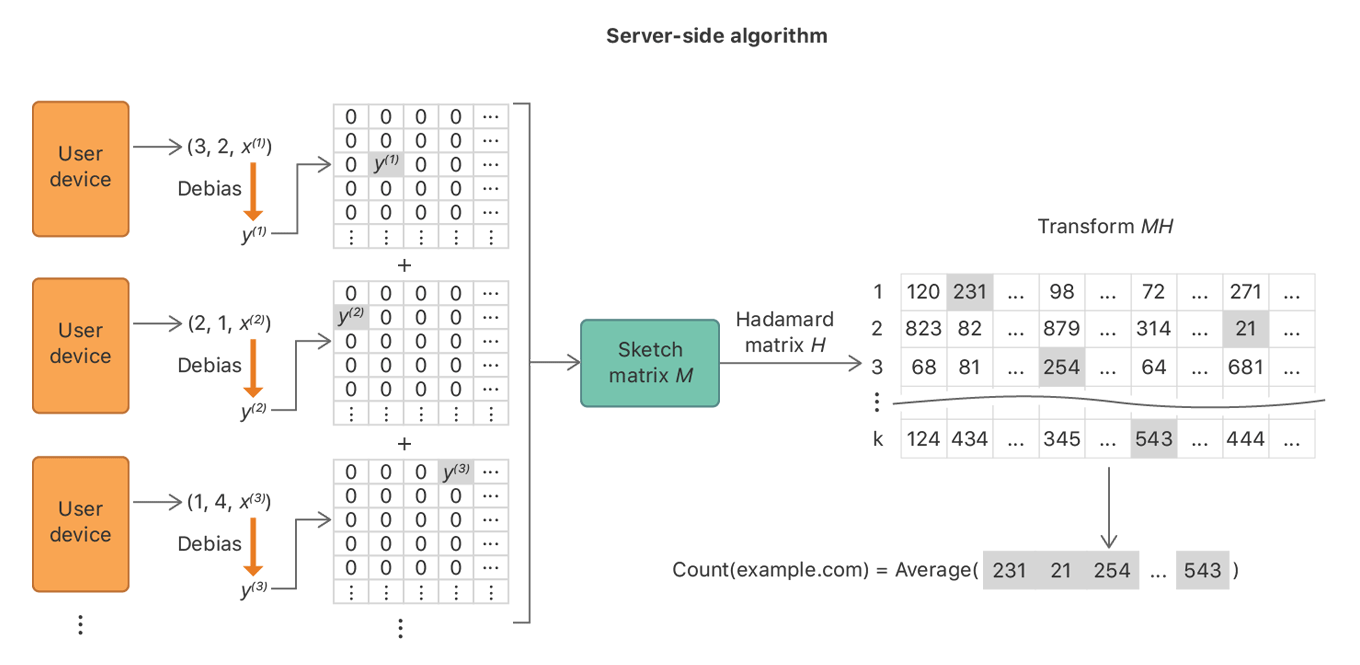

Apple intercepts emails and scans their contents using what the industry calls 'hashes'.

The same method is used by most other tech giants, such as Google and Facebook, to detect child abuse imagery.

Hashes serve as digital signatures computed from previously identified child abuse photos and videos. If a file matching any of those hashes passes through a company's servers, the operator of those servers is notified.
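
The matching step can be sketched in a few lines of Python. This is a simplified illustration, not Apple's actual system: it uses SHA-256 from Python's standard library as a stand-in signature, whereas production systems are generally understood to rely on perceptual hashes that tolerate resizing or re-encoding. The names `signature`, `known_signatures`, and `is_flagged`, as well as the sample byte strings, are hypothetical.

```python
import hashlib


def signature(data: bytes) -> str:
    """Return a hex signature for a file's bytes (SHA-256 as a stand-in)."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical database of signatures for previously identified files.
known_signatures = {
    signature(b"previously identified file A"),
    signature(b"previously identified file B"),
}


def is_flagged(attachment: bytes, known: set) -> bool:
    """True if the attachment's signature matches a known signature."""
    return signature(attachment) in known


print(is_flagged(b"previously identified file A", known_signatures))  # True
print(is_flagged(b"holiday photo", known_signatures))                 # False
```

Only signatures are compared here, so the scanning step itself never needs a person to open the file; that comes later, in the review stage.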

What this means is that, instead of relying on humans, major tech companies rely on their servers and algorithms to 'see' and 'read' their users' emails. But when the system finds a suspect email, the email or the file containing the potentially illegal images is quarantined for further inspection by employees.

Once a certain threshold has been met, the company contacts the relevant authority, typically the National Center for Missing and Exploited Children (NCMEC) in the U.S.

The nonprofit acts as the U.S. clearinghouse for reports of online child sexual exploitation, passing them on to law enforcement.

Often, this launches a criminal investigation into the suspect(s).

But in Apple’s case, the company is said to be more helpful in investigations.

First, after the system flags suspected child-abuse-related content in an email, it blocks that email from being sent. Following the system's warning, an employee looks into the content and analyzes any files present.
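
As a rough sketch of that flow, the Python below holds back any outgoing email whose attachment matches a known signature and places it in a queue for human review. Everything here is an assumption made for illustration: the `Email` class, `handle_outgoing`, the `review_queue` list, and the use of SHA-256 as the signature are hypothetical and are not drawn from Apple's systems.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List


@dataclass
class Email:
    sender: str
    recipient: str
    attachments: List[bytes] = field(default_factory=list)


def signature(data: bytes) -> str:
    """Hex signature of a file's bytes (SHA-256 as a stand-in)."""
    return hashlib.sha256(data).hexdigest()


def handle_outgoing(email: Email, known: set, review_queue: list) -> str:
    """Hold the email for human review if any attachment matches."""
    if any(signature(a) in known for a in email.attachments):
        review_queue.append(email)  # never reaches the recipient
        return "held"
    return "delivered"


# Hypothetical signature database and sample messages.
known = {signature(b"flagged-sample")}
review_queue = []

clean = Email("a@example.com", "b@example.com", [b"holiday photo"])
suspect = Email("a@example.com", "b@example.com", [b"flagged-sample"])

print(handle_outgoing(clean, known, review_queue))    # delivered
print(handle_outgoing(suspect, known, review_queue))  # held
print(len(review_queue))                              # 1
```

In this sketch, a held email stays in `review_queue` until a person examines it, mirroring the two-stage process of automated flagging followed by employee review.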

That’s according to a search warrant obtained by Forbes, which published an Apple employee’s comments on how they first detected “several images of suspected child pornography” being uploaded by an iCloud user and then looked at their emails.

“When we intercept the email with suspected images, they do not go to the intended recipient. This individual . . . sent eight emails that we intercepted. [Seven] of those emails contained 12 images. All seven emails and images were the same, as was the recipient email address. The other email contained 4 images which were different than the 12 previously mentioned. The intended recipient was the same,” the Apple employee’s comments read.

“I suspect what happened was he was sending these images to himself and when they didn’t deliver, he sent them again repeatedly. Either that or he got word from the recipient that they did not get delivered.”

The Apple employee then examined each of these images of suspected child pornography, according to the special agent at the Homeland Security Investigations unit.

According to Forbes, no charges have been filed against that user, and Forbes has chosen not to publish either his name or the warrant.

And second, Apple has an advantage here because it can link all of its users to iCloud.

The data that Apple can provide to the authorities when necessary includes the user's name, address, and the phone number the user submitted when they first signed up. Because a user's account is tied to iCloud, Apple can also provide the contents of the user’s emails, texts, instant messages and "all files and other records stored on iCloud."

As a company known for its secrecy, Apple has long been reluctant to show off or explain its methods.

Apple's use of hashes is nothing new, as other major tech companies do the same. The biggest advantage Apple has is its ability to tie users to their individual Apple accounts, which are in turn tied to iCloud.

"As part of this commitment, Apple uses image matching technology to help find and report child exploitation. Much like spam filters in email, our systems use electronic signatures to find suspected child exploitation. We validate each match with individual review," Apple states in a disclaimer on its website.

However, when it comes to privacy, things can be a little different.

No matter how much automation Apple uses, at the end of the line, humans are the ones doing the final check. As long as Apple employees are the only people looking into emails when abusive images are detected by its systems, and that information never leaks outside its headquarters, there shouldn’t be much of a privacy issue here.

"We develop a system architecture that enables learning at scale by leveraging local differential privacy, combined with existing privacy best practices," Apple wrote on its web page.

Apple is like all tech companies in that it has to balance its users' privacy with safety. And here, Apple is showing that it is doing a good job, for now.