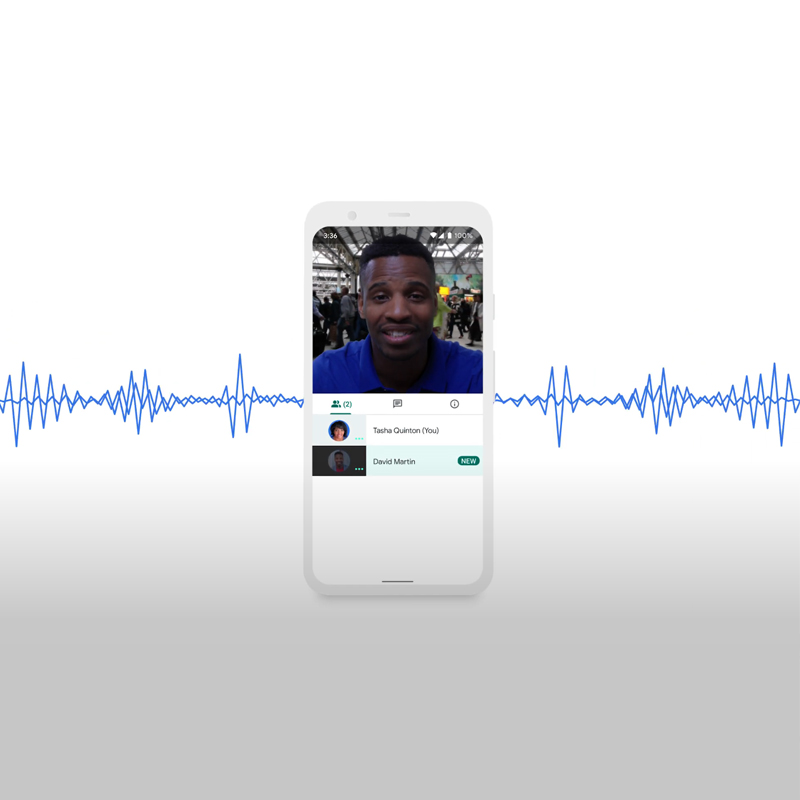

With more people relying on video conferencing tools for everything from work to school, Google is turning on AI-powered noise cancellation in Google Meet.

The feature relies on supervised learning, meaning its machine-learning model was trained on a labeled data set. Google is rolling the noise cancellation out gradually, starting with Google Meet on the web before launching it on Android and iOS.

It began back in April 2020, when Google announced that Meet users on G Suite Enterprise and G Suite Enterprise for Education would have a noise cancellation feature “to help limit interruptions to your meeting.”

Google built it because noise cancellation was one of the four most-requested Google Meet features.

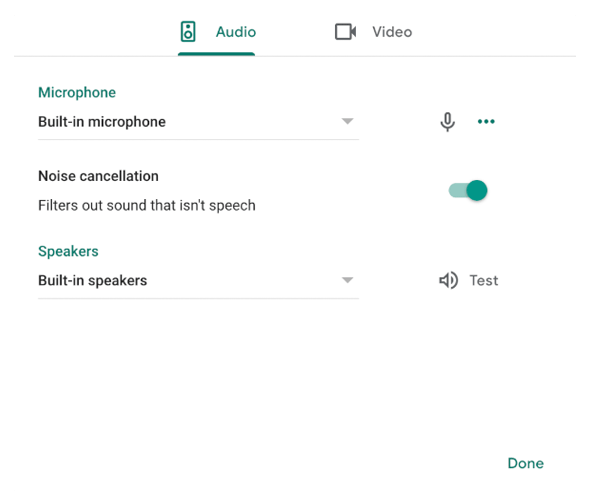

The feature, which the company calls the “denoiser,” is turned on by default, and users can turn it off in Google Meet’s settings.

The feature traces back to Google’s 2017 acquisition of Limes Audio. With the acquisition, “we got some amazing audio experts into our Stockholm office,” said Serge Lachapelle, G Suite director of product management.

The original idea for noise cancellation grew out of the annoyances users ran into while holding meetings across time zones.

“It started off as a project from our conference rooms,” Lachapelle said. “I’m based out of Stockholm. When we meet with the U.S., it’s usually around this time [morning in the U.S., evening in Europe]. You’ll hear a lot of cling, cling, cling and weird little noises of people eating their breakfast or eating their dinners or taking late meetings at home and kids screaming and all. It was really that that triggered off this project about a year and a half ago.”

To create this noise cancellation feature, the team at Google needed to build AI models and address latency.

“It had never been done,” Lachapelle said. “At first, we thought we would require hardware for this, dedicated machine learning hardware chips. It was a very small project. Like how we do things at Google is usually things start very small. I venture a guess to say this started in the fall of 2018. It probably took a month or two or three to build a compelling prototype.”

“And then you get the team excited around it,” he continued.

“Then you get your leadership excited around it. Then you get it funded to start exploring this more in depth. And then you start bringing it into a product phase. Since a lot of this has never been done, it can take a year to get things rolled out. We started rolling it out to the company more broadly, I would say around December, January. When people started working at home, at Google, the use of it increased a lot. And then we got a good confirmation that ‘Wow, we’ve got something here. Let’s go.’”

As with speech recognition, much of the work in training the noise cancellation model went into teaching the AI to tell what is speech and what is not.

The model has to distinguish speech from noise reliably enough to separate the two in real time and cancel what is unwanted.
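To make the idea of separating speech from noise in real time more concrete, here is a minimal sketch of frame-by-frame processing under stated assumptions. It is not Google's denoiser. The names `denoise_stream`, `split_frames`, and `toy_energy_detector` are hypothetical. The trained model is replaced by a stand-in `speech_prob` function that scores each short frame of audio, and that score becomes a gain applied to the frame. The toy detector simply treats loud frames as speech. A real denoiser works on finer time-frequency detail and can remove noise that overlaps with speech, which this sketch cannot.

```python
from typing import Callable, List

Frame = List[float]


def split_frames(signal: List[float], frame_len: int) -> List[Frame]:
    """Cut a stream of samples into consecutive frames (the last may be shorter)."""
    return [signal[i:i + frame_len] for i in range(0, len(signal), frame_len)]


def denoise_stream(
    signal: List[float],
    frame_len: int,
    speech_prob: Callable[[Frame], float],
    floor: float = 0.05,
) -> List[float]:
    """Process audio one frame at a time, as a real-time system must.

    `speech_prob` is a hypothetical stand-in for a trained model: it returns a
    value in [0, 1], where 1 means "speech" and 0 means "noise". That value is
    used as a gain, clamped so noise is attenuated to `floor` instead of being
    hard-muted.
    """
    out: List[float] = []
    for frame in split_frames(signal, frame_len):
        gain = max(floor, min(1.0, speech_prob(frame)))
        out.extend(gain * x for x in frame)
    return out


def toy_energy_detector(threshold: float) -> Callable[[Frame], float]:
    """Hypothetical stand-in for the model: frames above an energy threshold count as speech."""
    def detect(frame: Frame) -> float:
        energy = sum(x * x for x in frame) / len(frame)
        return 1.0 if energy >= threshold else 0.0
    return detect


if __name__ == "__main__":
    quiet_hum = [0.01] * 160  # one 10 ms frame at 16 kHz of low-level noise
    talking = [0.5] * 160     # one louder frame standing in for speech
    cleaned = denoise_stream(quiet_hum + talking, 160, toy_energy_detector(0.01))
    # The noise frame is scaled down by `floor`; the speech frame passes through unchanged.
    print(cleaned[0], cleaned[-1])
```

Processing in small frames is what keeps added delay low: the system only ever waits for one frame of audio before it can output a result.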

At first, the team used thousands of its own meetings to train the model, as well as audio from YouTube videos “wherever there’s a lot of people talking. So either groups in the same room or back and forth,” explained Lachapelle.

“The algorithm was trained using a mixed data set featuring noise and clean speech. Other Google employees, including from the Google Brain team and the Google Research team, also contributed, though not with audio from their meetings. The algorithm was not trained on internal recordings, but instead employees submitted feedback extensively about their experiences, which allowed the team to optimize. It is important to say that this project stands on the shoulders of giants. Speech recognition and enhancement has been heavily invested in at Google over the years and much of this work has been reused.”
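The quote mentions a “mixed data set featuring noise and clean speech.” One common way to build supervised training data of that kind (assumed here, not confirmed as Google's exact recipe) is to add a noise clip to a clean speech clip at a chosen signal-to-noise ratio (SNR). The noisy mix becomes the model's input and the clean clip becomes its label. The sketch below uses the usual definition SNR (dB) = 20 · log10(RMS of speech / RMS of noise). The function names `mix_at_snr` and `make_training_pairs` and the default 0 to 20 dB range are hypothetical choices for illustration.

```python
import math
import random
from typing import List, Sequence, Tuple

Pair = Tuple[List[float], List[float]]  # (noisy input, clean target)


def rms(signal: Sequence[float]) -> float:
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def mix_at_snr(clean: Sequence[float], noise: Sequence[float], snr_db: float) -> Pair:
    """Scale `noise` so the mix has the requested SNR relative to `clean`, then add it.

    Returns (noisy_input, clean_target): the labeled example a supervised
    denoiser would learn from.
    """
    if len(noise) < len(clean):
        raise ValueError("noise clip must be at least as long as the clean clip")
    noise = noise[:len(clean)]
    noise_rms = rms(noise)
    if noise_rms == 0:
        raise ValueError("noise clip is silent")
    # Solve SNR_dB = 20 * log10(rms(clean) / target_noise_rms) for target_noise_rms.
    target_noise_rms = rms(clean) / (10 ** (snr_db / 20))
    gain = target_noise_rms / noise_rms
    noisy = [c + gain * n for c, n in zip(clean, noise)]
    return noisy, list(clean)


def make_training_pairs(
    clean_clips: Sequence[Sequence[float]],
    noise_clips: Sequence[Sequence[float]],
    snr_range: Tuple[float, float] = (0.0, 20.0),
    seed: int = 0,
) -> List[Pair]:
    """Pair each clean clip with a random noise clip at a random SNR in `snr_range`."""
    rng = random.Random(seed)
    pairs: List[Pair] = []
    for clean in clean_clips:
        noise = rng.choice(noise_clips)
        snr = rng.uniform(*snr_range)
        pairs.append(mix_at_snr(clean, noise, snr))
    return pairs
```

Randomizing both the noise type and the SNR exposes the model to the same speech under many conditions, so it learns what to keep rather than memorizing one particular background.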

For what it’s worth, the AI is very good at removing noise, though it still has limitations.

For example, it works well at removing the sounds of doors slamming, dogs barking, kids fighting, applause, construction work, kitchen activity, vacuum cleaners, and objects moving on a table, such as the clicking of a pen.

But it struggles with certain other noises, and with some voices.

The human voice has such a wide range that it is almost impossible to train the AI on every possibility across all of its users. The denoiser may or may not cancel out muffled or indistinct voices, and it can have a hard time with the sound of people screaming.

The team hopes that the AI can get better as it learns from more data.

Beyond the user experience, there is another challenge: speed.

Google did consider running the model at the edge, that is, on users’ devices, such as in the Google Meet app for Android and iOS. But because that would have prevented Google from delivering a consistent experience across devices, it dropped the idea.

For this reason, Google decided to use the cloud. This way, users should have the exact same denoised meeting experience on every device.

This also keeps the processing as light as possible on clients. And because it happens in the cloud, users don’t have to update anything, not even the Google Meet app, to benefit from noise cancellation.

The drawback is latency.

Google has to send large amounts of audio to its servers, where the AI processes it before the result is sent back to users, and each of those steps adds delay.

To keep that delay down, the team focused its optimization work on speed.

“We can’t introduce features that slow things down. And so I would say that just optimizing the code so that it becomes as fast as possible is probably more than half of the work. More than creating the model, more than the whole machine learning part. It’s just like optimize, optimize, optimize. That’s been the hardest hurdle,” explained Lachapelle.

Beyond latency, there is also the question of cost.

Because the denoising runs in the cloud, the company has to add an extra processing step for every attendee in every meeting hosted in Google Cloud.

“There’s a cost associated with it,” Lachapelle acknowledged.

“Absolutely. But in our modeling, we felt that this just moves the needle so much that this is something we need to do. And it’s a feature that we will be bringing at first to our paying G Suite customers. As we see how much it’s being used and we continue to improve it, hopefully we’ll be able to bring it to a larger and larger group of users.”

Google’s addition of AI-powered noise cancellation to Meet comes as video conferencing tools see a massive surge in users, with the coronavirus forcing millions of people to work and study from home.

With this, Google is trying to compete with Zoom, whose daily meeting participants soared from 10 million to over 200 million in just three months.
