It's safe to say that practically everyone knows how to click and drag things on a computer. DragGAN builds on that.

AI continues to wow people, as new products powered by smarter models accomplish what was previously impossible, or even unthinkable. DragGAN is like Adobe Photoshop, but with an AI that takes click-and-drag to the next level.

With click-and-drag, users direct the AI to follow their input, while the result stays on the manifold of realistic images.

A method that leverages a pre-trained GAN to synthesize images, DragGAN is essentially an interactive approach to intuitive point-based image editing.

Created by a group of researchers from Google, alongside the Max Planck Institute for Informatics and MIT CSAIL, DragGAN relies on a general GAN framework, rather than on the more traditional domain-specific modelling or auxiliary networks.

To build this AI, the researchers combined two components: an optimization of latent codes that incrementally moves multiple handle points towards their target locations, and a point tracking procedure that traces the trajectory of the handle points.
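To make the two components more concrete, here is a minimal toy sketch in Python. It is not the researchers' implementation: the "generator" is a hypothetical stand-in that draws a single Gaussian blob whose centre is the latent code, the loss is a simple squared difference, and gradients are estimated numerically instead of by backpropagation through a real GAN. All function names and parameters (`feature`, `motion_loss`, `track`, `drag`, `SIGMA`, the patch radii) are assumptions made for illustration. The handle is assumed to start on the blob centre, so that its feature value is distinctive enough to track.

```python
import numpy as np

SIGMA = 3.0  # width of the toy blob (assumed value)

def feature(w, x, y):
    """Toy stand-in for a generator feature map: latent w = (cx, cy) is a blob centre."""
    return np.exp(-((x - w[0]) ** 2 + (y - w[1]) ** 2) / (2 * SIGMA ** 2))

def patch(center, radius):
    """Integer pixel coordinates in a square window around `center`."""
    offsets = np.arange(-radius, radius + 1)
    xs, ys = np.meshgrid(center[0] + offsets, center[1] + offsets)
    return xs.ravel(), ys.ravel()

def motion_loss(w_new, w_old, handle, target, radius=2):
    """Features one unit step towards the target should match the current
    features in the patch around the handle."""
    step = (target - handle) / np.linalg.norm(target - handle)
    xs, ys = patch(handle, radius)
    reference = feature(w_old, xs, ys)  # current features, held constant
    shifted = feature(w_new, xs + step[0], ys + step[1])
    return np.sum((shifted - reference) ** 2)

def numeric_grad(f, w, eps=1e-4):
    """Central finite differences, used here in place of backpropagation."""
    grad = np.zeros_like(w)
    for i in range(len(w)):
        delta = np.zeros_like(w)
        delta[i] = eps
        grad[i] = (f(w + delta) - f(w - delta)) / (2 * eps)
    return grad

def track(w, handle, ref_feature, radius=3):
    """Point tracking: nearest-neighbour search for the handle's original
    feature value in a window around the previous handle position."""
    xs, ys = patch(handle, radius)
    best = np.argmin(np.abs(feature(w, xs, ys) - ref_feature))
    return np.array([xs[best], ys[best]])

def drag(w, handle, target, lr=0.5, inner_steps=30, max_iters=50):
    w = np.asarray(w, dtype=float)
    handle, target = np.asarray(handle), np.asarray(target)
    ref_feature = feature(w, handle[0], handle[1])  # feature to keep tracking
    for _ in range(max_iters):
        if np.array_equal(handle, target):
            break
        w_old = w.copy()
        # Latent optimization: a few gradient steps on the motion loss.
        for _ in range(inner_steps):
            w = w - lr * numeric_grad(
                lambda v: motion_loss(v, w_old, handle, target), w)
        # Point tracking: find where the handle ended up after the update.
        handle = track(w, handle, ref_feature)
    return w, handle

final_w, final_handle = drag(w=[8.0, 8.0], handle=[8, 8], target=[20, 14])
print(final_handle, final_w)
```

The loop alternates between nudging the latent code and re-locating the handle, and it stops once the tracked handle coincides with the target or the iteration budget runs out. Tracking a single scalar feature only works here because the toy image has one blob; a real generator would compare feature vectors.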

Initially, DragGAN was introduced only in a research paper (PDF).

"Existing approaches gain controllability of generative adversarial networks (GANs) via manually annotated training data or a prior 3D model, which often lack flexibility, precision, and generality."

"In this work, we study a powerful yet much less explored way of controlling GANs, that is, to 'drag' any points of the image to precisely reach target points in a user-interactive manner [...]"

On their web page, the researchers explain that synthesizing visual content that meets users' needs often requires flexible and precise controllability, which is what the tool is meant to provide.

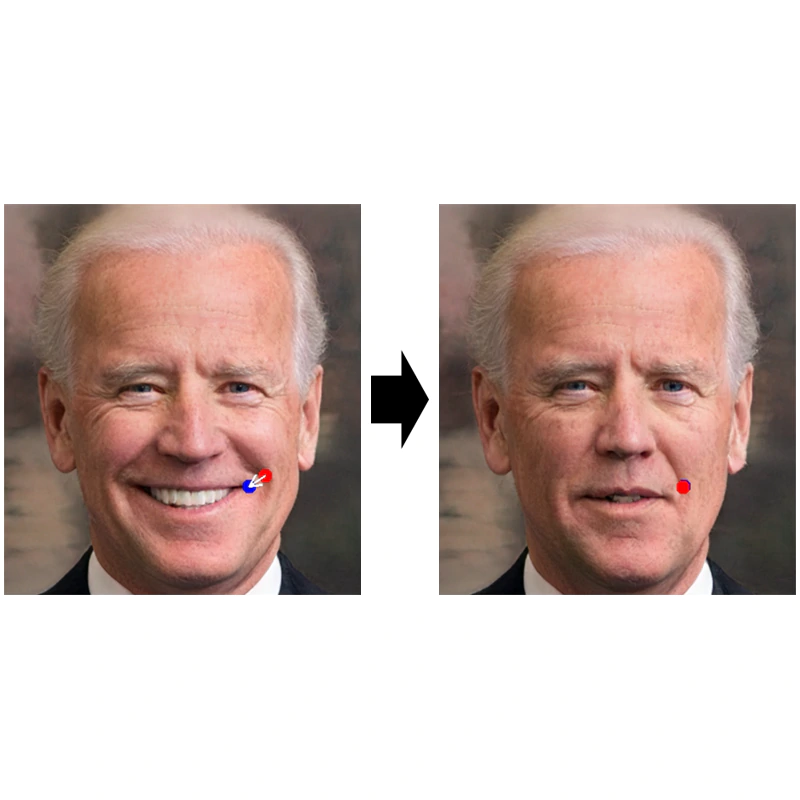

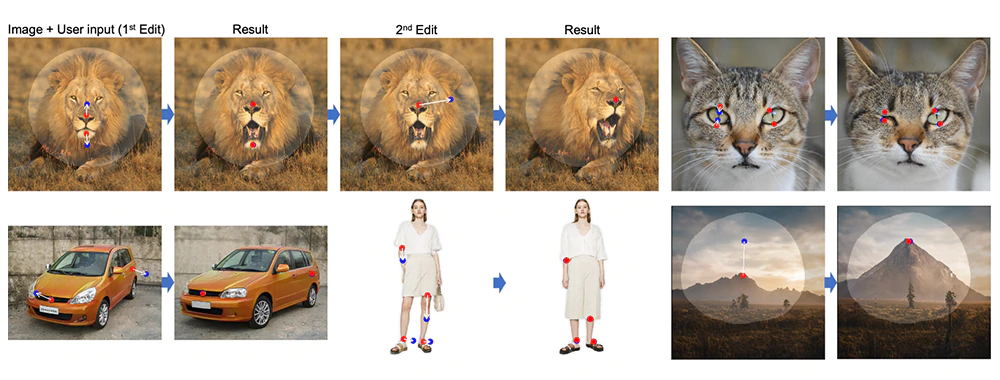

Because of the AI it uses, DragGAN is able to change the pose, shape, expression, and layout of generated objects.

Previously, controllability of GANs was achieved via manually annotated training data or a prior 3D model, which, according to the researchers, "often lack flexibility, precision, and generality."

With DragGAN, "we study a powerful yet much less explored way of controlling GANs."

With the tool, users can not only change the dimensions of a car or turn a smile into a frown with a simple click and drag, but also rotate a picture’s subject as if it were a 3D model, changing the direction someone is facing, for example.

The research team noted in its paper that the AI can even generate new details while regenerating the edited parts of an image, when those details are needed to make the edit convincing.

"Our approach can hallucinate occluded content, like the teeth inside a lion’s mouth, and can deform following the object’s rigidity, like the bending of a horse leg."

One demo even shows the user adjusting the reflections on a lake and the height of a mountain range with a few clicks.

Using this method, DragGAN can yield pixel-precise image deformations with interactive performance.

And as future AI products become smarter, the tools to manipulate images will continue to grow.

Generative AI products have taken the world by storm, thanks to diffusion models like DALL·E 2, Imagen, Stable Diffusion, and Midjourney.

But this GAN is different, and may be more impactful than image generators.

This is because DragGAN allows users to edit already-generated images to their own specification, rather than creating new ones that may serve malicious purposes.