AI chatbots are used in many places, from messaging apps to organizations' own apps and website chat boxes.

The biggest problem with these AI bots is that even the most advanced of them can't hold a decent conversation. In short, while they are fast and reliable at answering quick-fire questions, they are still dumb as a rock for the most part.

Researchers and developers are trying to fix this issue by making systems better at generating written words.

One such effort is the TalkToTransformer.com website, created by Canadian machine learning engineer Adam King.

King made the site to showcase an AI language system called 'GPT-2', which comes from the research lab OpenAI. The site runs a trimmed, slimmed-down version of GPT-2, giving visitors access to a technology that, at the time, was otherwise only accessible to selected scientists and journalists.


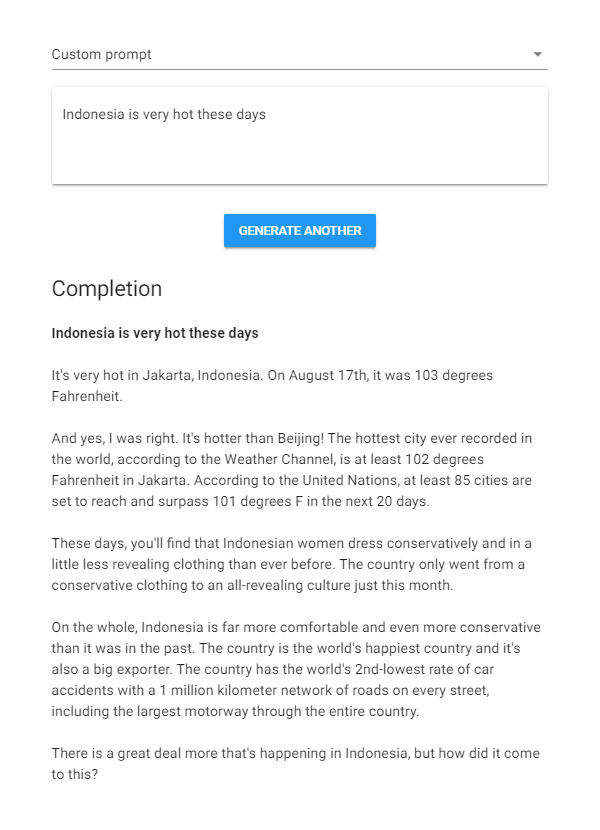

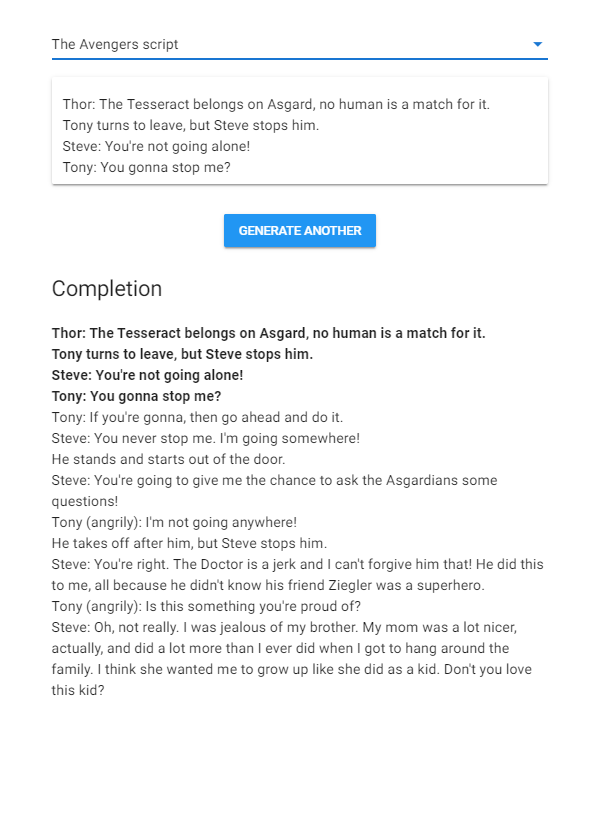

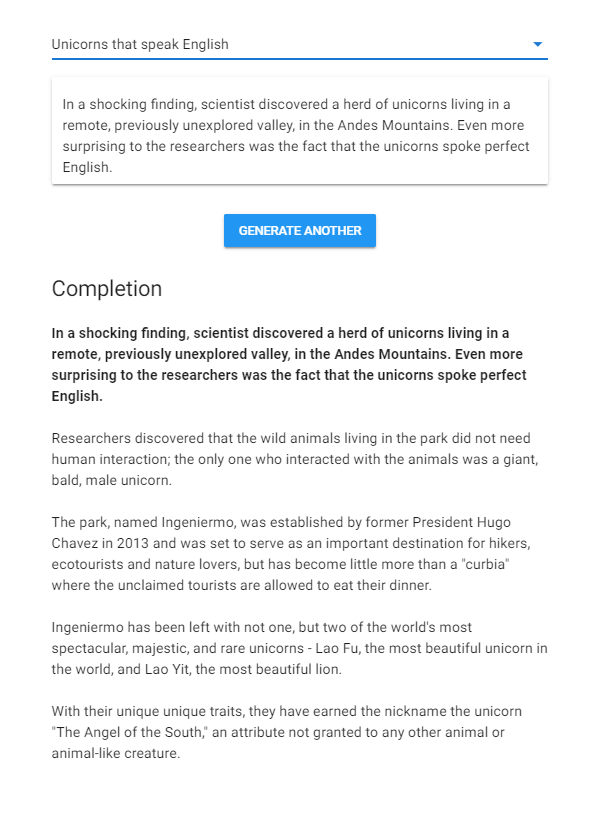

The 'Talk to Transformer' website has a simple design with a prominent text field that shows "how a modern neural network completes your text."

All visitors need to do is type something, then watch the neural network guess what comes next.

"While GPT-2 was only trained to predict the next word in a text, it surprisingly learned basic competence in some tasks like translating between languages and answering questions. That's without ever being told that it would be evaluated on those tasks," explained King on the page.
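To make "predicting the next word" concrete, here is a minimal sketch of the idea in Python. It is not GPT-2 and not the code behind King's site: it swaps the neural network for a simple table that counts which word follows which. The function names, the tiny corpus, and the sampling settings are all assumptions made for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def complete(model, prompt, max_new_words=8, seed=0):
    """Extend the prompt one word at a time by sampling a likely next word."""
    rng = random.Random(seed)
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word was never seen with a follower
        choices, counts = zip(*candidates.items())
        # More frequent followers are proportionally more likely to be picked.
        words.append(rng.choices(choices, weights=counts, k=1)[0])
    return " ".join(words)


# Hypothetical toy corpus, standing in for the web-scale data GPT-2 learned from.
corpus = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."
model = train_bigram_model(corpus)
print(complete(model, "the dog"))
```

Because this toy only ever looks at the single most recent word, its output wanders almost immediately. GPT-2 conditions on far more context and learns much richer patterns, but the loop is the same in spirit: guess a next word, append it, and repeat.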

OpenAI initially released only small and medium-sized versions of the full GPT-2 model.

King's website runs on the medium-sized model OpenAI released on May 3rd, called '345M' for the 345 million parameters it uses.

On the plus side, the model is incredibly flexible.

King's website shows how GPT-2 can recognize a huge variety of inputs, from news articles and stories to song lyrics, poems, recipes, code, and even HTML. The AI can also identify familiar characters from franchises like Avengers and The Lord of the Rings.

But still, the AI has limits.

When talking to the AI, visitors soon see the limits of what it can do. For example, the system doesn't understand language or the world at large. The text it generates has surface-level coherence but no long-term structure.

When writing stories, the AI creates characters that appear and disappear at random, with no consistency in their needs or actions. The same happens when it generates dialogue: the conversations it creates drift aimlessly from topic to topic.

GPT-2 was created from a single algorithm that has learned to generate text by studying a huge dataset scraped from the web and other sources.

It learned by looking for patterns in this information, and the result is supposed to be a multitalented system. While it's still dumb in one way or another, it is a step in the right direction.