People not only love to sing and play instruments; many also like to hum.

Humming is like singing, except that it produces a wordless tone with the lips closed, without articulating any speech sounds. People often do it while reading, sipping a cup of coffee, showering, or doing practically anything else.

Humming is also used in some music genres, from classical to jazz and even R&B.

The activity is so common among humans that Google decided to run a little experiment with it using AI.

Using what it calls 'Tone Transfer', Google has created a machine learning technology that can turn any unpolished melody into sounds generated from musical instruments. For example, it allows users to turn their hums into a violin tune.

All users need to do is go to its official website on a computer or an Android phone and choose the "Let's play!" option. Alternatively, they can select the "Watch short video" option to see how it works.

If users choose the former, they will be presented with a set of sample inputs, including acapella (singing), birds (chirping), Carnatic (singing), cello (performing), pots and pans (clanging), or synthesizer (riffing).

These are prepared samples that users can quickly transform into different instrument sounds.

To use their own voice, users need to click on the "Add your own" option.

This allows them to record any sound they create for 15 seconds.

Users can use their own voice, make a sound by tapping, or even play an actual instrument.

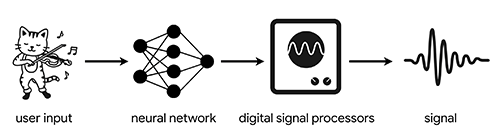

After that, Google's machine learning algorithms listen to the sound and attempt to convert it into a digital representation, which is then transformed into the sound of any of the available instruments.

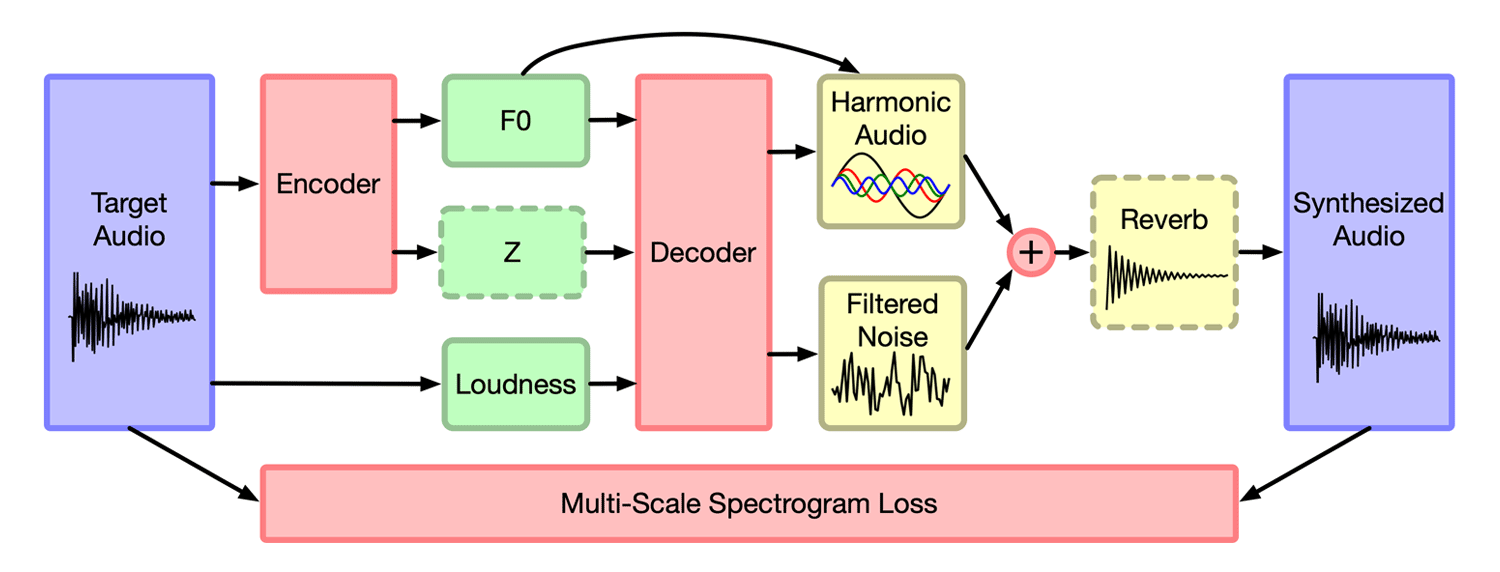

To make this happen, the AI uses the Differentiable Digital Signal Processing (DDSP) library created by Magenta, a Google research project that focuses on developing open-source technologies to explore the use of machine learning in art.

Magenta explores the role of machine learning as a tool in the creative process, and it is distributed as a Python library powered by TensorFlow. The library includes utilities for manipulating source data (primarily music and images), using this data to train machine learning models, and finally generating new content from these models.

As explained in Magenta's blog post, controlling certain parameters of digital signal processing (DSP) components makes it possible to produce natural-sounding instrument tones.
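To make the idea of "controlling DSP parameters" concrete, here is a minimal sketch of a harmonic (additive) synthesizer in plain Python. It is not the DDSP library's API: the function name `harmonic_synth`, the sample rate, and the two harmonic profiles are assumptions chosen for illustration. The point is only that a handful of parameters (pitch, loudness, and the relative strength of each harmonic) is enough to change the character of a tone.

```python
import math

SAMPLE_RATE = 16000  # samples per second (assumed value for this sketch)

def harmonic_synth(f0_hz, amplitude, harmonic_weights, duration_s,
                   sample_rate=SAMPLE_RATE):
    """Sum sine waves at integer multiples of f0_hz.

    harmonic_weights[k] scales harmonic k+1; the weights are normalised
    so the output never exceeds `amplitude` in absolute value.
    """
    total = sum(harmonic_weights)
    weights = [w / total for w in harmonic_weights]
    n_samples = round(duration_s * sample_rate)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        value = sum(
            w * math.sin(2 * math.pi * f0_hz * (k + 1) * t)
            for k, w in enumerate(weights)
        )
        samples.append(amplitude * value)
    return samples

# Two hypothetical timbres: same pitch and loudness, different harmonic mix.
flute_like = harmonic_synth(440.0, 0.5, [1.0, 0.1, 0.05], duration_s=0.1)
violin_like = harmonic_synth(440.0, 0.5, [1.0, 0.8, 0.6, 0.5, 0.4], duration_s=0.1)
```

Both lists hold the same note, but the second carries much more energy in its upper harmonics, which is the kind of difference a listener hears as a change of instrument.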

In an ICLR 2020 paper, Google's team wrote that DDSP allows them to train audio synthesis models with fewer parameters and less data.

Referring to models that generate audio samples directly in the time or frequency domain, the authors wrote:

"While sufficient to express any signal, these representations are inefficient, as they do not utilize existing knowledge of how sound is generated and perceived. A third approach (vocoders/synthesizers) successfully incorporates strong domain knowledge of signal processing and perception, but has been less actively researched due to limited expressivity and difficulty integrating with modern auto-differentiation-based machine learning methods."

"Focusing on audio synthesis, we achieve high-fidelity generation without the need for large autoregressive models or adversarial losses, demonstrating that DDSP enables utilizing strong inductive biases without losing the expressive power of neural networks."

These models help tools such as Tone Transfer create high-quality audio from user input. Tone Transfer uses a neural network to turn users' audio input into DSP control parameters, which are then rendered as the sound of one of several different instruments.
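The flow described above can be sketched in plain Python as "analyse the input, then resynthesise it with a different timbre". This is an illustration under stated assumptions, not Tone Transfer's implementation: pitch is estimated with a simple autocorrelation search, loudness with per-frame RMS, and a fixed, hand-picked harmonic profile stands in for the trained neural network that would normally predict the synthesizer parameters. All function names, the sample rate, and the frame size are hypothetical.

```python
import math

SAMPLE_RATE = 8000  # assumed sample rate for this sketch
FRAME = 400         # 50 ms analysis frames

def estimate_f0(frame, sample_rate=SAMPLE_RATE, fmin=80.0, fmax=500.0):
    """Return the pitch whose lag has the highest autocorrelation."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag

def analyse(audio):
    """Split audio into frames and return one (f0_hz, rms) pair per frame."""
    controls = []
    for start in range(0, len(audio) - FRAME + 1, FRAME):
        frame = audio[start:start + FRAME]
        rms = math.sqrt(sum(x * x for x in frame) / FRAME)
        controls.append((estimate_f0(frame), rms))
    return controls

def resynthesise(controls, harmonic_weights):
    """Drive a harmonic oscillator bank with the extracted pitch and loudness.

    harmonic_weights is the stand-in for what a trained model would predict.
    With fmax = 500 Hz and four harmonics, all partials stay below Nyquist.
    """
    total = sum(harmonic_weights)
    weights = [w / total for w in harmonic_weights]
    phase = 0.0  # accumulated so the tone stays continuous across frames
    out = []
    for f0_hz, rms in controls:
        step = 2 * math.pi * f0_hz / SAMPLE_RATE
        for _ in range(FRAME):
            phase += step
            out.append(rms * sum(w * math.sin((k + 1) * phase)
                                 for k, w in enumerate(weights)))
    return out

# A synthetic "hum": half a second of a 200 Hz sine wave.
hum = [0.3 * math.sin(2 * math.pi * 200.0 * n / SAMPLE_RATE)
       for n in range(SAMPLE_RATE // 2)]
controls = analyse(hum)
violin_like = resynthesise(controls, harmonic_weights=[1.0, 0.7, 0.5, 0.3])
```

The output keeps the pitch and loudness contour of the hum while replacing its harmonic content. In the real system the harmonic content would come from a model trained on recordings of the target instrument rather than from a fixed list.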

Tone Transfer showcases what people can do with AI.

With the tool, Google is also showing how the mindless humming people do while busy with something else could be turned into an instrumental solo by an algorithm.

Google research scientist Hanoi Hantrakul describes this technology as deconstructing sound into “Play-Doh”, which can then be moulded into something else.

“We do this by training a machine learning model to distill what makes an instrument sound like that particular instrument,” Hanoi explained.

“You can sing into it, and you bang on some pots, and you have a cat meow in the background,” he continued.

“That’s a lot of inspiration for the model to work with, and I feel like that's where the magic of Tone Transfer happens.”

It should be noted that the quality of Tone Transfer's output is highly dependent on the input.

If, for example, users hum in a place with a lot of background noise, or use a low-quality microphone, the output may not be very good.

Users may need a few tries before they get a recording that produces the output they want.