AI is smart, and it can be trained to outperform humans at a particular task. There are also ways to test that claim.

Yet AI can still fail at some very basic things. One well-known way to probe machine intelligence is the Turing test, which checks whether a machine exhibits intelligent behavior equivalent to, or indistinguishable from, that of a human. In other words, it tries to determine whether a computer is capable of thinking like a human being.

Another way to probe this is with a game called the 'Game of Life'.

Also known simply as 'Life', it was created by British mathematician John Horton Conway in 1970. It is a zero-player game: how it evolves is determined entirely by its initial state, so it requires no input beyond that starting configuration.

This grid-based cellular automaton is very popular in discussions about science, computation, and artificial intelligence. It is useful for testing AI because its rules are very simple, yet they can produce complex behavior.

Life is also a challenge in its own right for AI, as researchers from Swarthmore College and the Los Alamos National Laboratory have shown in a paper titled “It’s Hard for Neural Networks To Learn the Game of Life.”

The Game of Life tracks whether each cell on a grid is on or off (alive or dead) across a series of time steps. Each cell's neighbors are the eight cells surrounding it. After a player sets up the initial state, every subsequent time step follows these rules:

- If a live cell has fewer than two live neighbors, it dies of underpopulation.

- If a live cell has more than three live neighbors, it dies of overpopulation.

- If a live cell has exactly two or three live neighbors, it survives.

- If a dead cell has exactly three live neighbors, it comes to life.

From these four simple rules, a player can design initial states that produce stable, oscillating, and gliding patterns.
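The four rules collapse into one condition: a cell is alive at the next step if it has exactly three live neighbors, or if it is alive now and has exactly two. The sketch below is a minimal illustration, assuming an unbounded grid with live cells stored as a set of `(row, col)` coordinates; `step` is a hypothetical helper name, not code from the paper.

```python
from collections import Counter

# Assumption for illustration: an unbounded grid where live cells are
# stored as a set of (row, col) tuples and every other cell is dead.
def step(live):
    """Apply one time step of Conway's rules to a set of live cells."""
    # For every cell adjacent to a live cell, count its live neighbors.
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Exactly 3 neighbors: survival or birth. Exactly 2: survival only if already alive.
    # Every other count means death (under- or overpopulation) or staying dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

# A "blinker": three cells in a row flip between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(step(blinker)))           # [(0, 1), (1, 1), (2, 1)]
print(step(step(blinker)) == blinker)  # True
```

The blinker is one of the oscillating patterns mentioned above: it returns to its starting shape every two steps.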

If the player chooses the initial state well, the cells will keep living through the time steps. But if the initial state is poorly chosen, the cells will eventually die out and nothing will survive.

The thing about this game is that, no matter how complex the grid becomes, humans can predict the state of each cell at the next time step by applying the same rules. Neural networks, on the other hand, despite being extremely good at predictions, can have difficulty learning the game.

“We already know a solution,” Jacob Springer, a computer science student at Swarthmore College and co-author of the paper, told TechTalks.

“We can write down by hand a neural network that implements the Game of Life, and therefore we can compare the learned solutions to our hand-crafted one. This is not the case in.”
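To make the idea of a hand-written network concrete, here is a small construction of our own. It is a hypothetical example and not necessarily the network used in the paper. A single 3x3 convolution gives each neighbor a weight of 2 and the center cell a weight of 1, so its output `t = 2 * neighbors + cell` equals 5, 6, or 7 exactly when the cell should be alive next. Four ReLU units with hand-set biases then combine into an indicator for that range. The sketch assumes that cells beyond the grid edge are dead.

```python
# Hypothetical hand-set weights (our construction, not taken from the paper).
KERNEL = [[2, 2, 2],
          [2, 1, 2],
          [2, 2, 2]]
HIDDEN_BIASES = [-4, -5, -7, -8]
OUTPUT_WEIGHTS = [1, -1, -1, 1]

def relu(x):
    return max(0, x)

def conv3x3(grid, kernel):
    """Zero-padded 3x3 convolution: cells outside the grid count as dead."""
    rows, cols = len(grid), len(grid[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        total += kernel[dr + 1][dc + 1] * grid[rr][cc]
            out[r][c] = total
    return out

def handcrafted_life_net(grid):
    # Layer 1: t = 2 * (live neighbors) + (cell itself).
    # t == 5 means 2 neighbors and alive; t == 6 or 7 means 3 neighbors.
    t = conv3x3(grid, KERNEL)
    # Hidden ReLU layer + linear output:
    # relu(t-4) - relu(t-5) - relu(t-7) + relu(t-8) is 1 for t in {5, 6, 7}, else 0.
    return [
        [sum(w * relu(v + b) for w, b in zip(OUTPUT_WEIGHTS, HIDDEN_BIASES)) for v in row]
        for row in t
    ]

blinker = [[0, 0, 0, 0, 0],
           [0, 0, 0, 0, 0],
           [0, 1, 1, 1, 0],
           [0, 0, 0, 0, 0],
           [0, 0, 0, 0, 0]]
for row in handcrafted_life_net(blinker):
    print(row)
```

Nothing here is learned: every weight is written down by hand. A trained network would have to discover an equivalent set of weights from examples, and that is the comparison Springer describes.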

Testing AI on the Game of Life is unlike testing it in domains such as computer vision or natural language processing, where answers can be subjective or ambiguous. If an AI has truly learned the rules of the game, it will reach 100% accuracy.

This is why the Game of Life is popular among researchers.

“There’s no ambiguity. If the network fails even once, then it has not correctly learned the rules,” Springer says.
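Springer's criterion is all-or-nothing: a prediction counts only if every cell on the board is right. Below is a minimal sketch of such a metric, assuming finite grids whose off-grid cells are dead. `count_neighbors`, `life_step`, `flawed_step`, and `exact_accuracy` are hypothetical names, and `flawed_step` is an invented stand-in for a network that has learned every rule except overpopulation.

```python
import random

def count_neighbors(grid, r, c):
    """Live cells among the eight neighbors of (r, c); off-grid cells are dead."""
    rows, cols = len(grid), len(grid[0])
    return sum(
        grid[r + dr][c + dc]
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0) and 0 <= r + dr < rows and 0 <= c + dc < cols
    )

def life_step(grid):
    """Ground truth: Conway's rules applied to every cell."""
    return [
        [1 if count_neighbors(grid, r, c) == 3
         or (grid[r][c] and count_neighbors(grid, r, c) == 2) else 0
         for c in range(len(grid[0]))]
        for r in range(len(grid))
    ]

def flawed_step(grid):
    """Hypothetical 'model' that never learned the overpopulation rule."""
    return [
        [1 if count_neighbors(grid, r, c) == 3
         or (grid[r][c] and count_neighbors(grid, r, c) >= 2) else 0
         for c in range(len(grid[0]))]
        for r in range(len(grid))
    ]

def exact_accuracy(predict, boards):
    """Fraction of boards where *every* cell of the prediction is correct."""
    return sum(predict(b) == life_step(b) for b in boards) / len(boards)

rng = random.Random(0)
boards = [[[rng.randint(0, 1) for _ in range(8)] for _ in range(8)] for _ in range(100)]
print(exact_accuracy(life_step, boards))  # 1.0
print(exact_accuracy(flawed_step, boards))
```

With this metric, one wrong cell fails the whole board. A model that gets almost every cell right can therefore still score poorly, which matches the "no ambiguity" standard in the quote.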

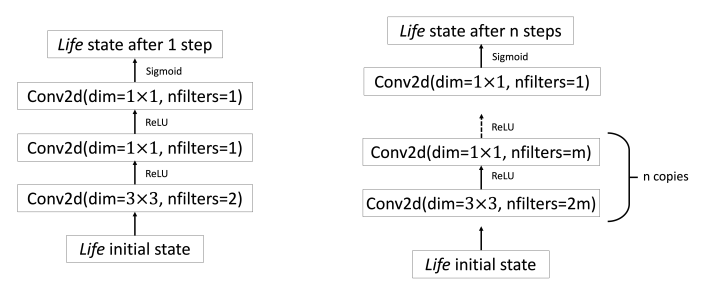

In their work, the researchers found that a neural network built specifically for this game could correctly predict the sequence of Game of Life grid states only if its training converged on the hand-crafted parameter values.

But in most cases, the trained network could not find the optimal solution, and its performance degraded further as the number of steps increased. The researchers also found that the outcome of training depended heavily on the chosen set of training examples and on the initial parameters.

“For many problems, you don’t have a lot of choice in dataset; you get the data that you can collect, so if there is a problem with your dataset, you may have trouble training the neural network,” Springer says.

From this test, the researchers concluded that a huge deep learning model might not be the optimal architecture for a problem, but it has a greater chance of finding a good solution.

But this requires a lot of resources.

Training one complex AI model requires more compute, which means greater energy consumption and carbon emissions. What's more, it may not be feasible in domains where data is subject to ethical considerations and privacy laws.

On the other side of the equation, this test suggests that a smaller deep learning model likely exists that can deliver the same or better results with smaller datasets, given the right choice of training examples and initial parameters. But that holds only if researchers can find it.

“Given the difficulty that we have found for small neural networks to learn the Game of Life, which can be expressed with relatively simple symbolic rules, I would expect that most sophisticated symbol manipulation would be even more difficult for neural networks to learn, and would require even larger neural networks,” Springer said.

“Our result does not necessarily suggest that neural networks cannot learn and execute symbolic rules to make decisions, however, it suggests that these types of systems may be very difficult to learn, especially as the complexity of the problem increases.”

“We hope that this paper will promote research into the limitations of neural networks so that we can better understand the flaws that necessitate overcomplete networks for learning. We hope that our result will drive development into better learning algorithms that do not face the drawbacks of gradient-based learning,” the authors of the paper write.

“I think the results certainly motivate research into improved search algorithms, or for methods to improve the efficiency of large networks,” Springer said.