Becoming invisible is a superpower found only in comic books and science fiction. Humanity, however, is getting closer.

At least, that is, when it comes to detectors and face recognition AIs. Researchers from the University of Maryland have managed to create a real-life “invisibility cloak” to trick AI-powered cameras. The technology essentially stops AIs in their tracks, deliberately preventing them from recognizing people.

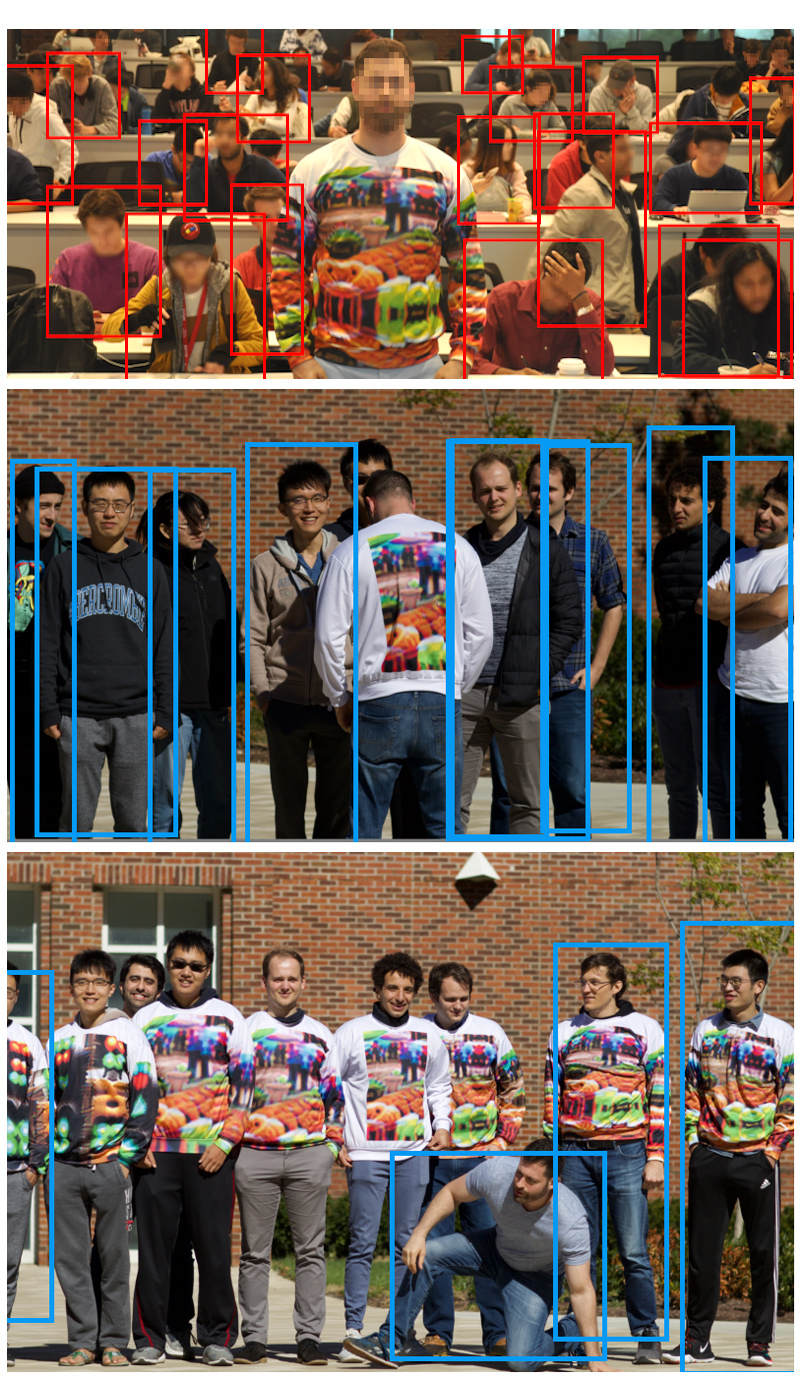

What the researchers created is a sweater.

But it is no ordinary sweater, because it uses "adversarial patterns" that render its wearer "invisible."

According to the researchers on UMD’s Department of Computer Science web page:

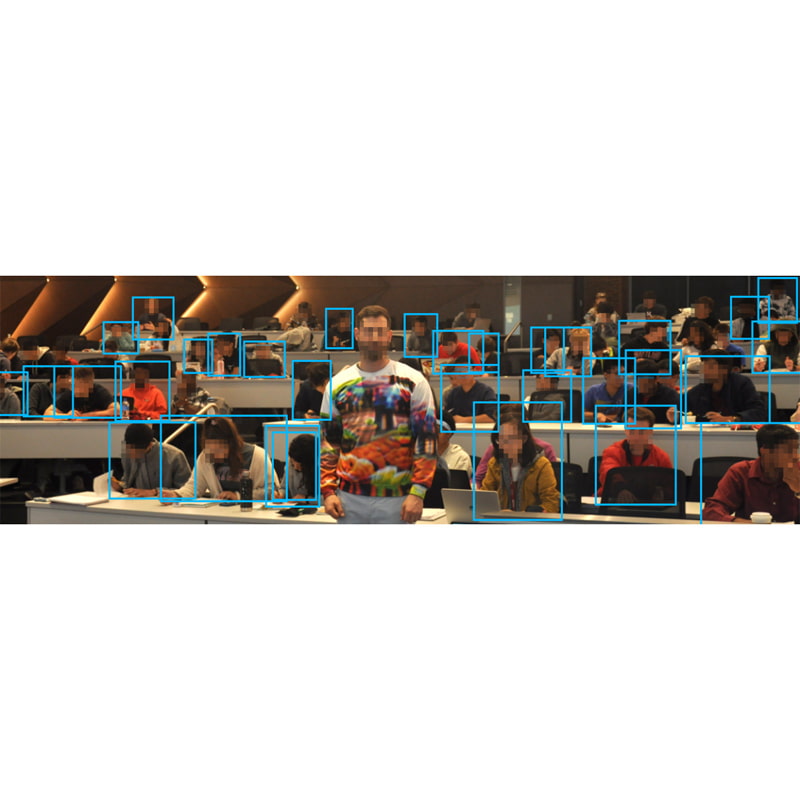

"In our demonstration, the YOLOv2 detector was able to fool the detector with a pattern trained on a COCO data set with a carefully constructed target," the researchers noted.

It all began when the researchers, who also work with Facebook AI, started testing machine learning systems for vulnerabilities.

They found that a colorful print on clothing can render AI cameras useless.

According to their research paper, most work on real-world adversarial attacks has focused on classifiers, which assign a holistic label to an entire image, rather than on detectors, which localize objects within an image.

They said that AI detectors work by considering hundreds or thousands of "priors" (potential bounding boxes) within the image with different locations, sizes, and aspect ratios.
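To make the idea of priors concrete, here is a minimal sketch that lays candidate boxes over an image grid. The function name `make_priors` and all of the numbers (image size, grid, scales, aspect ratios) are illustrative assumptions, not YOLOv2's actual configuration.

```python
from itertools import product


def make_priors(image_size, grid, scales, aspect_ratios):
    """Return candidate boxes (cx, cy, w, h) in pixels for every grid cell."""
    cell = image_size / grid
    priors = []
    for row, col in product(range(grid), repeat=2):
        # Each box is centered on its grid cell.
        cx = (col + 0.5) * cell
        cy = (row + 0.5) * cell
        for scale, ratio in product(scales, aspect_ratios):
            # ratio is width / height; the box area stays at scale ** 2.
            w = scale * ratio ** 0.5
            h = scale / ratio ** 0.5
            priors.append((cx, cy, w, h))
    return priors


# Hypothetical settings chosen only for illustration.
priors = make_priors(
    image_size=416,
    grid=13,
    scales=(64, 128, 256),
    aspect_ratios=(0.5, 1.0, 2.0),
)
print(len(priors))  # 13 * 13 * 3 * 3 = 1521
```

Even this small grid yields more than a thousand candidate boxes, each of which gets its own chance to spot a person.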

Because an attack that evades one prior can still be caught by another, detectors are hard to fool.

In order to properly fool the detectors, an adversarial example must be able to fool all priors.

Because of this, the process is a lot more difficult than fooling a single output of a classifier.
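The difference between the two cases can be sketched in a few lines. The threshold, the function names, and the scores below are made up for illustration and do not come from the paper.

```python
THRESHOLD = 0.5  # hypothetical confidence cutoff


def classifier_fooled(person_score):
    """A classifier gives one score per image, so one low score is enough."""
    return person_score < THRESHOLD


def detector_fooled(prior_scores):
    """A detector reports a person if any prior is confident, so all must be low."""
    return all(score < THRESHOLD for score in prior_scores)


print(classifier_fooled(0.10))                    # True
print(detector_fooled([0.10, 0.05, 0.72, 0.20]))  # False: one prior still fires
print(detector_fooled([0.10, 0.05, 0.30, 0.20]))  # True
```

A single confident prior is enough to give the wearer away, which is why an attack has to suppress every one of them at once.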

To create this adversarial pattern, the researchers used the COCO dataset, on which the computer vision algorithm YOLOv2 is trained.

Only after that did the team create an opposite pattern.

It's this opposite pattern that is apparently the "adversarial pattern" needed to render AIs useless.
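The general idea of tuning a pattern until every prior stays quiet can be sketched with a toy example. This is not the researchers' method: the "detector" here is an invented linear model, and `WEIGHTS`, `THRESHOLD`, `TARGET`, and the loss are all assumptions. The real work used a trained YOLOv2 network and real images.

```python
# Toy stand-in for a detector: each row of WEIGHTS maps the pattern's
# pixel intensities to one prior's "person" score. The numbers are invented.
WEIGHTS = [
    [0.9, 0.1, 0.0, 0.0],
    [0.0, 0.8, 0.2, 0.0],
    [0.0, 0.0, 0.3, 0.7],
]
THRESHOLD = 0.5  # a prior reports a detection at or above this score
TARGET = 0.3     # push scores toward this, leaving a margin under THRESHOLD


def prior_scores(pattern):
    """Score the pattern under every prior of the toy detector."""
    return [sum(w * p for w, p in zip(row, pattern)) for row in WEIGHTS]


def optimize_pattern(pattern, steps=200, lr=0.1):
    """Projected gradient descent on sum_k max(score_k - TARGET, 0) ** 2."""
    pattern = list(pattern)
    for _ in range(steps):
        # Only priors still above TARGET contribute to the gradient.
        excess = [max(s - TARGET, 0.0) for s in prior_scores(pattern)]
        for j in range(len(pattern)):
            grad = sum(2.0 * e * row[j] for e, row in zip(excess, WEIGHTS))
            # Keep every pixel a printable intensity in [0, 1].
            pattern[j] = min(1.0, max(0.0, pattern[j] - lr * grad))
    return pattern


start = [1.0, 1.0, 1.0, 1.0]
final = optimize_pattern(start)
print(max(prior_scores(start)) >= THRESHOLD)  # True: detected before
print(max(prior_scores(final)) < THRESHOLD)   # True: every prior is below threshold
```

The loss sums over all priors, so the pattern is only finished once none of them fires, which mirrors the requirement described above.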

The team then turned the adversarial pattern into an image and printed it on a sweater.

As a result, anyone wearing the 'invisibility cloak' can become imperceptible to detectors.

There is a significant drawback, though.

Tests reportedly found that the pattern has only a 50% success rate.