AI systems are only as good as the data we put into them.

Since humans are already biased and, in some cases, racist, we pass those traits on to the AI systems we create.

Machine learning and deep learning have many advantages. But again, when the data is already flawed, the end product inherits those flaws.

Since the advancement of AI is inevitable, and in order to leverage AI for the better, we need to ask how we can put an end to these controversies. We also need to know how to establish the criteria and standards for weighing the robustness and trustworthiness of AI algorithms that are helping or replacing humans in making important and critical decisions.

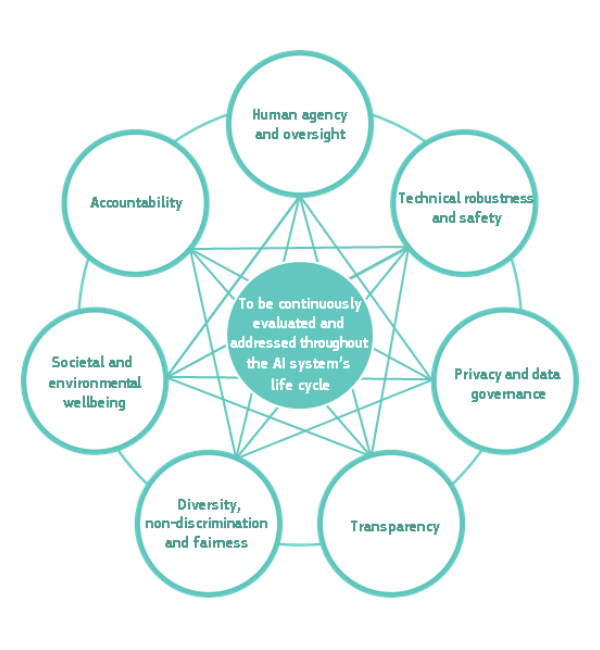

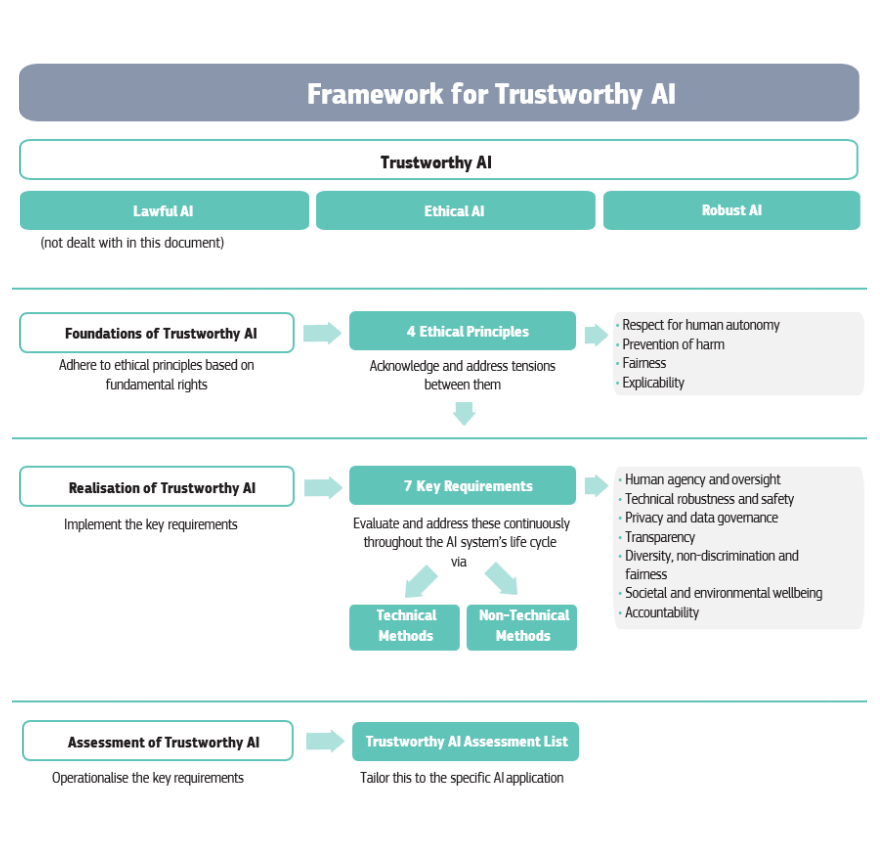

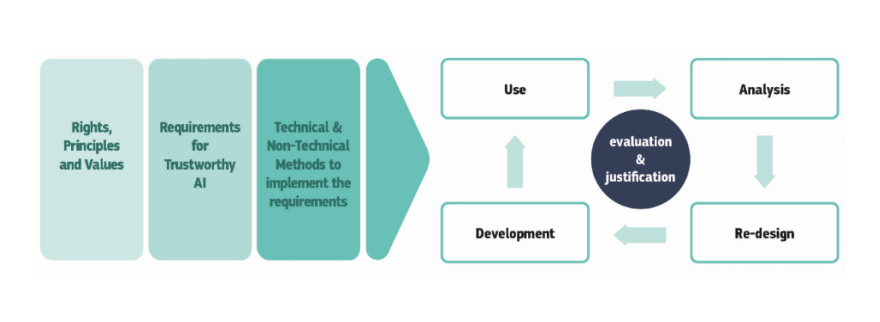

In a published document titled “Ethics Guidelines for Trustworthy AI”, the European Commission has laid out seven essential requirements for developing ethical and trustworthy AI.

While the human race still has a lot to learn about the technology, the EC’s guidelines provide some pointers for solving some of these issues.

According to the guidelines, trustworthy AI should be:

- Lawful - respecting all applicable laws and regulations.

- Ethical - respecting ethical principles and values.

- Robust - both from a technical perspective and with regard to its social environment.

1. Human Agency And Oversight

What this means is that AI systems should act as enablers of a democratic, flourishing and equitable society by supporting users' agency and fostering fundamental rights, while at the same time allowing for human oversight.

Human agency means that users should have a choice not to become subject to an automated decision “when this produces legal effects on users or similarly significantly affects them,” according to the guidelines.

This requirement is already being challenged by the AI systems behind social media networks, news feeds, trending topics, search engine results pages, video suggestions and so forth.

2. Technical Robustness And Safety

What this means is that AI systems must reliably behave as intended while minimizing unintentional and unexpected harm. They also need to be able to prevent unacceptable harm to humans and their environment.

One of the greatest concerns about AI technologies is the threat of adversarial examples.

This kind of threat manipulates the behavior of an AI system by making small changes to its input data that are often invisible to humans. For instance, a malicious actor could change the coloring and appearance of a stop sign in a way that goes unnoticed by a human but causes an autonomous car to ignore the sign, creating a safety threat.

This happens mainly because AI algorithms work in ways that are fundamentally different from the human brain.
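To make the idea concrete, here is a minimal sketch of an adversarial perturbation against a toy linear classifier. The weights, bias and pixel values are invented for illustration and do not come from any real vision system. The sketch nudges each input by a small, bounded amount in the direction that lowers the score, in the spirit of gradient-sign attacks.

```python
# Toy illustration: a hypothetical linear "stop sign" detector.
# All weights and pixel values are made up for this sketch.

def score(weights, bias, pixels):
    """Linear score; a positive value means 'stop sign detected'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perturb(weights, pixels, epsilon):
    """Move each input by at most epsilon in the direction that lowers the score."""
    return [p - epsilon * sign(w) for w, p in zip(weights, pixels)]


weights = [0.5, -0.25, 0.75, -0.5]
bias = -0.125
pixels = [0.5, 0.5, 0.5, 0.5]

original_score = score(weights, bias, pixels)
adversarial_pixels = perturb(weights, pixels, epsilon=0.125)
adversarial_score = score(weights, bias, adversarial_pixels)

# No single input moved by more than epsilon, yet the decision flipped.
largest_change = max(abs(a - p) for a, p in zip(adversarial_pixels, pixels))
```

In this toy setup the original score is positive and the perturbed score is negative, even though no input value moved by more than 0.125. Real attacks on deep networks are more involved, but the principle of many small, coordinated changes is the same.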

The EC document recommends that AI systems should be able to fall back from machine learning to rule-based systems, or at least ask a human to intervene.
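One way such a fallback might look in code is sketched below. The function names, the confidence threshold and the toy model and rule are all hypothetical. The guidelines do not prescribe any particular mechanism.

```python
def decide(features, model, rule, min_confidence=0.9):
    """Return (decision, source), where source is 'model', 'rule' or 'human'."""
    label, confidence = model(features)
    if confidence >= min_confidence:
        return label, "model"
    # The model is unsure: fall back to a hand-written rule.
    rule_label = rule(features)
    if rule_label is not None:
        return rule_label, "rule"
    # Neither the model nor the rule can decide: ask a human.
    return None, "human"


# Hypothetical stand-ins for a trained model and a rule-based system.
def toy_model(features):
    return "approve", features["model_confidence"]


def toy_rule(features):
    if features["amount"] <= 100:
        return "approve"
    if features["amount"] >= 10_000:
        return "reject"
    return None  # the rule does not cover this case


confident = decide({"model_confidence": 0.95, "amount": 500}, toy_model, toy_rule)
by_rule = decide({"model_confidence": 0.5, "amount": 50}, toy_model, toy_rule)
escalated = decide({"model_confidence": 0.5, "amount": 500}, toy_model, toy_rule)
```

The design choice here is that the system always reports where a decision came from, so a low-confidence case is never silently handled by the model.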

3. Privacy And Data Governance

According to the EC, AI systems should guarantee privacy and data protection throughout a system’s entire life cycle. This includes everything from the information initially provided by the user, to the information generated about the user over the course of their interaction with the system.

This is difficult because, by nature, machine learning technology hungers for data. After all, AI is only as good as the data it learns from, meaning that it needs to learn from a large amount of data before it can do what it is intended to do.

More data generally leads to a more accurate AI, which is one main reason why companies using AI tend to collect more and more data from their users.

Companies like Facebook and Google have built their empires by building and monetizing comprehensive digital profiles of their users, then using this data to train their AI technologies to create personalized content, experiences and ads for those users.

But unfortunately, these companies have not always been responsible in maintaining the security and privacy of their users' data. What's more, in many cases, real human beings are involved in labeling users' personal data.

One example came when it was revealed that Amazon employed thousands of people across the world to listen to voice recordings from its Echo smart speakers to help improve the company's digital assistant, Alexa.

4. Transparency

The EC document defines AI transparency in terms of three components: traceability, explainability and communication.

AI systems that are based on machine learning and deep learning are highly complex. They can be smart and useful because they learn from data, finding patterns in it over many training iterations. However, these intelligent machines work in ways that even their developers often cannot explain.

The creators of these algorithms often don’t know the logical steps that happen behind the decisions of their AI models. This makes it very hard for them to find the reasons behind the errors these algorithms make.

The EC specifically recommends that developers of AI systems document their development process and the data they use to train their algorithms, and explain their automated decisions in ways that are understandable to humans.
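As a rough sketch of what this kind of documentation could look like in practice, the snippet below records each automated decision together with the model version, the training data it relied on, and a plain-language explanation. The field names and values are assumptions made for illustration. The EC document does not define a record format.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class DecisionRecord:
    """One traceable entry per automated decision (hypothetical schema)."""
    model_version: str
    training_data: str
    inputs: dict
    decision: str
    explanation: str


def to_log_line(record):
    """Serialize a record as one JSON line so it can be appended to an audit log."""
    return json.dumps(asdict(record), sort_keys=True)


record = DecisionRecord(
    model_version="demo-model-0.1",
    training_data="demo-dataset-2019",
    inputs={"amount": 500},
    decision="refer to human",
    explanation="Model confidence was below the configured threshold.",
)
log_line = to_log_line(record)
```

Keeping the explanation next to the inputs and the model version means a later reviewer can see what was decided, why, and which model and data produced the decision.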

Another important point the EC document raises about communication is that "AI systems should not represent themselves as humans to users; humans have the right to be informed that they are interacting with an AI system."

For example, Google Duplex, which uses AI to place calls on behalf of its users, can make restaurant and salon reservations. The controversy was that the assistant did not present itself as an AI agent, leading the person on the phone to think they were speaking to a real human.

5. Diversity

Biases in AI algorithms are common. For a long time, we humans believed that AI would not make subjective decisions based on bias. However, with machine learning algorithms, AI systems develop their behavior from their training data, and they can reflect and amplify the biases contained in those data sets.

For example, AI systems have been found to treat men as more dominant than women, to favor white and light skin over dark skin, and to associate programming with men and homemaking with women.

This happens because AI models are often trained on data that is publicly available, and this data often contains hidden biases that already exist in society.

To prevent this kind of unfair bias against certain groups, the EC's guidelines recommend that AI developers make sure their AI systems’ data sets are inclusive. And to address hidden biases, the EC recommends that companies developing AI systems hire people from diverse backgrounds, cultures and disciplines.
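A very simple first check on whether a data set is inclusive is to measure how well each group is represented in it. The sketch below is one possible way to do that. The `group` attribute, the sample records and the 20% threshold are all assumptions chosen for illustration.

```python
from collections import Counter


def group_shares(records, attribute):
    """Return the fraction of records that belong to each group."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(shares, min_share):
    """List the groups whose share falls below min_share."""
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical training records: 6 from group A, 3 from B, 1 from C.
records = [{"group": "A"}] * 6 + [{"group": "B"}] * 3 + [{"group": "C"}] * 1

shares = group_shares(records, "group")
flagged = underrepresented(shares, min_share=0.2)
```

Here group C makes up only a tenth of the records and is flagged. A check like this cannot detect groups that are missing from the data entirely, and balanced counts alone do not guarantee fair outcomes, so it is a starting point rather than a full audit.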

6. Societal And Environmental Well-Being

The social aspect of AI has been deeply studied.

For example, social media companies use AI to study the behavior of their users in order to provide them with personalized content. By tailoring the experience to each individual user, they make social media addictive and, for the companies behind it, profitable.

The downside is that this can cause users to be less social, less happy and less tolerant of opposing views and opinions.

Some companies, like Facebook, have acknowledged this and tried to correct the situation. Facebook changed its News Feed algorithm so that it would surface more posts from friends and family and fewer from brands and publishers.

Next is the environmental impact of AI, which is equally important. AI systems that run in the cloud consume a lot of electricity, which gives them a large carbon footprint. In an era when society is moving towards greener alternatives, companies that use AI algorithms in their applications can make the problem go from bad to worse.

One solution is to use lightweight AI models that require very little power and run on renewable energy. Another is to use AI to help improve the environment. For instance, AI systems can be trained to help manage traffic and public transport to reduce congestion and carbon emissions.

7. Accountability

What this means is that there should be legal safeguards to make sure companies keep their AI systems in line with ethical principles.

The U.S., the UK, France and some other countries have created regulations and legislation to hold companies to account for the behavior of their AI models.

End users are often treated as test subjects. But for AI systems to be ethical, these users must have the right to say "no". Users should also be able to sue the companies behind the systems if their products cause harm or damage.

For many reasons, accountability may not be in line with companies' business models and interests. But if we ever want to coexist with intelligent machines that make decisions for us, there needs to be oversight and accountability.

“When unjust adverse impact occurs, accessible mechanisms should be foreseen that ensure adequate redress. Knowing that redress is possible when things go wrong is key to ensure trust,” the EC document states.