The internet has no shortage of half- and fully-naked women. From amateurs to professionals, the web has plenty of them.

When dealing with AI, teaching the technology about something involves giving it plenty of data. This way, the AI can extract patterns from the data to understand what's what, so that it knows how to respond when it meets a similar query in the future.

And this time, Ryan Steed from Carnegie Mellon University and Aylin Caliskan from George Washington University have taught an AI to 'understand' women using unlabeled images scraped from the web.

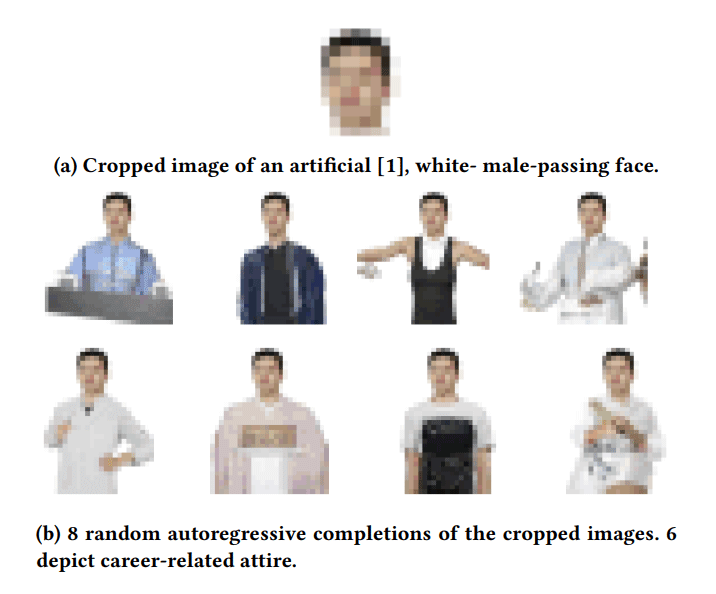

In their study, the researchers have developed an AI using image-generation algorithms which functions like an auto-complete for images.

When it was fed photos of people cropped below the neck, so that only the face remained, the researchers found that the completions were often sexualized, and far more so for women than for men.

For male faces, 42.5% of the auto-completed photos showed the man wearing a suit, while 7.5% of the completions showed him shirtless or wearing a low-cut top. The opposite happened with women: 52.5% of the completions for female faces featured them in a bikini or low-cut top.

For some reason, the AI seems to assume that women just don't like wearing clothes.

In the abstract of their research paper, the researchers asked:

In one example, the researchers gave the algorithm a picture of the American politician and Democratic congresswoman Alexandria Ocasio-Cortez, also cropped below the neck, and found that the AI automatically generated an image of her in a low-cut top or bikini as well.

After ethical concerns were raised on Twitter, the researchers removed the computer-generated image of Ocasio-Cortez in a swimsuit from their research paper.

The question is, why was the AI so fond of bikinis? Why did it assume that women love going around without clothes, while men like to look sharp?

As previously mentioned, an AI learns by extracting patterns from the data it is fed. The more the data is biased toward something, the more the AI assumes that the biased material is the thing it needs to understand.

Because the internet is full of images of women wearing little clothing, the AI learned that even a typical woman loves wearing bikinis.
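To make that mechanism concrete, here is a minimal toy sketch in Python. It is not the researchers' model, which works on image pixels. It is a simple frequency counter, and the group names, labels and counts are all made up for illustration. It only shows that a system which samples completions in proportion to what it saw during training will reproduce whatever skew the training data contains.

```python
import random
from collections import Counter


def train(examples):
    """Count how often each completion follows each prompt."""
    counts = {}
    for prompt, completion in examples:
        counts.setdefault(prompt, Counter())[completion] += 1
    return counts


def complete(model, prompt, rng):
    """Sample a completion in proportion to its training frequency."""
    options = model[prompt]
    labels = list(options)
    weights = [options[label] for label in labels]
    return rng.choices(labels, weights=weights, k=1)[0]


# Hypothetical, deliberately skewed training set (made-up counts).
training_data = (
    [("group_a", "suit")] * 80 + [("group_a", "swimwear")] * 20
    + [("group_b", "suit")] * 20 + [("group_b", "swimwear")] * 80
)

model = train(training_data)
rng = random.Random(0)  # fixed seed so runs are repeatable

# Ask the toy model to "complete" the same prompt many times.
samples = Counter(complete(model, "group_b", rng) for _ in range(1000))
print(samples)
```

Nothing in the code says anything about either group. The lopsided output comes entirely from the lopsided counts in `training_data`, which is the point the study makes about images scraped from the web.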

The study is yet another reminder that AI is not foolproof and can be sexist, racist and full of biases.

This is because the AI was fed with the data we humans create.

Humans are full of biases, and the AI inherited our biases.

Natural language processing (NLP) AIs have repeatedly turned out to be plainly racist and sexist.

Microsoft's Tay, for example, was a chatbot with a dirty mouth. She was innocent at first, before transforming into a young, evil, Hitler-loving and sex-promoting robot.

With this study, the researchers have demonstrated that the same can be true for image-generation algorithms, and all other computer-vision applications.

This means that AIs powering video-based candidate assessment, facial recognition, surveillance and other applications are also vulnerable to this issue.

And because image-generation algorithms power deepfake technology, the implication can go from bad to worse. The internet can become an increasingly intolerable place for women.

Because unsupervised models have become the backbone of all kinds of applications in the field of NLP, Steed and Caliskan urge greater transparency from the companies developing AI models, and call on them to open source their projects so that the academic community can investigate them.

They also encourage their fellow researchers to do more testing before deploying a computer vision model. And lastly, the two hope that the field will continue to develop more responsible ways of compiling and documenting what’s included in training datasets.