While researchers work day and night, most AI buzz has stayed within the field itself and has rarely reached far beyond it.

This changed when OpenAI introduced ChatGPT. The AI chatbot quickly captivated the tech world with its ability to perform a wide range of tasks, including writing poetry, technical papers, novels, and essays.

OpenAI created a new industry, and the hype it brought sent many others scrambling for solutions of their own.

With the popularity of AI chatbots now evident, one firm founded by ex-OpenAI researchers is taking a different approach.

Rather than making its generative AI conversational and engaging, Anthropic is teaching its AI to have a conscience.

Anthropic introduced what it calls 'Claude,' an AI built with what its makers call a "constitution."

According to the announcement, the goal is to create "an AI system that is helpful, honest, and harmless," so that bots are not only powerful but ethical as well.

In this post, we explain what Constitutional AI is, what the values in Claude’s constitution are, and how we chose them: https://t.co/eLtcqG7MuQ

— Anthropic (@AnthropicAI) May 9, 2023

Jared Kaplan, a former OpenAI research consultant who went on to found Anthropic in 2021 with a group of his former co-workers, said that Claude is, in essence, capable of learning right from wrong.

This is possible because its training protocols are "basically reinforcing the behaviors that are more in accord with the constitution, and discourages behaviors that are problematic."

According to Kaplan, his company is trying to find practical engineering solutions to the sometimes fuzzy concerns about the downsides of more powerful AI.

"We're very concerned, but we also try to remain pragmatic," he explained.

This method includes giving Claude a series of guidelines drawn from the United Nations Universal Declaration of Human Rights, along with principles suggested by other AI companies, including Google DeepMind.

The constitution also includes principles adapted from Apple’s rules for app developers, which bar "content that is offensive, insensitive, upsetting, intended to disgust, in exceptionally poor taste, or just plain creepy," among other things.

The constitution includes rules for the chatbot, including "choose the response that most supports and encourages freedom, equality, and a sense of brotherhood"; "choose the response that is most supportive and encouraging of life, liberty, and personal security"; and "choose the response that is most respectful of the right to freedom of thought, conscience, opinion, expression, assembly, and religion."

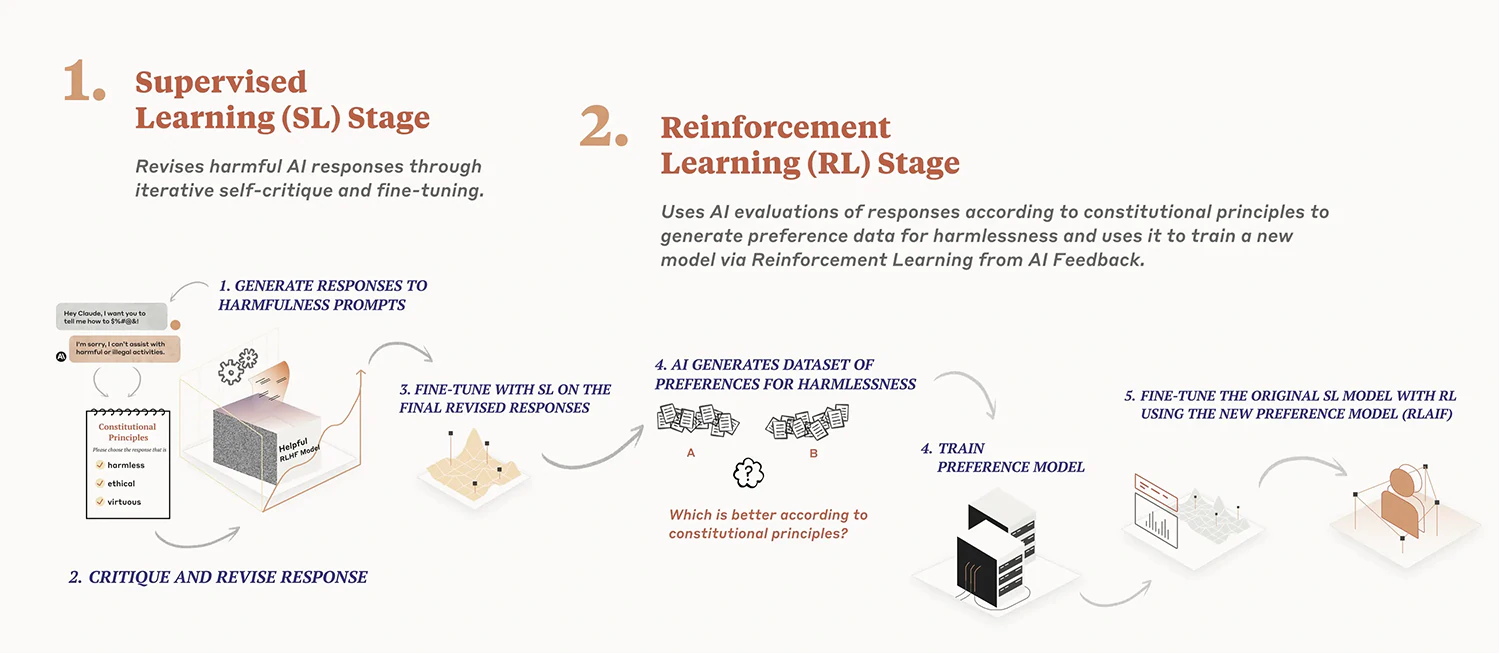

Anthropic’s constitutional approach operates in two phases.

In the first, the model is given a set of principles and examples of answers that do and do not adhere to them. In the second, another AI model is deployed to generate more responses that adhere to the constitution, and these responses are used to train the model in place of human feedback.
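The idea of replacing human feedback with automated feedback can be illustrated with a toy sketch. This is not Anthropic's implementation: `toy_judge`, `FLAGGED_WORDS`, and `build_preference_pairs` are hypothetical names, and the judge is a simple word list standing in for the second AI model. The sketch only shows how a judge guided by a principle could label one response as preferred over another, producing training data without a human rater.

```python
# One principle quoted in the article; a real constitution has many more.
PRINCIPLES = [
    "choose the response that is most supportive and encouraging of "
    "life, liberty, and personal security",
]

# Hypothetical stand-in for the second AI model. A real judge would be a
# language model that reads the principle, not a fixed word list.
FLAGGED_WORDS = {"hurt", "threaten", "steal"}


def toy_judge(principle: str, response: str) -> int:
    """Return a score; higher means closer to the principle (toy heuristic).

    The principle text is accepted but ignored by this toy version.
    """
    words = {w.strip(".,!?").lower() for w in response.split()}
    return -len(words & FLAGGED_WORDS)


def build_preference_pairs(candidates, judge=toy_judge):
    """For each prompt, label the best-scoring response 'chosen' and the worst 'rejected'."""
    pairs = []
    for prompt, responses in candidates.items():
        ranked = sorted(
            responses,
            key=lambda r: sum(judge(p, r) for p in PRINCIPLES),
            reverse=True,
        )
        pairs.append((prompt, ranked[0], ranked[-1]))
    return pairs


candidates = {
    "My neighbor is loud. What should I do?": [
        "Threaten them until they stop.",
        "Talk to them calmly, or ask your landlord to help.",
    ],
}
pairs = build_preference_pairs(candidates)
for prompt, chosen, rejected in pairs:
    print("chosen:  ", chosen)
    print("rejected:", rejected)
```

In this sketch the (prompt, chosen, rejected) triples play the role that human preference labels would otherwise play in training.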

While Anthropic’s approach doesn’t instill an AI with rules it cannot break, Kaplan said that the method is a more effective way to make a system like a chatbot less likely to produce toxic or unwanted output.

He said that the move may be small, but should be a meaningful step toward building smarter AI programs that are less likely to turn against their creators.

Anthropic’s approach comes just as the development of generative AI has produced chatbots that are impressive but significantly flawed.

"The strange thing about contemporary AI with deep learning is that it’s kind of the opposite of the sort of 1950s picture of robots, where these systems are, in some ways, very good at intuition and free association," Kaplan said.

"If anything, they’re weaker on rigid reasoning."

Anthropic says other companies and organizations will be able to give language models a constitution based on a research paper that outlines its approach.

The goal is a method that ensures AI does not go rogue, even as it gets smarter.

Introducing 100K Context Windows! We’ve expanded Claude’s context window to 100,000 tokens of text, corresponding to around 75K words. Submit hundreds of pages of materials for Claude to digest and analyze. Conversations with Claude can go on for hours or days. pic.twitter.com/4WLEp7ou7U

— Anthropic (@AnthropicAI) May 11, 2023

Beyond that, Anthropic also announced that it has significantly expanded the amount of information Claude is able to process.

Claude has gone from a limit of 9,000 tokens to 100,000 tokens, which corresponds to roughly 75,000 words. This means Claude can process an amount of text roughly equivalent to a whole novel.

The company also said that Claude can read and analyze that much text in under a minute.

In one example, Anthropic loaded The Great Gatsby into Claude during testing and modified a single line to say Mr. Carraway was "a software engineer that works on machine learning tooling at Anthropic." Claude was able to spot how the book was modified within 22 seconds.
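The token-to-word conversion can be worked out as a quick calculation. The sketch below assumes the ratio implied by the announcement (100,000 tokens to about 75,000 words) holds on average; actual ratios vary with the text and the tokenizer, and the function names are illustrative only.

```python
# Assumption: the announced ratio of 100,000 tokens to about 75,000 words
# holds on average. Real ratios depend on the text and the tokenizer.
WORDS_PER_TOKEN = 75_000 / 100_000  # 0.75


def tokens_to_words(tokens: int) -> int:
    """Rough word count that fits in a given token budget."""
    return round(tokens * WORDS_PER_TOKEN)


def words_to_tokens(words: int) -> int:
    """Rough token count needed for a given word count."""
    return round(words / WORDS_PER_TOKEN)


old_limit_words = tokens_to_words(9_000)
new_limit_words = tokens_to_words(100_000)
print(f"Old limit: about {old_limit_words:,} words")
print(f"New limit: about {new_limit_words:,} words")
```

Under that assumption, the earlier 9,000-token limit works out to about 6,750 words, compared with about 75,000 words for the expanded window.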

Generative AIs like Claude are still limited by the number of "tokens" they can process.

Tokens in generative AIs are like pieces of words. To process a user's query, a generative AI first has to break the text down into these pieces. Sentences are not simply chopped into words from start to end, because the method also accounts for spaces and other characters.
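To make the idea concrete, here is a toy tokenizer. It is not the tokenizer used by Claude, ChatGPT, or any real chatbot, and `toy_tokenize` is a hypothetical name; real tokenizers also split long or rare words into smaller sub-word pieces. The sketch only shows how spaces and punctuation can become part of the pieces rather than being thrown away.

```python
import re

# Toy pattern, NOT a real chatbot tokenizer. Each piece is one of:
#   - an optional leading space followed by a run of letters or digits
#   - an optional leading space followed by one punctuation character
#   - a single leftover whitespace character
PIECE = re.compile(r" ?\w+| ?[^\w\s]|\s")


def toy_tokenize(text: str) -> list[str]:
    """Split text into pieces that keep spaces and punctuation."""
    return PIECE.findall(text)


text = "Claude reads fast, really!"
pieces = toy_tokenize(text)
print(pieces)
print(len(pieces), "pieces")
```

Because every character ends up in exactly one piece, joining the pieces back together reproduces the original text.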

At this time, OpenAI's standard GPT-4 model is capable of processing 'only' 8,000 tokens, while an extended version can process 32,000 tokens.

Meanwhile, the free, publicly available ChatGPT chatbot has a limit of around 4,000 tokens.

This means Anthropic's Claude leaves ChatGPT in the dust in terms of the number of tokens it can process.

Following the announcement, Claude's expanded capability is available to Anthropic's business partners that use its API. Anthropic said that the capability should help businesses quickly digest and summarize lengthy financial statements and research papers, assess pieces of legislation, identify risks and arguments across legal documents, and comb through dense developer documentation, among other possible tasks.
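The size of the gap can be put in numbers with a short calculation. The limits below are the figures reported in this article; the labels and the `ratio_to_claude` helper are illustrative names, not official product identifiers.

```python
# Token limits as reported in this article. The labels are illustrative.
LIMITS = {
    "Claude (expanded)": 100_000,
    "GPT-4 (extended)": 32_000,
    "GPT-4 (standard)": 8_000,
    "ChatGPT (free)": 4_000,
}


def ratio_to_claude(name: str) -> float:
    """How many times larger Claude's expanded window is than the named limit."""
    return LIMITS["Claude (expanded)"] / LIMITS[name]


for name in LIMITS:
    if name != "Claude (expanded)":
        print(f"{name}: Claude's window is {ratio_to_claude(name):g}x larger")
```

By these figures, Claude's expanded window is about 3 times the extended GPT-4 limit, 12.5 times the standard GPT-4 limit, and 25 times the free ChatGPT limit.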