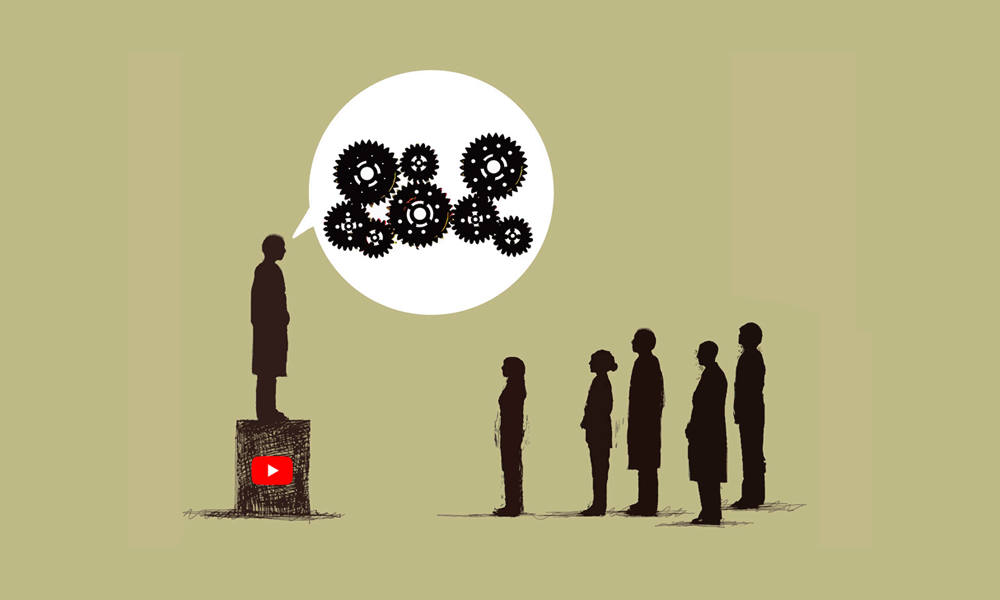

Is the Earth flat, or not? Opinions can differ from one person to the next, but spreading conspiracy theories is no longer welcome on YouTube.

The popular video-streaming and sharing platform announced that it is changing its algorithms so that conspiracy theory videos no longer show up as recommendations.

The platform further stated that these kinds of videos come close to violating its community guidelines because they are inaccurate, either historically or medically.

Examples of videos that come close to violating YouTube’s community guidelines, and that will therefore no longer be recommended, include flat Earth theories and miracle remedies for various ailments that have no scientific evidence to back up their claims.

With the change, YouTube's algorithms will no longer recommend videos that contain these kinds of inaccuracies.

According to YouTube in its blog post:

"To that end, we’ll begin reducing recommendations of borderline content and content that could misinform users in harmful ways—such as videos promoting a phony miracle cure for a serious illness, claiming the earth is flat, or making blatantly false claims about historic events like 9/11."

Yes, people have their own thoughts, and they are free to share their opinions with the public. But YouTube feels that videos promoting conspiracies can mislead people. These videos can also spread hatred and fake news.

Because it sees these videos as offering no substantial or helpful information to anyone, and because the company is continuing its battle against misinformation, YouTube is starting to remove them from its recommendations.

The algorithms are also being fine-tuned to avoid recommending repetitive content.

This will be beneficial because users will only need to watch one version of a video. The example used in the blog post was recipe videos, where users are less likely to need multiple versions of the same information.

With these videos barred from recommendations, YouTube estimated that no more than 1 percent of its content is impacted, believing "that limiting the recommendation of these types of videos will mean a better experience for the YouTube community."

The content will still be there. However, the site won’t be recommending it to users who watch similar videos.

"We think this change strikes a balance between maintaining a platform for free speech and living up to our responsibility to users," continued YouTube.

The algorithms rely on a combination of machine learning and real people. YouTube works with human evaluators and experts to help train the machine learning systems that generate recommendations.

These evaluators are trained using public guidelines and provide critical input on the quality of a video.

The algorithm changes will be implemented gradually. They won’t have a very noticeable impact, but will certainly help YouTube filter content it thinks isn't appropriate.