YouTube regards spam and scams as inappropriate content, and it removes them as it finds them.

In Google's ongoing battle against inappropriate content on its video platform, the company said that in Q3 2018 it removed 50.2 million videos as a result of channel-level suspensions and violations of its Community Guidelines.

As a result, both large and small YouTube creators may see their subscriber counts decrease.

"You may see a noticeable decrease in your subscriber count as we remove spam subscriptions from your channel," the company wrote in a post.

YouTube said the move is part of routine maintenance the company performs, and that removing spam accounts helps keep YouTube "a fair playing field" for creators.

"We regularly verify the legitimacy of accounts and actions on your YouTube channel," the post reads. "As part of these regular checks, we identified and will remove a number of subscribers that were in fact spam from our systems."

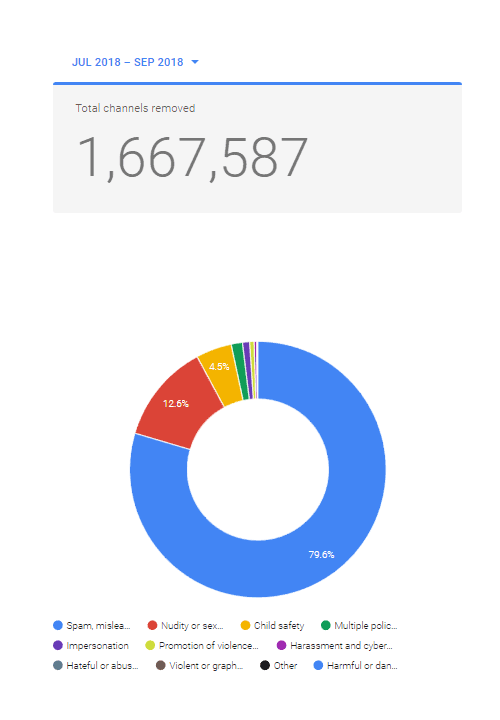

YouTube also said it removed more than 1.6 million channels in the sweep, 79.6 percent of them for spam or scams.

Of the removed videos, 10.2 percent were taken down for child safety reasons.

YouTube also said it had removed more than 224 million comments.

To enforce its Community Guidelines, the video streaming site said it relies on "a combination of people and technology" to flag inappropriate content.

"We also filter comments which we have high confidence are spam into a ‘Likely spam’ folder that creators can review and approve if they choose," said YouTube.

The blog post specifically stated that spam accounts are usually used to make a channel look more popular than it actually is.

Popular channels may notice a decrease that affects their standing against competitors, but smaller creators face the biggest risk, since they could lose the ability to monetize their videos.

"Channels that had a high percentage of spam and fall below 1,000 subscribers will no longer meet the minimum requirement for YPP [YouTube Partner Program] and will be removed from the program," YouTube continued.

"They are encouraged to reapply once they’ve rebuilt their subscribers organically."

YouTube will notify affected creators by showing them a banner.

"We will be unwavering in our fight against bad actors on our platform and our efforts to remove egregious content before it is viewed," the company's post continued. "We know there is more work to do and we are continuing to invest in people and technology to remove violative content quickly."

In this effort, YouTube used a combination of machine learning technology and human reviewers to remove inappropriate videos before they could spread on the platform: 74.5 percent of the videos flagged by its automated systems between July and September were removed before they received any views.