Artificial Intelligence (AI) was having a good year in 2017, with many researchers racing to advance the technology.

As machine learning systems become more capable, researchers are discovering that they aren't that smart after all. One reason is that they lack human common sense.

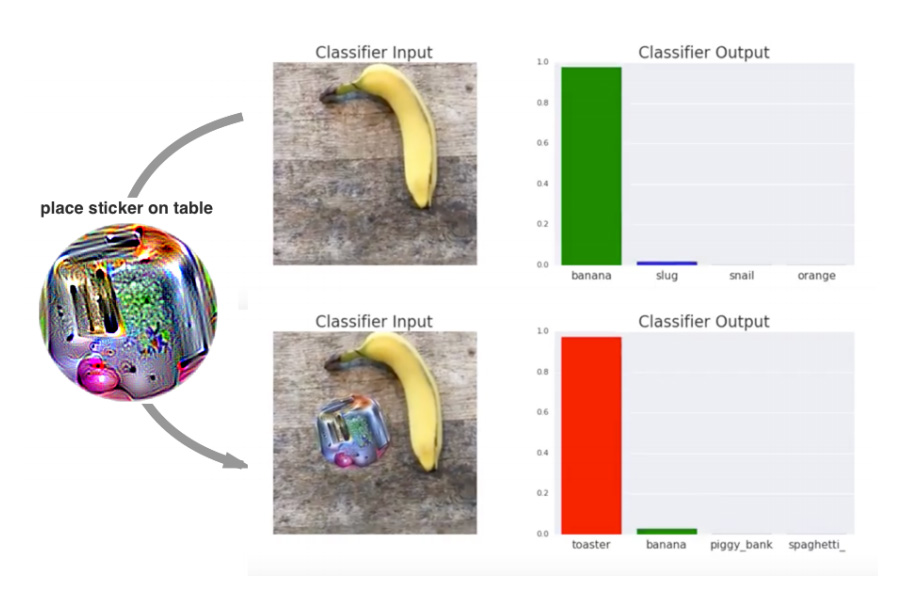

Using specially designed, printed stickers, the researchers were able to trick an image recognition system into seeing nothing but the stickers.

The reason is that computer vision is complex.

The research was done by a group of researchers at Google. They accomplished it by training an adversary system to create small circles full of features that distract the target system. After trying out many configurations of colors, shapes and sizes, they found sets of images that attract the attention of the image recognition system.
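The idea of training a patch can be sketched in a few lines. This is only a toy illustration, not the researchers' actual method: a hypothetical linear scorer with one weight per pixel stands in for the real image recognition system, and names such as `make_weights`, `train_patch` and `mean_score` are invented for this example. The patch is nudged, step by step, in the direction that raises the score of the attacker's target class, averaged over every place the patch could be pasted.

```python
import random

SIZE = 8    # toy images are SIZE x SIZE grayscale, pixel values in [0, 1]
PATCH = 3   # the patch is PATCH x PATCH pixels

def make_weights(seed=0):
    """Hypothetical stand-in for a trained model: one weight per pixel."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(SIZE)] for _ in range(SIZE)]

def target_score(weights, image):
    """Higher score = the toy model leans toward the attacker's target class."""
    return sum(weights[r][c] * image[r][c]
               for r in range(SIZE) for c in range(SIZE))

def positions():
    """Every top-left corner where the patch fits inside the image."""
    span = range(SIZE - PATCH + 1)
    return [(top, left) for top in span for left in span]

def paste(image, patch, top, left):
    """Return a copy of the image with the patch pasted at (top, left)."""
    out = [row[:] for row in image]
    for r in range(PATCH):
        for c in range(PATCH):
            out[top + r][left + c] = patch[r][c]
    return out

def patch_gradient(weights):
    """Gradient of the target score w.r.t. each patch pixel, averaged over placements."""
    pos = positions()
    return [[sum(weights[t + r][l + c] for t, l in pos) / len(pos)
             for c in range(PATCH)] for r in range(PATCH)]

def train_patch(weights, steps=50, lr=0.1):
    """Gradient ascent on the patch pixels, clipped to the valid range [0, 1]."""
    patch = [[0.5] * PATCH for _ in range(PATCH)]
    grad = patch_gradient(weights)  # constant here because the toy model is linear
    for _ in range(steps):
        patch = [[min(1.0, max(0.0, patch[r][c] + lr * grad[r][c]))
                  for c in range(PATCH)] for r in range(PATCH)]
    return patch

def mean_score(weights, image, patch):
    """Average target score over all placements of the patch on one image."""
    pos = positions()
    return sum(target_score(weights, paste(image, patch, t, l))
               for t, l in pos) / len(pos)

weights = make_weights()
gray_image = [[0.5] * SIZE for _ in range(SIZE)]
gray_patch = [[0.5] * PATCH for _ in range(PATCH)]
trained_patch = train_patch(weights)
print("plain gray patch:", mean_score(weights, gray_image, gray_patch))
print("trained patch:   ", mean_score(weights, gray_image, trained_patch))
```

A real attack would replace the linear scorer with a deep network and compute the gradient by backpropagation, but the loop has the same shape: paste, score, adjust the patch, repeat.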

They come in the form of sticker-like 'psychedelic' images with specific curves and colors.

These stickers deliberately shift the AI's focus. An image recognition system that sees the combination of colors and curves will report something other than the object that, to humans, is most prominent.

For computer vision to work, computers rely on cognitive shortcuts. These shortcuts are difficult for humans to see, but computers need them to find patterns.

One of these shortcuts is to not give every pixel the same importance.

For example, take a picture of a landscape with a bit of sky at the top, grassland at the bottom and a house in the middle. Computer vision needs to separate all that information to understand what is in the picture. The basic rule is to make things clear enough that the computer can find the objects it needs to see. In this case, they are the sky, the grass and the house.
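One rough way to see that pixels do not all carry the same weight is an occlusion test: blank out one pixel at a time and measure how much the model's score moves. The sketch below assumes a hypothetical 4x4 linear scorer whose weights are concentrated in the middle of the frame (where the house would be). The `WEIGHTS` table and the function names are made up for illustration and do not come from any real vision system.

```python
def score(weights, image):
    """Toy model output: a weighted sum of pixel values."""
    return sum(w * x
               for w_row, x_row in zip(weights, image)
               for w, x in zip(w_row, x_row))

def occlusion_importance(weights, image):
    """Importance of a pixel = how much the score changes when it is blanked out."""
    base = score(weights, image)
    importance = {}
    for r in range(len(image)):
        for c in range(len(image[0])):
            occluded = [row[:] for row in image]
            occluded[r][c] = 0.0
            importance[(r, c)] = abs(base - score(weights, occluded))
    return importance

# Hypothetical weights: the model cares far more about the center than the border.
WEIGHTS = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 1.0, 1.0, 0.1],
    [0.1, 1.0, 1.0, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
IMAGE = [[1.0] * 4 for _ in range(4)]

importance = occlusion_importance(WEIGHTS, IMAGE)
top4 = sorted(importance, key=importance.get, reverse=True)[:4]
print(sorted(top4))  # [(1, 1), (1, 2), (2, 1), (2, 2)]
```

The four center pixels dominate the score. Anything that can hijack the regions a model weights most heavily gets an outsized say in the final answer.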

Here, the researchers exploit that shortcut using the sticker-like images, making the computer ignore the thing it really needs to identify. The stickers act like an object that pulls the AI's focus, making it hallucinate things.

This is done on a system-specific, not image-specific, basis. This means the resulting scrambler patch will generally work no matter what the image recognition system is looking at.
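The difference between system-specific and image-specific can be shown with a small sketch. It again assumes a hypothetical linear scorer rather than a real network, and `build_patch` and `apply_patch` are illustrative names. The patch is built only from the model's weights, without looking at any image, and the same patch is then pasted onto many unrelated random images.

```python
import random

SIZE, PATCH = 8, 3  # 8x8 toy images, 3x3 patch pasted in the top-left corner

def score(weights, image):
    """Toy model output: higher means 'more like the attacker's target class'."""
    return sum(weights[r][c] * image[r][c]
               for r in range(SIZE) for c in range(SIZE))

def build_patch(weights):
    """Built from the model alone: bright where a weight helps the target, dark elsewhere."""
    return [[1.0 if weights[r][c] > 0 else 0.0 for c in range(PATCH)]
            for r in range(PATCH)]

def apply_patch(image, patch):
    """Return a copy of the image with the patch covering its top-left corner."""
    out = [row[:] for row in image]
    for r in range(PATCH):
        out[r][:PATCH] = patch[r]
    return out

rng = random.Random(1)
weights = [[rng.uniform(-1.0, 1.0) for _ in range(SIZE)] for _ in range(SIZE)]
patch = build_patch(weights)  # no image was consulted to make this

# Twenty unrelated random images, all attacked with the very same patch.
images = [[[rng.random() for _ in range(SIZE)] for _ in range(SIZE)]
          for _ in range(20)]
gains = [score(weights, apply_patch(img, patch)) - score(weights, img)
         for img in images]
print("smallest gain:", min(gains))
print("largest gain: ", max(gains))
```

Because the patch depends only on the system being attacked, it pushes the score in the attacker's direction on every image. Against a different model with different weights, a new patch would have to be built.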