With the many ways researchers want to mimic AIs with the human brain, they also found the many ways the two are different.

Humans can easily learn and recognize objects, to then recall them flawlessly in the future. All they need is just a few illustrations and examples. Computers on the other hand, need a lot more than just plain examples, as they require to 'feed' on huge data sets to just describe what's what.

This is why in many cases, image recognition AIs can still be confused when trying to recall things. The reason is because AI isn't that good at understanding what it sees, unlike humans who can use contextual clues to identify things.

In an attempt to create better AIs in the future, researchers create 'ImageNet-A'.

ImageNet-A is just a small subset of ImageNet, an industry-standard database containing more than 14 million hand-labeled images in over 20,000 categories.

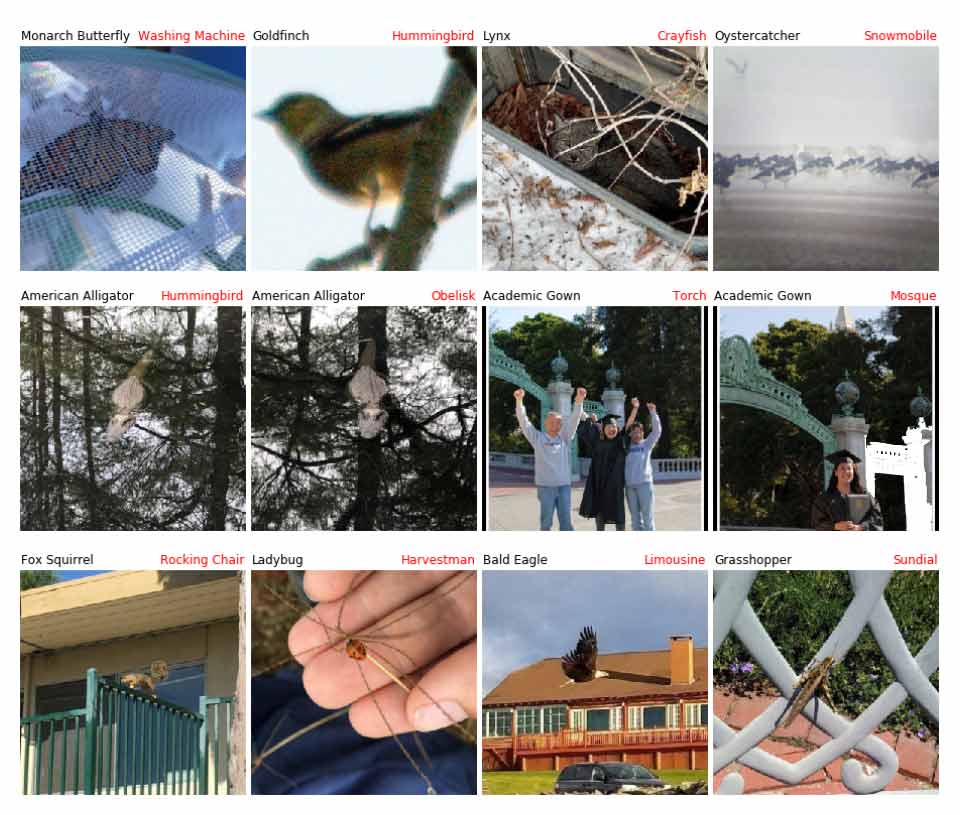

But what makes it different is that, ImageNet-A is full of images natural objects, made to fool fully-trained AI models.

What needs to be highlighted here is that, the images in ImageNet-A aren't manipulated. Meaning that they don't have any invisible additions to trigger any planned 'adversarial attack'.

This is an important distinction because researchers in the past have proven that modified images can fool AIs too. Adding noise or other invisible or near-invisible manipulations, for example, can fool most AIs.

All images in ImageNet-A showcase 'natural adversarial examples' (PDF), which are real-world, unmodified, and naturally occurring examples that cause AI classifier's accuracy to significantly degrade.

And here, the researchers show that the images can confuse computer vision models, for about 98% at the time.

ImageNet-A has images that expose machine learning technology to it's biggest weakness: using classifiers that are over-reliant on color, texture, and background cues.

According to the research team, which was led by UC Berkeley PhD student Dan Hendrycks, it’s much harder to solve naturally occurring adversarial examples than it is the human-manipulated variety.

Read: How "Psychedelic Stickers" Can Make AI Experience Delusional Hallucinations

ImageNet is the created by a former Google AI specialist Fei Fei Li.

She started working on the project in 2006, and finished it on 2011.. At first, the best score the teams achieved was about 75% using ImageNet data set. Improving the data, the teams managed to achieve higher than 95 percent accuracy in 2017.

But then, when adversarial attack methods were discovered, researchers realize that even the smartest image recognition AIs can be fooled quite easily.

By just adding some cues that are invisible to humans but very visible to computer vision, people can easily trick AIs into see what isn't present. An example would be when a Tesla's Autopilot couldn't differentiate a white trailer from a cloud, resulting in the first fatal crash involving an autonomous car.

This prompted researchers to realize that a confused AIs can kill humans.

After realizing that AIs can be confused when seeing untouched nature photos, this sparked the idea to create ImageNet-A.

The ImageNet-A database here provides a data set for researchers to work with to improve AIs' image recognition systems.

But still, the data set is not the ultimate solution. Researchers are still required to teach computers to be more accurate when they are less certain.

For example, if an image recognition confuses between "cat" and "not cat", researchers need to come up with a way to make computers explain why they’re confused.

ImageNet-A is a step up to previous attempts for creating data sets dedicated to train AIs. But before computers can be taught to explain their thoughts, the roads for creating human-like AIs are still a long way to go.

Further reading: The Two Common Security Threats Unique To Artificial Intelligence