As technology advances, computer hardware has become smaller and more powerful. But the pursuit of low latency demands more than that.

Artificial intelligence (AI), for example, needs fast computing, especially as the world prepares to welcome armies of Internet of Things (IoT) devices in the coming years.

This is where Nvidia comes in, launching its EGX platform to bring real-time AI to the edge of the network.

The EGX is a "hyperscale cloud-in-a-box" that combines the full range of Nvidia AI computing technologies with Mellanox security, networking, and storage technologies.

It is meant to provide accelerated computing at the edge for low-latency transactions, that is, with minimal delay between interactions.

What this means is that Nvidia wants AI computing to happen where sensors collect data, before that data is sent to cloud-connected data centers. This should enable real-time reactions to data pouring in from sensors at 5G base stations, warehouses, retail stores, factories and beyond.

Nvidia showed the platform at the Computex event in Taiwan.

According to Nvidia's senior director of enterprise and edge computing, Justin Boitano:

But moving computation closer to the sensors means an exponential increase in the amount of raw data that has to be analyzed at the edge.

"We will soon hit a crossover point where there is more computing power at the edge than in data centers," Boitano continued.

This is why, to support the move, Nvidia and its customers want to offer a new class of servers for instant AI on real-time streaming data in markets such as telecommunications, medicine, manufacturing, retail, and transportation.

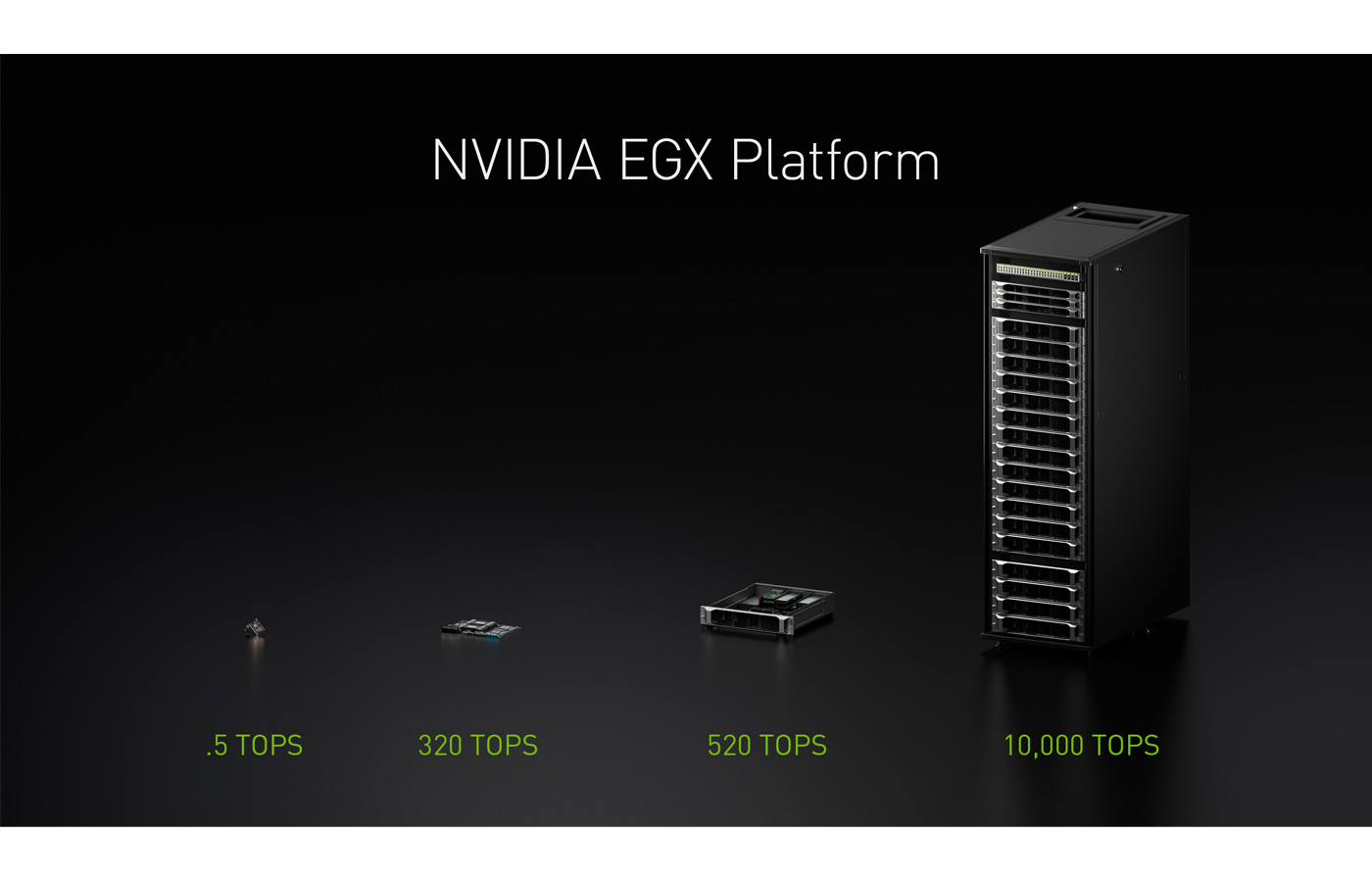

According to Nvidia, the EGX is scalable.

It starts with the tiny Nvidia Jetson Nano, which can deliver one-half trillion operations per second (0.5 TOPS) in a few watts for tasks such as image recognition.

At the other end of the scale, there is a full rack of Nvidia T4 servers, capable of delivering more than 10,000 TOPS for real-time speech recognition and other real-time AI tasks.
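To put the two ends of that range in perspective, the quoted figures can be compared directly. The short sketch below uses only the vendor-stated numbers from this article (0.5 TOPS and "more than 10,000 TOPS", treated here as a lower bound); the function name `scale_factor` is made up for illustration and is not part of any Nvidia tool.

```python
# Figures quoted in the article (vendor-stated, not measured here).
JETSON_NANO_TOPS = 0.5   # one-half trillion operations per second
T4_RACK_TOPS = 10_000    # "more than 10,000 TOPS", treated as a lower bound


def scale_factor(low_tops: float, high_tops: float) -> float:
    """Return how many times larger the high-end figure is than the low-end one."""
    if low_tops <= 0:
        raise ValueError("low_tops must be positive")
    return high_tops / low_tops


ratio = scale_factor(JETSON_NANO_TOPS, T4_RACK_TOPS)
print(f"A T4 rack offers at least {ratio:,.0f}x the throughput of a Jetson Nano")
```

On those numbers, the top of the EGX range delivers at least 20,000 times the raw throughput of the entry-level device.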

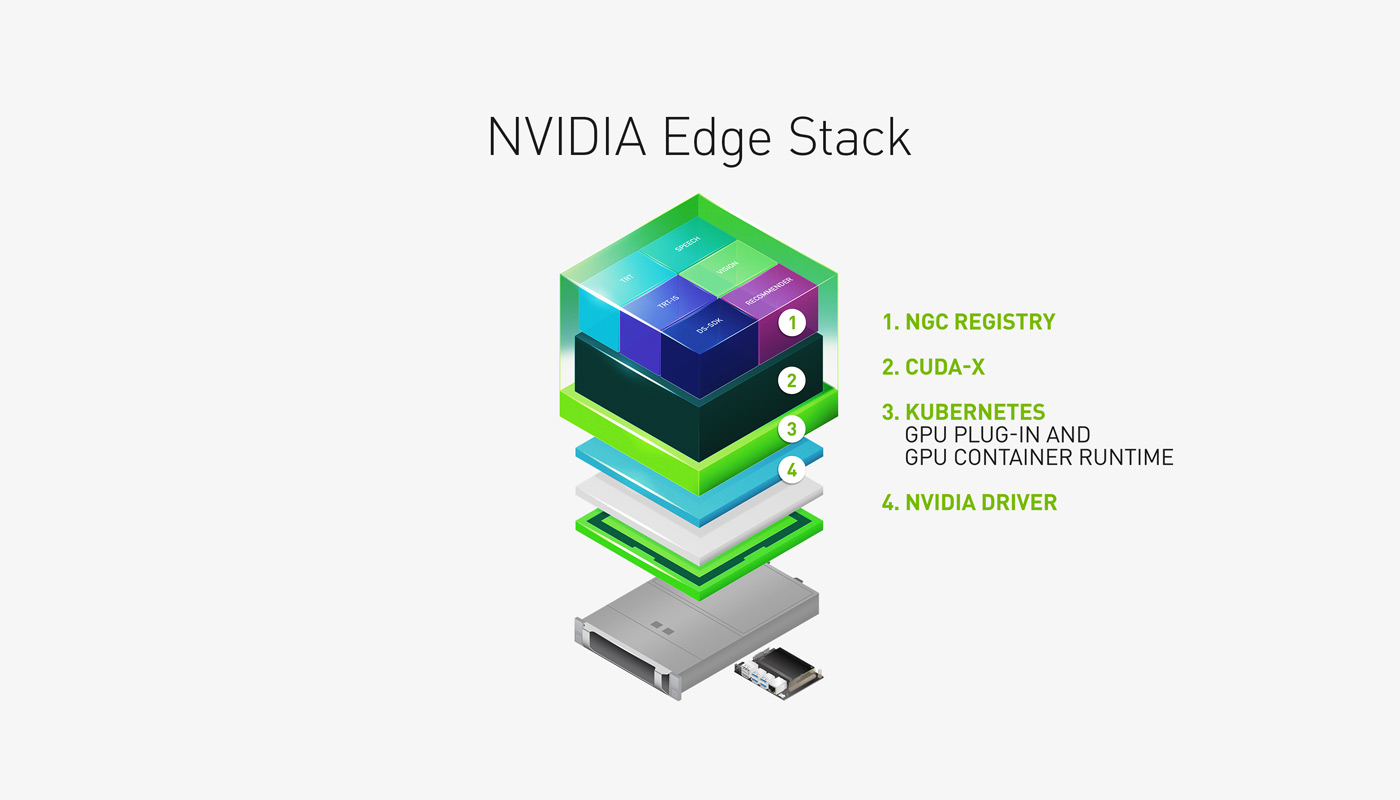

Nvidia EGX is built for enterprises and industry, and is optimized for running enterprise-grade Kubernetes container platforms such as Red Hat OpenShift. These container platforms have been tested with Nvidia Cloud Stack, an optimized software suite that simplifies the setup, provisioning and management of GPU-accelerated infrastructure for running TensorRT, TensorRT Inference Server, and the Nvidia GPU Cloud (NGC) registry.

By combining Nvidia EGX, Red Hat OpenShift, and Nvidia Cloud Stack, enterprises can easily stand up a state-of-the-art edge-to-cloud infrastructure, Nvidia said.

To enable hybrid cloud computing, Nvidia EGX-powered systems and devices can connect to cloud IoT services, ranging from AWS IoT Greengrass to Microsoft Azure IoT Edge.

“Azure IoT Edge helps customers deploy cloud service to their IoT devices quickly and securely,” said Sam George, director of Azure IoT Edge, in a statement. “We look forward to supporting NVIDIA’s EGX edge platform on Azure IoT Edge devices so that customers can deploy AI workloads targeting EGX-compatible hardware.”

AI is one of the most heavily developed and studied fields. To an extent, advances in AI are outpacing those in CPUs.

Nvidia created EGX to meet the emerging needs of AI applications to serve what will eventually be trillions of devices streaming continuous raw sensor data.

With vast amounts of data waiting to be processed, Nvidia is essentially readying itself for data volumes that are orders of magnitude larger.

The platform is designed for emerging, high-throughput AI applications at the edge, where the data originates, to achieve instant or guaranteed response times while reducing the bandwidth needed to send data to the cloud.

With its edge servers to be distributed throughout the world, Nvidia aims to process data from these sensors in real time.

Early adopters include more than 40 industry-leading companies and organizations, such as BMW Group Logistics, Foxconn, GE Healthcare and Seagate Technology.