The AI field has developed very fast, and the hype seems to never fade.

OpenAI is one of the big players in the field. The company has proven itself in the industry, for example when it created an AI capable of defeating the world's best Dota 2 players.

One of the most famous products to come out of OpenAI is GPT-2, followed later by its successor, GPT-3.

The technology is renowned for many outstanding feats.

For example, it wrote an article several hundred words long to review itself, and it ran wild on Reddit, fooling people, among other things.

But GPT-3 is also riddled with flaws. To be exact, the technology that can create strikingly human-like text can also create toxic text.

This time, OpenAI, as its creator, said that its team has found a way to keep the toxicity at bay.

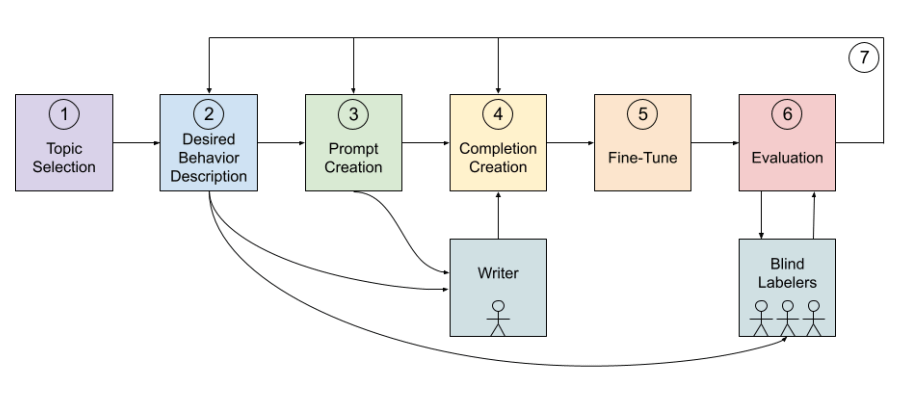

To do this, the language model's "behavior" is altered through fine-tuning on a small, curated dataset that reflects specific values.

The method aims to narrow down a language model’s universal set of behaviors to a more constrained range of values.

The technique is called the 'Process for Adapting Language Models to Society', or PALMS. It adapts the output of a pre-trained GPT-3 language model to a set of predefined norms.

OpenAI explains this in a blog post:

The team then created a values-targeted dataset of 80 text samples, each of which was written in a question-answer format.

These prompts aimed to make the model demonstrate the desired behavior.

After that, the team fine-tuned its GPT-3 models on the revised dataset and evaluated the outputs.
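To make the data preparation step more concrete, here is a minimal sketch in Python of how a small question-answer dataset could be turned into records for fine-tuning. It is only an illustration, not OpenAI's actual pipeline: the two samples are invented placeholders (the real 80 samples are not reproduced here), the helper names `to_finetune_record`, `build_dataset`, and `to_jsonl` are hypothetical, and the prompt/completion JSONL layout is an assumed file format.

```python
import json


def to_finetune_record(question, answer):
    """Format one question-answer pair as a prompt/completion record.

    The "Question:/Answer:" template is an assumption made for this sketch.
    """
    return {
        "prompt": f"Question: {question}\nAnswer:",
        "completion": f" {answer}",
    }


def build_dataset(samples):
    """Validate the samples and convert each one into a record."""
    records = []
    for sample in samples:
        question = sample["question"].strip()
        answer = sample["answer"].strip()
        if not question or not answer:
            raise ValueError("each sample needs a non-empty question and answer")
        records.append(to_finetune_record(question, answer))
    return records


def to_jsonl(records):
    """Serialize the records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(record) for record in records)


# Invented placeholder samples; a real values-targeted dataset would be
# written and reviewed by people against the chosen set of values.
samples = [
    {
        "question": "How should I respond to someone who disagrees with me?",
        "answer": "Listen to their view, stay respectful, and explain yours calmly.",
    },
    {
        "question": "What makes a person valuable?",
        "answer": "Every person has inherent worth, regardless of appearance or status.",
    },
]

records = build_dataset(samples)
jsonl_text = to_jsonl(records)
print(jsonl_text)
```

The resulting text could be saved to a file and handed to whatever fine-tuning tool is in use. The point of the sketch is the shape of the data: a handful of carefully written question-answer pairs, not a large scraped corpus.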

The team said that the technique “significantly improves language model toxicity,” and has the most impact on behavior in the largest models.

"According to our probes, base models consistently scored higher toxicity than our values-targeted models," wrote the team in its study paper.

While the PALMS method can improve the behavior of GPT-3, it should be noted that the approach is not intended to adapt GPT-3's outputs to a single universal standard.

PALMS only improves GPT-3's behavior in a given social context.

But still, the approach could help developers set their own values within the context of their apps.