We listen to music whenever we can, partly to match our current mood.

For example, when we're happy, we tend to play happy songs to prolong the euphoric moment; when we're sad, we may listen to heartbreak songs that reflect our pain. However, as listeners, we can't always gauge a song's mood based on the lyrics alone.

And when we have a massive playlist on our hands, picking one song for the mood can be difficult.

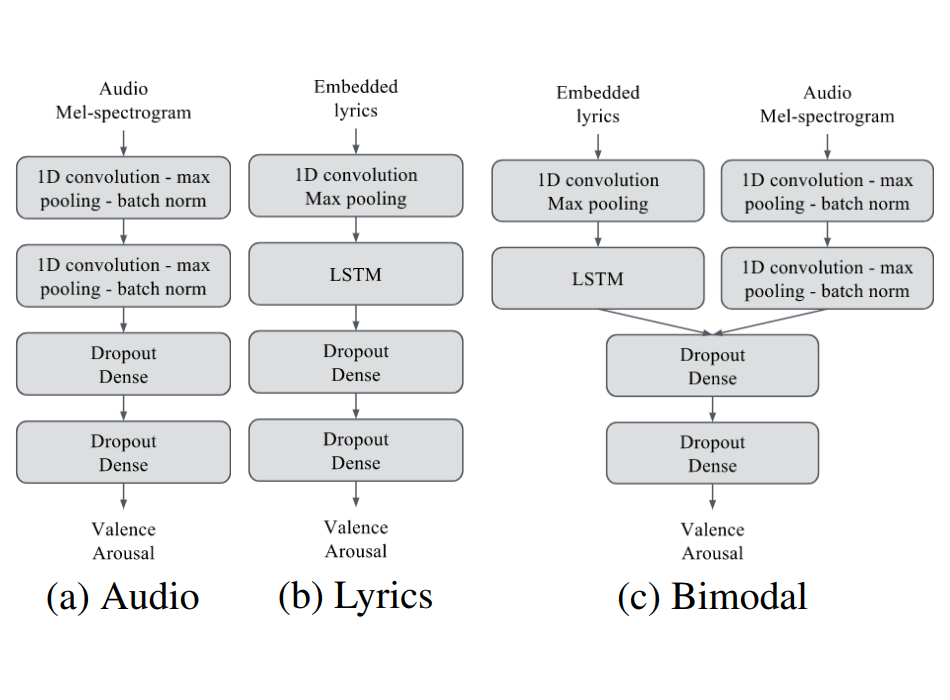

To address this, researchers at Deezer have developed an AI capable of associating songs with moods and intensities. Before passing judgment, the AI considers the song as a whole, using a deep learning method that weighs a wide variety of data rather than a single factor like the lyrics.

The work is described in a newly published paper on arXiv.org titled "Music Mood Detection Based on Audio and Lyrics with Deep Neural Net."

The researchers first fed the AI system raw audio signals, along with models that reconstruct the linguistic contexts of words. They then taught it to determine the mood of a song using the Million Song Dataset (MSD), a collection of metadata for over 1 million contemporary songs.

In particular, they used the Last.fm dataset, which labels tracks with tags drawn from a pool of over 500,000 unique tags.

Many of these tags are mood-related. To train the system, over 14,000 English words from these tags were each given two ratings: one for how negative or positive the word is, and one for how calm or energetic it is.
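As a rough illustration of how word ratings could become a label for a track, the sketch below averages the ratings of a track's mood tags. The lexicon `WORD_RATINGS`, its 1-to-9 scale, its values, and the function `track_mood` are all hypothetical, and simple averaging is an assumption rather than the researchers' confirmed procedure.

```python
from statistics import mean

# Hypothetical word ratings as (valence, arousal) pairs on an illustrative
# 1-9 scale. These numbers are made up for the example, not taken from the paper.
WORD_RATINGS = {
    "happy": (8.0, 6.0),
    "sad": (2.0, 3.0),
    "calm": (7.0, 2.0),
    "angry": (2.0, 8.0),
}


def track_mood(tags):
    """Average the ratings of a track's rated tags; return None if none is rated."""
    rated = [WORD_RATINGS[t.lower()] for t in tags if t.lower() in WORD_RATINGS]
    if not rated:
        return None
    valence = mean(v for v, _ in rated)  # how negative or positive
    arousal = mean(a for _, a in rated)  # how calm or energetic
    return valence, arousal
```

Tags without a rating, such as a genre name, are simply ignored, so a track tagged only with unrated words gets no mood label at all.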

The MSD contains only metadata for songs, not the songs themselves, so the researchers had to match this information against Deezer's own catalogue using identifiers such as song titles, artist names, and album titles.
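Matching two catalogues on titles and names usually requires some normalization first, since spelling, case, and accents differ between sources. The following is a minimal sketch of that idea, assuming exact matching on normalized fields; the row layout (`id`, `title`, `artist`, `album`) and the function names are hypothetical, and the researchers' actual matching procedure is not described here.

```python
import unicodedata


def normalize(text):
    """Lowercase, strip accents, and collapse whitespace for tolerant comparison."""
    decomposed = unicodedata.normalize("NFKD", text)
    no_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return " ".join(no_accents.lower().split())


def match_key(title, artist, album):
    """Build a comparison key from the three identifying fields."""
    return (normalize(title), normalize(artist), normalize(album))


def match_tracks(msd_rows, catalogue_rows):
    """Return {msd_id: catalogue_id} for rows whose normalized keys are equal."""
    index = {
        match_key(r["title"], r["artist"], r["album"]): r["id"]
        for r in catalogue_rows
    }
    matches = {}
    for row in msd_rows:
        key = match_key(row["title"], row["artist"], row["album"])
        if key in index:
            matches[row["id"]] = index[key]
    return matches
```

Tracks that fail to match are dropped, which is one reason a matched dataset ends up far smaller than the metadata collection it started from.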

About 60 percent of the resulting dataset of 18,644 tracks was used to train the AI, with the rest used to validate and test the system.
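A split like this can be sketched in a few lines. The example below assumes the remaining 40 percent is divided evenly between validation and testing, which the text above does not state; `split_dataset`, its parameters, and the fixed seed are illustrative choices.

```python
import random


def split_dataset(track_ids, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle track IDs and cut them into train, validation, and test lists."""
    ids = list(track_ids)
    random.Random(seed).shuffle(ids)  # fixed seed keeps the split reproducible
    n_train = int(len(ids) * train_frac)
    n_val = int(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]  # whatever remains after train and validation
    return train, val, test


train, val, test = split_dataset(range(1000))
```

Keeping the three sets disjoint matters: the validation set guides tuning, while the test set is held back so the final score reflects tracks the model has never seen.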

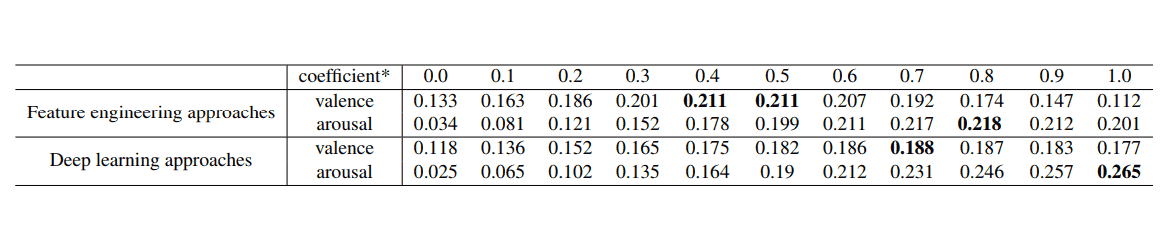

Initial results showed that, when detecting whether a song was positive or negative, the AI performed about the same as previous, "classical approaches on arousal detection" that don't use AI. However, the system detects how calm or energetic a song is far better than other methods.

"It seems that this gain of performance is the result of the capacity of our model to unveil and use mid-level correlations between audio and lyrics, particularly when it comes to predicting valence," the researchers wrote in the paper.

The system is far from perfect. The researchers point out that a database of labels indicating how ambiguous a track's mood is could make their results more accurate, as "in some cases, there can be significant variability between listeners" (people might not always agree on whether a song is positive or negative, for example).

A "database with synchronized lyrics and audio would be of great help to go further," said the researchers.

The association between audio and lyrics helped the system gauge the energy of a given piece more effectively than past techniques. This could help distinguish a soothing piece from an upbeat dance track, for example.

Ultimately, the researchers believe this work can pave the way for AI that better understands how music, lyrics, and mood correlate. It also raises the possibility of using deep learning models to sort through large volumes of unlabeled data, classifying tracks not just by basic metadata like the artist's name or genre but also by something more nuanced, like mood.