A great deal of research has gone into finding better peripherals.

But despite all the effort and money spent on development, not much has changed since the invention of the keyboard and mouse. Virtual keyboards on touchscreen displays, voice control and swipe-to-type methods have come along the way, yet none has really replaced the full-sized QWERTY keyboard we all know.

This is where researchers Ue-Hwan Kim, Sahng-Min Yoo and Jong-Hwan Kim from the Korea Advanced Institute of Science and Technology (KAIST) decided to rethink the entire concept.

Their answer is a keyboard that is fully imaginary.

The product is called 'I-Keyboard', an eyes-free, AI-powered, invisible keyboard interface that positions itself based on where users choose to place their hands when they are ready to type.

No calibration needed.

According to the researchers' paper:

> Keyboards, both physical ones and virtual ones on touchscreen, are already ubiquitous devices.

With the I-Keyboard project, the team wants to eliminate the keyboard's predefined layout, shape, and size. By leveraging AI, the system should let users start typing anywhere on the touchscreen, at any angle, just as they would on a normal keyboard.

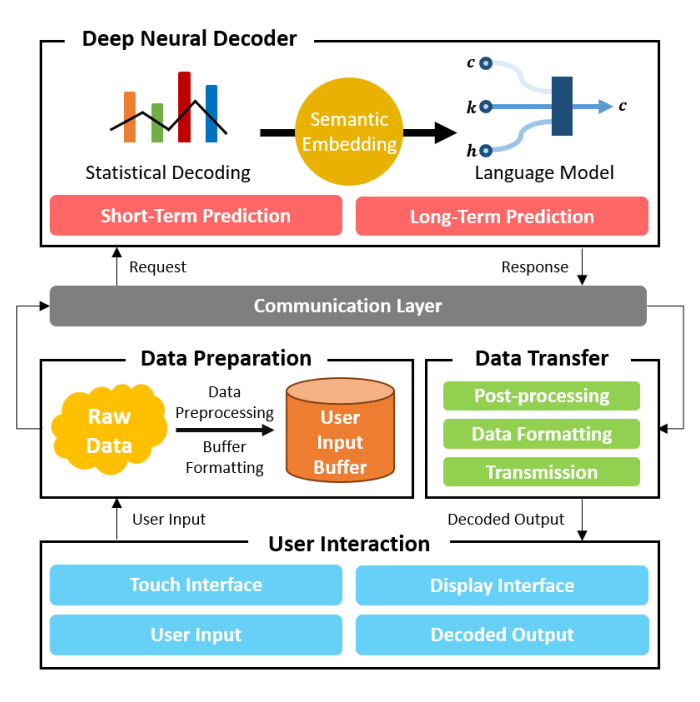

The researchers devised the I-Keyboard's system architecture, which comprises three modules: a user interaction module, a data preparation module, and a communication layer.

The user interaction module receives input through a touchscreen or other touch interface, the data preparation module preprocesses and formats the raw inputs, and the communication layer tightly integrates the machine learning framework with the app framework.
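The article does not say exactly what the data preparation module does to the raw touches. As a rough illustration of why such a step helps a keyboard that can appear "anywhere", here is a minimal sketch, assuming a simple normalization: each phrase's touch points are centered on their mean and divided by their spread, so the result no longer depends on where, or how large, the user's imaginary keyboard is. The function name `normalize_touches` is hypothetical, and rotation ("at any angle") is not handled here.

```python
from statistics import mean, pstdev

# Hypothetical type for one raw touch: (x, y) in screen pixels.
Point = tuple[float, float]


def normalize_touches(points: list[Point]) -> list[Point]:
    """Center touch points on their mean and scale them by their spread.

    Illustrative preprocessing only, not the I-Keyboard module itself:
    after this step, the same typing pattern placed anywhere on the
    screen (or drawn larger or smaller) yields the same coordinates.
    """
    if not points:
        return []
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    cx, cy = mean(xs), mean(ys)
    # One shared scale for both axes keeps the keyboard's aspect ratio;
    # fall back to 1.0 when all touches coincide (spread of zero).
    scale = max(pstdev(xs), pstdev(ys)) or 1.0
    return [((x - cx) / scale, (y - cy) / scale) for x, y in points]
```

For example, a phrase typed 50 pixels further right produces the same normalized points as the original, up to floating-point rounding.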

To collect the data needed to make this possible, the team recruited 43 participants who regularly use both physical QWERTY keyboards and virtual keyboards on touchscreen mobile devices.

The team then asked participants to imagine that a keyboard existed on the touchscreen and to type as naturally as possible. The interface contains only a proceed button and a delete button.

Participants could start typing anywhere on the typing screen. The proceed button became active only once they had entered as many touches as the task sentence has characters, and they could not enter more touches than that. If they felt they had made a typing mistake, they could delete the touch points collected for the current sentence.
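The collection rule above can be sketched as a small state object. This is a hypothetical model, not the team's released tool: the class name `PhraseSession` is invented, spaces are assumed to count as one touch each, and delete is assumed to clear all touches for the current sentence (the article does not say whether it removes only the last one).

```python
from dataclasses import dataclass, field


@dataclass
class PhraseSession:
    """Hypothetical model of one task sentence in the collection tool."""

    task_sentence: str
    touches: list[tuple[float, float]] = field(default_factory=list)

    def touch(self, x: float, y: float) -> bool:
        """Record a touch; refuse it once the sentence length is reached."""
        if len(self.touches) >= len(self.task_sentence):
            return False
        self.touches.append((x, y))
        return True

    def delete(self) -> None:
        """Assumption: discard every touch collected for this sentence."""
        self.touches.clear()

    @property
    def can_proceed(self) -> bool:
        # Proceed unlocks only when there is exactly one touch per character.
        return len(self.touches) == len(self.task_sentence)
```

With the task sentence `"hi you"`, for instance, proceed unlocks after the sixth touch and a seventh touch is rejected.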

For the first 15 sentences, the participants familiarized themselves with the typing interface.

After the warm-up, participants transcribed 150 to 160 sentences randomly sampled from the Twitter and 20 Newsgroups datasets. In total, the collected data comprised 7,245 phrases and 196,194 keystroke instances.
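A quick sanity check on these figures: dividing the totals gives the average phrase length and the number of phrases per participant. The comparison range assumes the 7,245 total also counts the 15 warm-up sentences, which the article does not state explicitly.

```python
PARTICIPANTS = 43
PHRASES = 7_245
KEYSTROKES = 196_194
WARM_UP = 15
TASK_RANGE = (150, 160)

keys_per_phrase = KEYSTROKES / PHRASES         # average touches per phrase
phrases_per_person = PHRASES / PARTICIPANTS    # average phrases per participant

# Assumption: the phrase total includes the warm-up sentences.
low, high = (WARM_UP + n for n in TASK_RANGE)

print(f"{keys_per_phrase:.2f} keystrokes per phrase")
print(f"{phrases_per_person:.1f} phrases per participant (expected {low}-{high})")
```

This prints about 27.08 keystrokes per phrase and 168.5 phrases per participant, which sits inside the 165 to 175 range implied by the study design under that assumption.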

In addition, the team randomly selected nine of the participants and asked them to evaluate three types of feedback. They typed ten phrases with each type, 30 phrases in total. After the typing session, the team collected three subjective ratings from each participant for each feedback type.

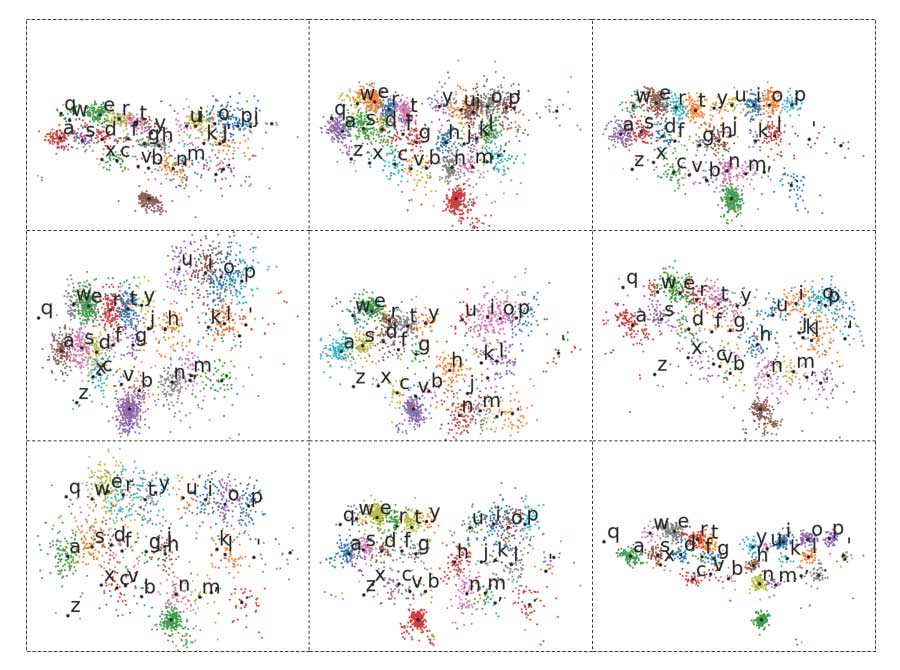

The team used user behavior analysis to characterize participants' mental models of the keyboard. They found that although each participant pictures the keyboard layout differently, the patterns show that participants can type on touchscreens even without visual or physical cues.

In the paper, the researchers propose an efficient and effective way to translate user inputs into character sequences, using a module that receives input through a touch interface and shows the decoded sequence on a display interface.

“[U]sers do not need to learn any new concept regarding I-Keyboard prior to usage. They can just start typing naturally by transferring the knowledge of physical keyboards,” they wrote. “[They] can keep typing even when they have taken off their hands phrase after phrase without an additional calibration step.”

The science behind this is to use algorithms that figure out what users intend to type by constantly adjusting the invisible keyboard to fit what users have in mind, instead of relying on touch or finger precision.
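To make the idea of a keyboard that "adjusts itself" concrete, here is a deliberately simple sketch. It is not the researchers' machine learning decoder; it swaps in a plain geometric baseline. Each touch is mapped to the nearest key of a QWERTY template, then the keyboard's origin is re-estimated so the assigned keys fit the touches best, and the two steps repeat. The template coordinates, the known `key_size` and the initial `origin` guess (for instance from where the hands first land) are all assumptions, and rotation is ignored.

```python
import math

# Hypothetical QWERTY template: key centers in key units (1.0 = one key pitch),
# with each row shifted right the way physical keyboards stagger their rows.
ROWS = [("qwertyuiop", 0.0), ("asdfghjkl", 0.25), ("zxcvbnm", 0.75)]
TEMPLATE = {
    ch: (col + shift, float(row))
    for row, (keys, shift) in enumerate(ROWS)
    for col, ch in enumerate(keys)
}
TEMPLATE[" "] = (4.5, 3.0)  # space bar, approximated by its center point


def decode(touches, key_size, origin, iterations=5):
    """Nearest-key decoding with an adaptive keyboard origin (a baseline).

    Returns the decoded text and the refined origin in screen pixels.
    """
    if not touches:
        return "", origin
    ox, oy = origin
    text = ""
    for _ in range(iterations):
        keys = []
        for px, py in touches:
            # Express the touch in key units relative to the current origin.
            u, v = (px - ox) / key_size, (py - oy) / key_size
            keys.append(min(TEMPLATE, key=lambda k: math.dist((u, v), TEMPLATE[k])))
        text = "".join(keys)
        # Move the invisible keyboard: average offset between each touch
        # and the template position of the key it was assigned to.
        n = len(touches)
        ox = sum(px - TEMPLATE[k][0] * key_size for (px, _), k in zip(touches, keys)) / n
        oy = sum(py - TEMPLATE[k][1] * key_size for (_, py), k in zip(touches, keys)) / n
    return text, (ox, oy)
```

For example, touches that hit the key centers of "hello world" on a 40-pixel keyboard whose origin is (320, 480) decode correctly from a starting guess of (310, 470), and the origin converges to (320, 480). Because the origin is re-estimated from the decoded keys, a drifting hand position is gradually followed rather than requiring recalibration, which is the intuition the article describes; a learned decoder would also use language context to resolve ambiguous touches.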

This "magic" managed to do its job with a staggering 95.8 percent accuracy.
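The article does not say how the 95.8 percent figure was measured. One common way to score a text decoder is character-level accuracy, defined as one minus the character error rate, where the error count is the Levenshtein edit distance between the intended and the decoded text. The sketch below shows that metric as an assumption, not as the paper's evaluation code.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: fewest insertions, deletions and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if characters match)
            ))
        prev = curr
    return prev[-1]


def char_accuracy(reference: str, decoded: str) -> float:
    """Fraction of the reference decoded correctly (1 - character error rate)."""
    if not reference:
        return 1.0 if not decoded else 0.0
    return max(0.0, 1.0 - edit_distance(reference, decoded) / len(reference))
```

If the reported figure is character-level, 95.8 percent works out to roughly one wrong character in every 24 (1 / 0.042 ≈ 23.8).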

Still in its infancy, the AI and its invisible keyboard have a long way to go before becoming truly reliable.

With further development and better touch interfaces, the developers believe that the I-Keyboard could be improved to become a fully imaginary replacement for physical keyboards. This in turn could be a game changer for UX design.

The researchers want to make the project open source: "we make the materials developed in this work public," they wrote.

This includes the source code for the data collection tool, the collected data, the data preprocessing tool, and the I-Keyboard framework itself.