Twitter has long been a place for hate speech.

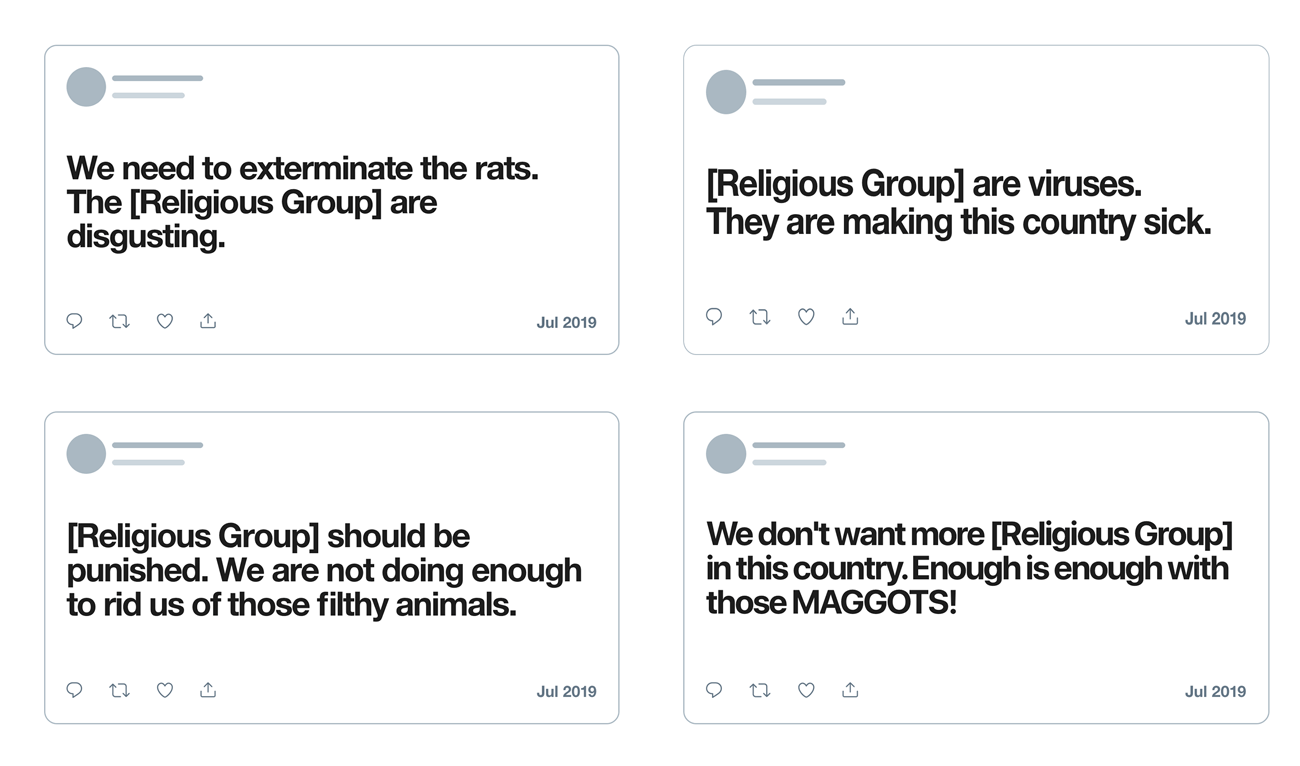

With violence against religious groups rising around the world, Twitter has updated its hateful conduct rules to include dehumanizing speech against those groups. The change, a significant shift in how the platform moderates hate speech, bars users from dehumanizing others with words like "rats", "viruses", "maggots", or similar terms.

Following the rules update, Twitter also said that it will require existing tweets that target specific religious groups to be removed as violations of the company's code of conduct.

Users who posted such tweets won't be banned, however, because the tweets were made before Twitter implemented and communicated the policy.

According to Twitter in a Twitter Safety blog post:

"After months of conversations and feedback from the public, external experts and our own teams, we’re expanding our rules against hateful conduct to include language that dehumanizes others on the basis of religion."

The strategy is meant to curb the spread of hate speech that religious groups experience on the platform.

So whether the context is white supremacists responsible for the murders of Jewish congregants in Pittsburgh or the massacre in Christchurch, New Zealand, or attacks by Islamic militants like ISIS, Twitter won't allow dehumanizing posts that relate to religion.

The updated rules, however, do not cover this type of language when it is aimed at political groups, hate groups or other non-marginalized groups.

One reason is that public consultations indicated users still wished to use dehumanizing language to criticize political organizations and hate groups.

Twitter said that it started planning to update its rules after receiving more than 8,000 responses from people in more than 30 countries. If the approach works, Twitter may extend the rule to cover other groups.

Social media has played a big role in disseminating hate speech and radicalizing users. Twitter and other tech companies have struggled to strike a balance between free expression and protecting users from attack.

Too much restriction will annoy users and will certainly hurt free speech and business. But rules that are too loose will make the internet a much worse place to be.

Twitter's hateful conduct policy had already banned users from spreading scaremongering stereotypes about religious groups. The social network had also prohibited the use of imagery that might provoke hatred.

Extending the dehumanization rule to religious groups is one more step toward making Twitter less toxic.

Twitter said that it would also respond to user reports of such incidents and employ machine-learning tools to automatically flag suspect posts for review by its human moderators. These moderators, according to Twitter, are given more in-depth training to ensure a fair review process.

Similarly, Facebook's Instagram recently took steps to discourage profanity and bullying on its platform by asking users "Are you sure you want to post this?" when its algorithm determines a message to be abusive.