Artificial intelligence powered by deep learning has proven itself capable of creating something out of nothing.

One example is the website 'This Person Does Not Exist', which generates startlingly realistic fake faces.

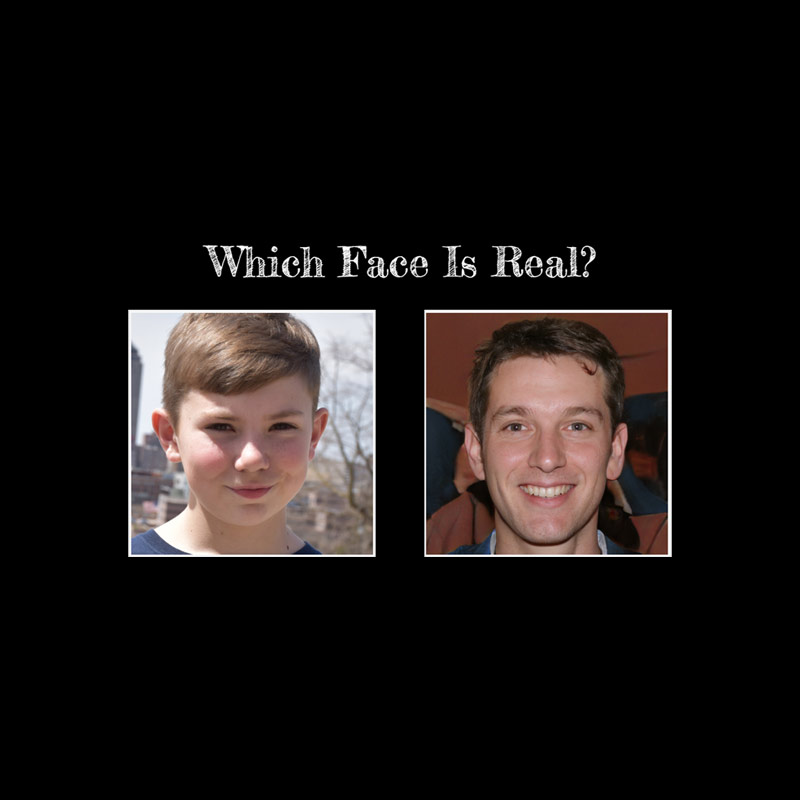

Now there is a similar site called 'Which Face Is Real' (whichfaceisreal.com) that goes a step further.

Besides showing those chillingly lifelike fake faces, the site lets visitors test their ability to distinguish AI-generated fakes from genuine photos.

The site was developed by Jevin West and Carl Bergstrom at the University of Washington as part of the Calling Bullshit project. The two believe that the rise of AI-generated fake faces could undermine society's trust in evidence.

This is why the two academics want to educate the public about what AI can do and, in the case of fake faces, help people get better at telling them apart from real ones.

Bergstrom and West noted that malicious use of these fakes can spread misinformation.

For example, AI could be used to generate a picture of a fake culprit that is then circulated online and spread on social networks.

In such scenarios, journalists usually try to verify the source of an image using tools like Google’s reverse image search. But that approach wouldn’t work on an AI fake, because a freshly generated face has no earlier source to trace.

“If you wanted to inject misinformation into a situation like that, if you post a picture of the perpetrator and it’s someone else it’ll get corrected very quickly,” says Bergstrom. “But if you use a picture of someone that doesn’t exist at all? Think of the difficulty of tracking that down.”

"When a new technology like this comes along, the most dangerous period is when the technology is out there but the public isn’t aware of it," said Bergstrom. "That’s when it can be used most effectively."

"So what we’re trying to do is educate the public, make people aware that this technology is out there," continued West. "Just like eventually most people were made aware that you can Photoshop an image."

On 'Which Face Is Real', the two academics present pairs of images: a real photo from the FFHQ (Flickr-Faces-HQ) collection and a synthetic face generated by the StyleGAN system and posted to thispersondoesnotexist.com, which serves a new artificial image every 2 seconds.

Both thispersondoesnotexist.com and whichfaceisreal.com rely on a machine learning method called a generative adversarial network (GAN) to produce their fake faces.

These networks learn the traits of real people's faces by training on a huge dataset of photos. They pick up the patterns in that data and then produce new faces of their own that share those patterns.

GANs work well for this kind of task because they involve more than one learning agent, each pushing the other to improve.

In this case, the faces come from StyleGAN, photorealistic face-generation software that was open-sourced by graphics hardware manufacturer Nvidia.

The first agent in the GAN is the 'generator', trained to create a new human face from scratch. The other agent is the 'discriminator', which judges the generator's work by trying to determine whether each image it sees is real or fake.
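To make this tug-of-war a little more concrete, here is a minimal Python sketch of the loss functions from the classic GAN formulation, scored for a single real photo and a single generated face. It assumes the discriminator outputs a probability that an image is real; the function names are hypothetical, and StyleGAN's actual training code may use different loss variants plus extra regularization, so treat this as an illustration of the idea rather than Nvidia's implementation.

```python
import math

EPS = 1e-7  # assumption: clamp probabilities so log() never receives 0


def _clamp(p: float) -> float:
    """Keep a probability strictly inside (0, 1) to avoid log(0)."""
    return min(max(p, EPS), 1.0 - EPS)


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Binary cross-entropy loss for the discriminator.

    d_real: discriminator's probability that a real photo is real.
    d_fake: discriminator's probability that a generated face is real.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    return -(math.log(_clamp(d_real)) + math.log(1.0 - _clamp(d_fake)))


def generator_loss(d_fake: float) -> float:
    """'Non-saturating' generator loss: low when the discriminator is fooled."""
    return -math.log(_clamp(d_fake))


# Discriminator confidently spots the fake: low D loss, high G loss.
print(round(discriminator_loss(0.9, 0.1), 3))  # 0.211
print(round(generator_loss(0.1), 3))           # 2.303

# Generator fools the discriminator: G loss drops.
print(round(generator_loss(0.9), 3))           # 0.105
```

During training, each agent is updated to lower its own loss, so whenever the discriminator gets better at spotting fakes, the generator is pushed to produce faces that look more real.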

With the two agents pitted against each other, the generator eventually becomes capable of producing highly realistic images at shockingly high resolution.

Using this technique, researchers can train AI to generate just about anything, including manipulated audio and video. Although there are limitations to what such systems can do, researchers and scientists in the AI field continue to steadily improve the technology.

For example, after deepfakes were created to put people's fantasies on screen, developers extended the technology to deepfake dancing and even to 'Deep Video Portraits', which take deepfakes to a whole new level.

There have also been initiatives to keep deepfakes from polluting real data.

For example, DARPA created tools to automatically spot deepfakes and other AI-made fakery, and there has also been an attempt to protect videos from tampering using blockchain technology.

But for people who want to learn to tell the fakes apart by themselves, West says "it’s actually quite easy to do."

For example, AI-generated faces can give themselves away through asymmetry, misaligned teeth, and unrealistic hair and ears.

"But these fakes will get better. In another three years [these fakes] will be indistinguishable," continued West.

When that happens, knowing that such fakes exist will be half the battle. "Our message is very much not that people should not believe in anything. Our message is the opposite: it’s don’t be credulous," said Bergstrom.