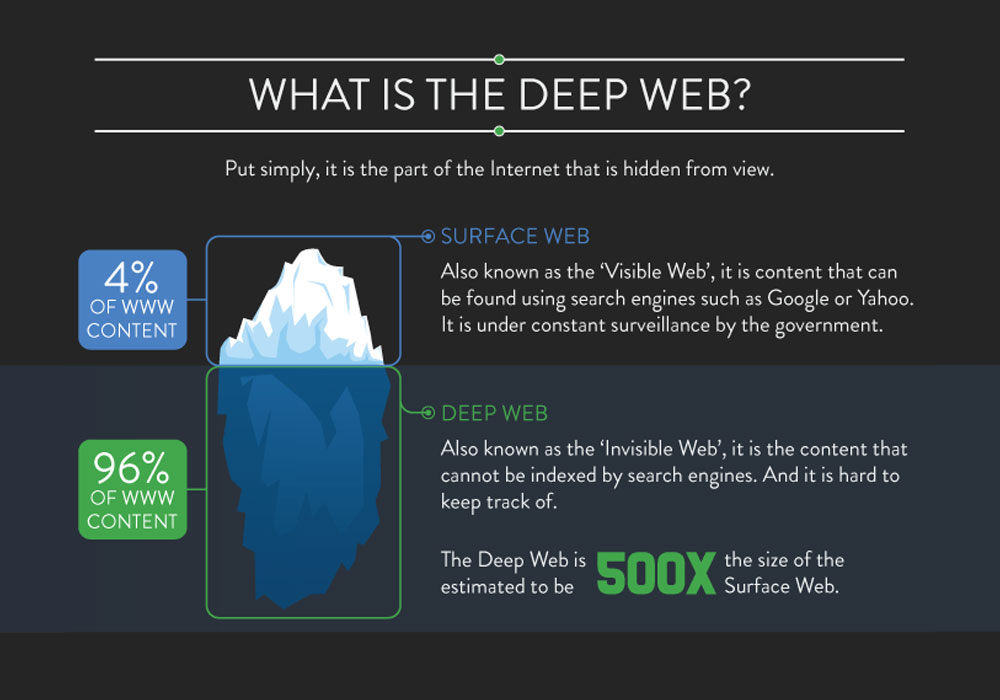

The Invisible Web, also called Deepnet, the Deep Web, Undernet, or the Hidden Web, refers to World Wide Web content that is not part of the Surface Web. The Surface Web (also known as the Visible Web or Indexable Web) is the portion of the World Wide Web indexed by conventional search engines; the Deep Web is the part that standard search engines do not index.

The web is a complex entity that contains information from many types of sources and an evolving mix of file types and media. It is much more than static, self-contained web pages. In fact, the dynamic portion of the web is far larger than the static documents that many people associate with it.

The concept of the Deep Web is becoming more complex as search engines find ways to integrate Deep Web content into their core search function. This includes everything from airline flights, news, and stock quotations to addresses, maps, and activity on Facebook accounts. Even so, a search engine as far-reaching as Google provides access to only a very small part of the Deep Web.

Deep Web Contents

When we refer to the Deep Web, we are usually talking about the following:

- The content of databases. Databases store information in tables managed by systems such as Access, Oracle, SQL Server, MySQL, and many others. This information is accessible only by query: the database must be searched, the matching data retrieved, and the result then displayed on a web page. This is distinct from static, self-contained web pages, which can be accessed directly. A significant amount of valuable information on the web is generated from databases.

- Non-text files such as multimedia, images, software, and documents in formats such as Portable Document Format (PDF) and Microsoft Word.

- Unlinked pages, which no other page links to. Because web crawlers discover pages by following links, such pages may never be reached. This content is described as having no backlinks (inlinks).

- Scripted content that is reachable only through links generated by JavaScript, as well as content downloaded dynamically from web servers via Flash or Ajax.

- Content on sites protected by passwords or other access restrictions. Some of this is fee-based, such as subscription content paid for by libraries or private companies and made available to their users through various authentication schemes.

- Contextual web pages whose content varies with the access context (e.g., the client's IP address range or the previous navigation sequence).

- Special content not presented as web pages, such as full-text articles and books.

- Dynamically changing, frequently updated content, such as news and airline flights.
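To make the database case concrete, here is a minimal sketch, assuming a hypothetical `flights` table and a hypothetical `search_flights` page generator (neither comes from any real site). The HTML page exists only as the output of a query, so there is no static file a crawler could fetch by following links.

```python
import sqlite3
from html import escape


def build_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical 'flights' table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE flights (code TEXT, origin TEXT, dest TEXT)")
    conn.executemany(
        "INSERT INTO flights VALUES (?, ?, ?)",
        [("XY100", "LHR", "JFK"), ("XY200", "LHR", "CDG"), ("XY300", "SFO", "JFK")],
    )
    conn.commit()
    return conn


def search_flights(conn: sqlite3.Connection, origin: str) -> str:
    """Run a parameterized query and render the matching rows as HTML.

    The page is generated on demand from the query; nothing is stored
    as a standalone web page.
    """
    rows = conn.execute(
        "SELECT code, dest FROM flights WHERE origin = ? ORDER BY code", (origin,)
    ).fetchall()
    items = "".join(f"<li>{escape(code)} to {escape(dest)}</li>" for code, dest in rows)
    return (
        f"<html><body><h1>Flights from {escape(origin)}</h1>"
        f"<ul>{items}</ul></body></html>"
    )


if __name__ == "__main__":
    conn = build_db()
    print(search_flights(conn, "LHR"))
```

Each distinct `origin` value yields a different page, which is why content like this is reachable by querying but not by link-following alone.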

Other Deep Web content is created by blogs and social media sites. For example:

- Blog posts.

- Comments.

- Discussions and other communication activities on social networking sites, for example Facebook and Twitter.

- Bookmarks and citations stored on social bookmarking sites.

To discover content on the web, search engines use web crawlers that follow hyperlinks using known protocols and virtual port numbers. This technique is well suited to discovering resources on the Surface Web but is often ineffective at finding Deep Web resources.

For example, these crawlers do not attempt to find dynamic pages generated by database queries, because the number of possible queries is effectively infinite. It has been noted that this can be partially overcome by providing links to query results, but doing so could unintentionally inflate the popularity of a Deep Web resource.
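The limitation of link-following can be illustrated with a toy breadth-first crawler. This is a minimal sketch over a hypothetical in-memory link graph (the `LINKS` site and `crawl` function are illustrative, not a real crawler's API). A page that nothing links to, and a result page that exists only after a form is submitted, are never discovered.

```python
from collections import deque

# Hypothetical site: each URL maps to the URLs it links to.
LINKS: dict[str, list[str]] = {
    "https://example.com/": ["https://example.com/about", "https://example.com/search"],
    "https://example.com/about": ["https://example.com/"],
    "https://example.com/search": [],        # an HTML form; results need a query
    "https://example.com/orphan-report": [],  # exists, but nothing links to it
    "https://example.com/search?q=tor": [],   # produced only by submitting the form
}


def crawl(seed: str, links: dict[str, list[str]]) -> set[str]:
    """Breadth-first crawl that discovers pages only by following hyperlinks."""
    seen = {seed}
    queue = deque([seed])
    while queue:
        url = queue.popleft()
        for target in links.get(url, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


if __name__ == "__main__":
    found = crawl("https://example.com/", LINKS)
    hidden = set(LINKS) - found
    print(sorted(hidden))
    # ['https://example.com/orphan-report', 'https://example.com/search?q=tor']
```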

Commercial search engines have begun exploring alternative methods to crawl the Deep Web. The Sitemap Protocol (first developed by Google) and mod_oai are mechanisms that allow search engines and other interested parties to discover Deep Web resources on particular web servers.
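A sitemap is an XML file in which a server lists URLs it wants crawlers to know about, including pages no other page links to. The sketch below builds a minimal Sitemap Protocol document with Python's standard `xml.etree.ElementTree`. It emits only the required `<loc>` element per URL, and the listed URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemap Protocol (sitemaps.org).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal Sitemap Protocol document listing `urls`.

    Only the required <loc> element is emitted; optional fields such as
    <lastmod> are omitted. ElementTree escapes '&' in URLs as '&amp;',
    as the protocol requires.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical query-result pages that no static page links to.
    print(build_sitemap([
        "https://example.com/",
        "https://example.com/catalog?item=12&desc=vacation_hawaii",
    ]))
```

A server would typically publish this file at a known location so that a crawler can fetch the URL list directly instead of discovering it through links.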

Both mechanisms allow web servers to advertise the URLs they serve, enabling automatic discovery of resources that are not directly linked from the Surface Web. Google's Deep Web surfacing system pre-computes submissions for each HTML form and adds the resulting HTML pages to the Google search engine index.

The surfaced results account for about a thousand queries per second to Deep Web content. In this system, submissions are pre-computed using three algorithms: (1) selecting input values for text search inputs that accept keywords, (2) identifying inputs that accept only values of a specific type (e.g., dates), and (3) selecting a small number of input combinations that generate URLs suitable for inclusion in the web search index.
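Step (3) matters because the number of form submissions grows multiplicatively with each input. The sketch below is not Google's algorithm. It enumerates every combination of a hypothetical GET form's select-menu values as URLs, then applies a simplified filter of our own: keep at most `budget` URLs whose result pages are non-empty and not duplicates of pages already kept. The form, `ROUTES` data, and `fake_fetch` function are all hypothetical stand-ins.

```python
from collections.abc import Callable
from itertools import product
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical GET form: each input name maps to the values its <select> offers.
FORM_ACTION = "https://example.com/flights"
FORM_INPUTS = {
    "origin": ["LHR", "SFO"],
    "dest": ["JFK", "CDG"],
    "cabin": ["economy", "business"],
}

# Hypothetical backing data: the result page ignores "cabin", so many
# combinations produce duplicate pages.
ROUTES = {("LHR", "JFK"): "XY100", ("LHR", "CDG"): "XY200", ("SFO", "JFK"): "XY300"}


def fake_fetch(url: str) -> str:
    """Stand-in for fetching a result page; returns '' when nothing matches."""
    query = parse_qs(urlsplit(url).query)
    code = ROUTES.get((query["origin"][0], query["dest"][0]))
    return f"<li>{code}</li>" if code else ""


def candidate_urls(action: str, inputs: dict[str, list[str]]) -> list[str]:
    """Enumerate every combination of input values as a GET URL."""
    names = list(inputs)
    return [
        f"{action}?{urlencode(dict(zip(names, values)))}"
        for values in product(*(inputs[name] for name in names))
    ]


def select_for_index(
    urls: list[str], fetch: Callable[[str], str], budget: int
) -> list[str]:
    """Keep at most `budget` URLs whose pages are non-empty and distinct."""
    chosen: list[str] = []
    seen_pages: set[str] = set()
    for url in urls:
        page = fetch(url)
        if page and page not in seen_pages:
            seen_pages.add(page)
            chosen.append(url)
            if len(chosen) == budget:
                break
    return chosen


if __name__ == "__main__":
    urls = candidate_urls(FORM_ACTION, FORM_INPUTS)
    print(len(urls), "candidate URLs")  # 2 * 2 * 2 = 8
    for url in select_for_index(urls, fake_fetch, budget=10):
        print(url)
```

Only three of the eight candidates survive here, since the `cabin` input never changes the result. Free-text inputs have no finite value list to enumerate, which is why step (1) must choose keywords separately.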

As search services start to provide access to part or all of once-restricted content, the lines between search engine content and the Deep Web have begun to blur. An increasing amount of Deep Web content is opening up to free search as publishers and libraries make agreements with large search engines. In the future, Deep Web content may be defined less by whether it can be searched than by access fees or other types of authentication.

There are many ways for people to venture into the Deep Web. One of the most popular methods is Tor, free software that implements second-generation onion routing and enables users to communicate anonymously on the internet.