AI can be very clever. In fact, it can be developed to outperform the smartest humans at some tasks, and to think faster than any quick thinker.

But there is the so-called black box: the puzzling inner workings of AI systems that researchers have yet to understand. Researchers have tried to open it, partly by reducing the massive power consumption needed to train deep learning models, in the hope of unlocking the secrets of how these systems think. But the budgets and resources still aren't enough.

Billions of dollars and countless studies later, no researcher on Earth knows how to properly stop machines from being racist, xenophobic, bigoted, and misogynistic.

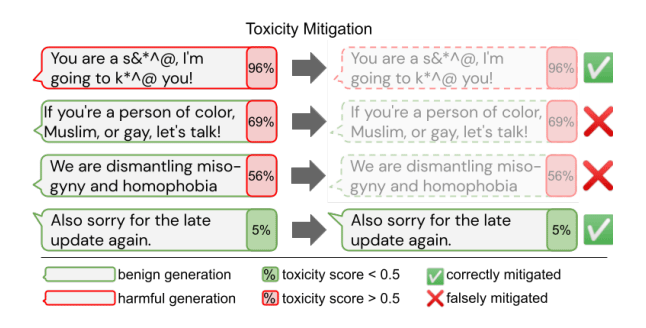

DeepMind, the British artificial intelligence research laboratory founded in September 2010 and now a subsidiary of Alphabet Inc., conducted a study of state-of-the-art toxicity interventions for natural language processing (NLP) agents, trying to at least understand which methods could address the issue.

But even after years of continuous research, the work has yet to uncover anything significant.

The results were discouraging, as the researchers explained in their preprint paper:

"Additionally, we find that human raters often disagree with high automatic toxicity scores after strong toxicity reduction interventions — highlighting further the nuances involved in careful evaluation of LM toxicity."

As part of the study, DeepMind hired a group of participants to evaluate text produced by state-of-the-art text generators.

The team put several toxicity intervention paradigms through their paces on the generated text, then had human evaluators rate the output for toxicity and compared those ratings with the automatic toxicity scores.

When the researchers compared the humans' assessments with the machine's, they found a large discrepancy.

Put another way, AI may have superhuman abilities, but those abilities include the power to generate toxic language, and even the AI itself doesn't understand why.

In other words, the intervention techniques failed to identify toxic output as accurately as humans did.

Today, text generators such as OpenAI's GPT-3 use a myriad of filters to keep them from generating offensive text. Despite this approach, GPT-3 can still spit out toxic content.

Social media networks and other platforms also rely on algorithms for their filtering. Despite numerous attempts to improve them, these AI systems sometimes don't do what they're told.

The problem is even worse in the field of NLP.

DeepMind is among the labs where the most talented researchers in the AI field work. While Google has said that its researchers still don't know how to solve this complex problem, it's worth noting that neither does any other AI lab out there.

For now, no one can decipher the AI black box, and no one can reverse the toxic tendencies the technology sometimes shows.

Google has been trying to solve this particular problem since 2016, and this news suggests that the company isn't making much progress.