Facebook has had its share of controversies, and it is trying to cut them down.

As the largest social media network, Facebook has the most users. With that many users, anything from good content to bad content can be shared before Facebook even notices. Since Facebook has massive influence, this is a problem that needs to be solved.

To reduce the reach of sensationalist and provocative content, Facebook is updating its algorithms to demote such clickbait posts.

This was announced in a blog post by founder and CEO Mark Zuckerberg, in which he details how Facebook will deal with problematic content going forward.

Zuckerberg refers to clickbait content as "borderline." Such content doesn't technically break Facebook's rules, but it does lead to a poor user experience.

"The single most important improvement in enforcing our policies is using artificial intelligence to proactively report potentially problematic content to our team of reviewers, and in some cases to take action on the content automatically as well."

Facebook is able to pinpoint clickbait down to individual posts, but broader questions remain, such as:

- How should Facebook balance the ideal of giving everyone a voice with the realities of keeping people safe and bringing people together?

- What should be the limits to what people can express?

- What content should be distributed and what should be blocked?

- Who should decide these policies and make enforcement decisions?

- Who should hold those people accountable?

There is no broad agreement on the right approach, because people from different countries may reach very different conclusions about what is acceptable and what isn't. Cultural norms also vary from country to country, and they are shifting rapidly.

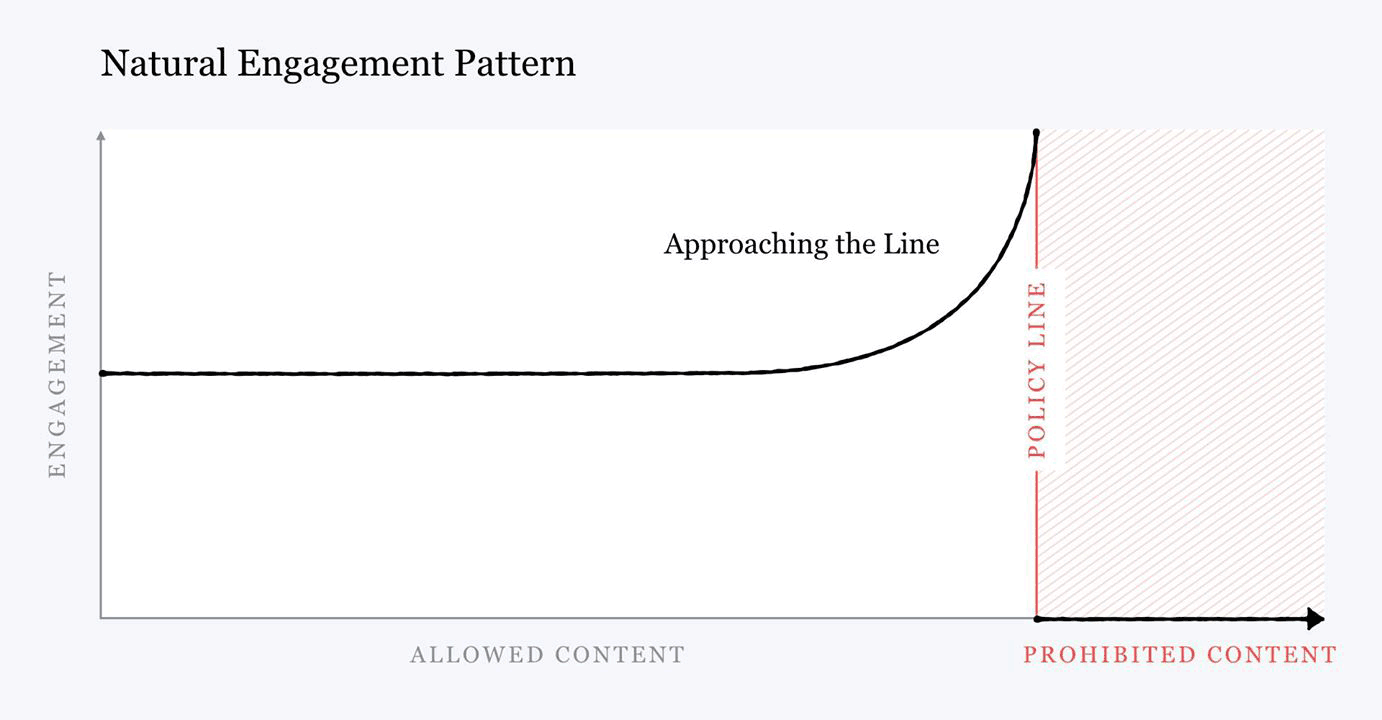

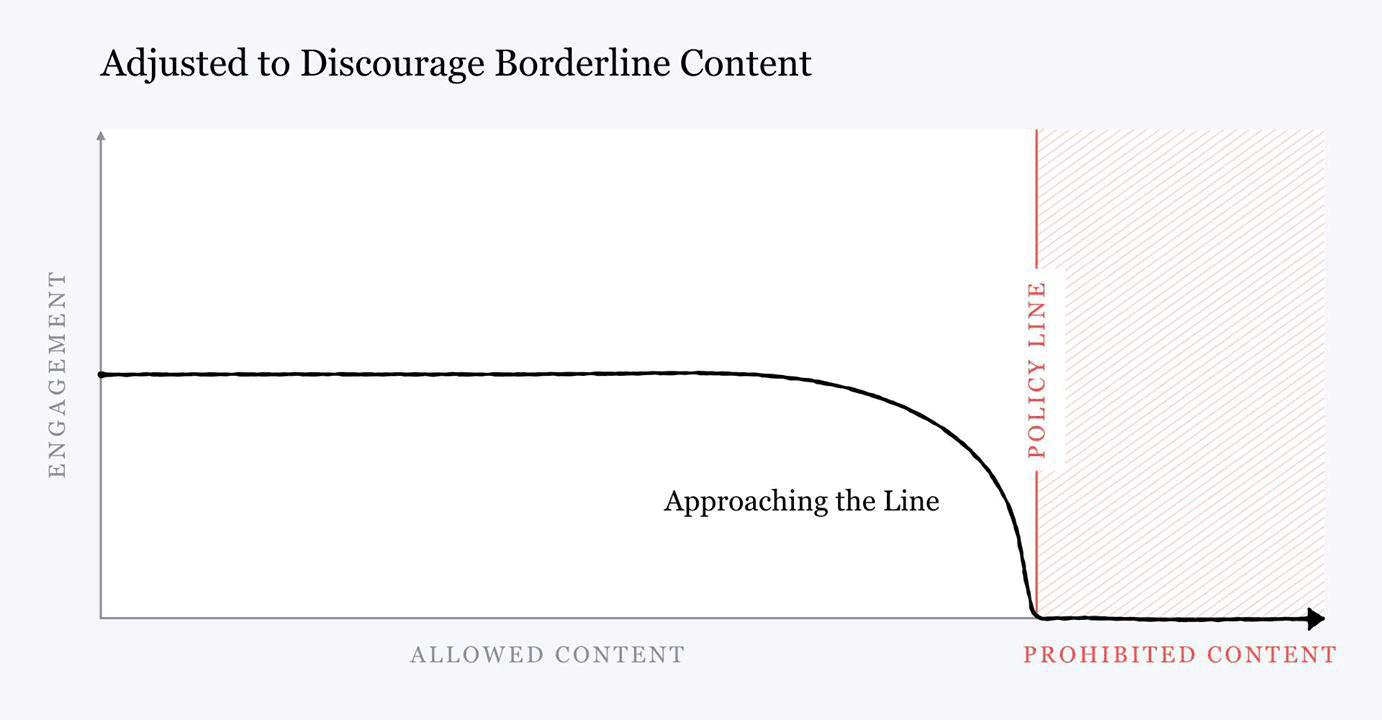

To manage these issues, Facebook penalizes borderline content so that it gets less distribution and engagement. By reshaping the distribution curve as in the graphs above, so that distribution declines as content gets more sensational, Facebook disincentivizes people from creating provocative content that sits as close to the line as possible.
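To make the idea concrete, here is a toy model in Python. Everything in it is an assumption for illustration, not Facebook's actual ranking system: a hypothetical `score` from 0 to 1 measures how close a post is to the policy line, the hypothetical `natural_engagement` rises as the score grows, and the hypothetical `demotion_factor` shrinks faster than engagement grows, so the resulting distribution falls the closer a post gets to the line.

```python
def natural_engagement(score: float) -> float:
    # Hypothetical: engagement rises as content approaches the line (score 1.0).
    return 1.0 + 2.0 * score


def demotion_factor(score: float) -> float:
    # Hypothetical penalty: a cubic drop that outpaces the linear rise above.
    return (1.0 - score) ** 3


def distribution(score: float) -> float:
    # Toy "reach" a post receives after demotion is applied.
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    return natural_engagement(score) * demotion_factor(score)


if __name__ == "__main__":
    for s in (0.0, 0.25, 0.5, 0.75, 0.95):
        print(f"score={s:.2f}  natural={natural_engagement(s):.2f}  "
              f"demoted={distribution(s):.3f}")
```

Because the cubic penalty outpaces the linear rise in engagement, distribution in this sketch decreases steadily across the whole range, which is the incentive shift described above: pushing content closer to the line earns less reach, not more.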

Facebook relies heavily on AI systems that can proactively identify potentially harmful content so the company can act on it more quickly.

"While I expect this technology to improve significantly, it will never be finished or perfect," continued Zuckerberg.

For this reason, a fundamental question is how Facebook can ensure that its systems are not biased in ways that treat people unfairly. This is why Facebook is also rolling out a content appeals process.

"We started by allowing you to appeal decisions that resulted in your content being taken down. Next we're working to expand this so you can appeal any decision on a report you filed as well. We're also working to provide more transparency into how policies were either violated or not."

In practice, this appeals process should help Facebook correct a significant number of the errors its AI makes, while the company continues to improve its accuracy over time.

What Zuckerberg is really saying here is that Facebook has determined that reducing the reach of borderline content is the best solution, rather than changing the rules on what is allowed to be posted.