Machine learning has fundamentally changed how humans interact with technology.

With AI, humans can develop machine-learning systems capable of recognizing complex images, speaking like humans, driving cars, aiding the development of new medicines, detecting diseases, and much more. Unfortunately, building these systems is burdensome.

While machine learning technology can do some things automatically, its training processes still require a lot of human input.

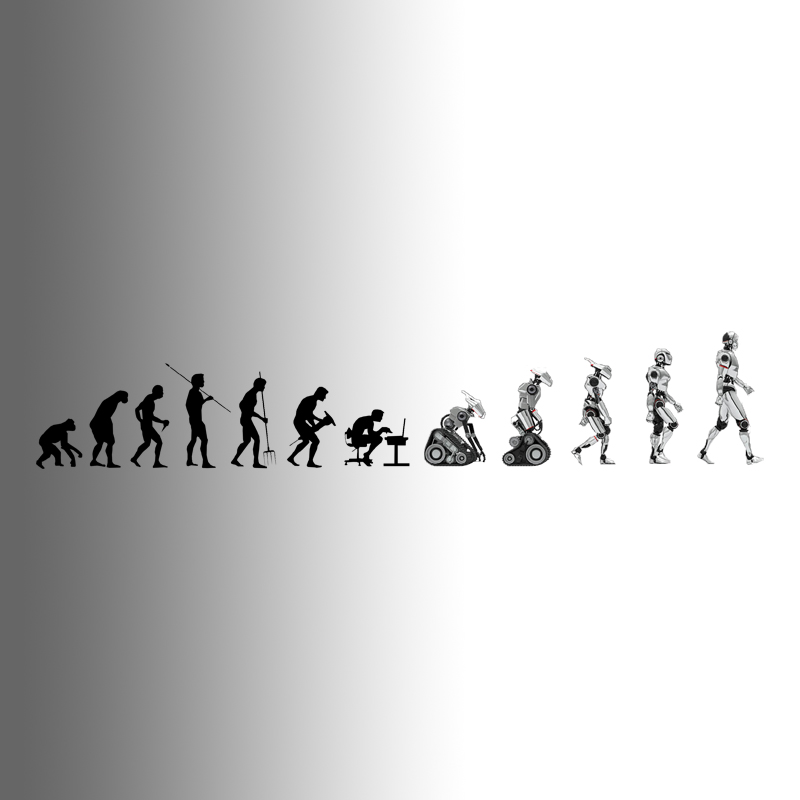

For many years, researchers at Google have been working on a machine learning technology known as AutoML (automated machine learning). This time, the researchers have 'evolved' the technology, Darwinian-style.

Here, the researchers have shown that they can create AI programs that continue to improve upon themselves with minimal human intervention, if any.

Called 'AutoML-Zero', the system can even improve faster than it would if humans were doing the coding.

In a preprint paper, the team said:

"It is possible today to automatically discover complete machine learning algorithms just using basic mathematical operations as building blocks. We demonstrate this by introducing a novel framework that significantly reduces human bias through a generic search space."

The original AutoML system is intended to make it easier for apps to leverage machine learning. While the system already includes plenty of automated features, it still requires some human intervention. AutoML-Zero cuts the required human input down considerably.

Using a simple three-step process (setup, predict and learn), AutoML-Zero can create machine-learning algorithms from scratch.

To make this happen, the system starts with a population of 100 candidate algorithms, made by randomly combining simple mathematical operations.

Then, in a sophisticated trial-and-error process, the system picks the best-performing algorithm for a given task to be the "parent", which is then retained and tweaked for another round of trials.

In other words, the candidate algorithms 'mutate' as the process continues.

Each newly created algorithm is tested on the tasks the AI is meant for, and the best-performing algorithms are kept for future iterations.

"This parent is then copied and mutated to produce a child algorithm that is added to the population, while the oldest algorithm in the population is removed," the paper states.

This is similar to the concept of "survival of the fittest", the phrase that originated from Darwinian evolutionary theory as a way of describing the mechanism of natural selection.
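The selection loop the paper describes (pick a parent, copy and mutate it, add the child, remove the oldest) is a form of what is usually called regularized, or aging, evolution. Below is a toy Python sketch under heavy simplifying assumptions: a "program" is just a list of four hypothetical single-variable operations, and the target function 2x + 1, the tournament size of 10 and the mutation rules are illustrative choices. Only the population size of 100 comes from the text.

```python
import random
from collections import deque

# Toy instruction set (hypothetical; AutoML-Zero's real operations act on
# scalars, vectors and matrices).
OPS = {
    "add1": lambda v: v + 1,
    "double": lambda v: v * 2,
    "square": lambda v: v * v,
    "neg": lambda v: -v,
}
MAX_LEN = 6
XS = range(-5, 6)

def target(x):
    # The function the evolved program should reproduce.
    return 2 * x + 1

def run_program(program, x):
    # Apply each op in sequence; an empty program is the identity.
    for op in program:
        x = OPS[op](x)
    return x

def fitness(program):
    # Higher is better; 0 means an exact match on every sample point.
    return -sum(abs(run_program(program, x) - target(x)) for x in XS)

def random_program(rng):
    # Initial candidates: random combinations of 1 to 3 ops.
    return [rng.choice(list(OPS)) for _ in range(rng.randint(1, 3))]

def mutate(program, rng):
    # Copy the parent, then insert, delete or replace one op where possible.
    child = list(program)
    kind = rng.choice(["insert", "delete", "replace"])
    if kind == "insert" and len(child) < MAX_LEN:
        child.insert(rng.randint(0, len(child)), rng.choice(list(OPS)))
    elif kind == "delete" and child:
        del child[rng.randrange(len(child))]
    elif child:
        child[rng.randrange(len(child))] = rng.choice(list(OPS))
    return child

def regularized_evolution(population_size=100, tournament_size=10,
                          cycles=2000, seed=0):
    rng = random.Random(seed)
    # FIFO queue of (program, fitness) pairs; the oldest sits on the left.
    population = deque()
    for _ in range(population_size):
        prog = random_program(rng)
        population.append((prog, fitness(prog)))
    best = max(population, key=lambda p: p[1])
    for _ in range(cycles):
        # Tournament: the fittest of a random sample becomes the parent.
        sample = rng.sample(list(population), tournament_size)
        parent = max(sample, key=lambda p: p[1])
        child = mutate(parent[0], rng)
        population.append((child, fitness(child)))
        population.popleft()  # remove the oldest member, not the worst
        if population[-1][1] > best[1]:
            best = population[-1]
    return best, population

best, population = regularized_evolution()
print(best)  # best (program, fitness) seen; fitness 0 means an exact match
```

Removing the oldest member rather than the worst one means even a strong program eventually leaves the population unless its descendants keep performing well, which is where the "survival of the fittest" pressure comes from.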

Even more intriguing, the researchers said AutoML-Zero can search through 10,000 possible algorithms per second per processor, and the search can be scaled up. In other words, AutoML-Zero is also fast.

Eventually, this kind of technology could take AI development to a whole new level.

For example, it could allow developers with little to no AI expertise to create complex agents. It may even help researchers reduce human biases in AI, simply because humans are barely involved in the design process.

If computer scientists can scale up this kind of automated machine learning to increasingly complex tasks, the technology could open a new era of machine learning in which systems are designed by machines instead of humans. This would likely make it cheaper to get the benefits of deep learning, while also leading to novel solutions to real-world problems.

For now, the project, which has been open-sourced on GitHub, can only produce simple AI systems. But the researchers think its complexity can be scaled up rather rapidly.

"Starting from empty component functions and using only basic mathematical operations, we evolved linear regressors, neural networks, gradient descent... multiplicative interactions. These results are promising, but there is still much work to be done," the researchers noted in their preprint paper.