Google has released an image compression technology called RAISR (Rapid and Accurate Image Super-Resolution). Its purpose is to save precious data without sacrificing image detail and quality.

Every photo has its own story, and according to Google, each one deserves to be viewed at the best possible resolution. However, high-resolution images are large, so they use a lot of bandwidth, which leads to slower loading and higher data costs.

For people whose internet connection is slow or expensive, this can be a problem.

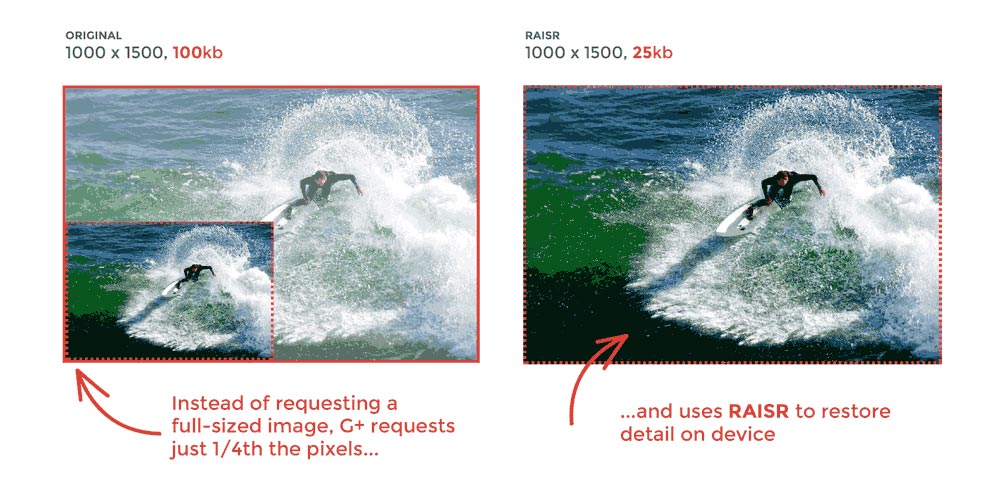

To address this issue, Google is using RAISR to use up to 75% less bandwidth per image. To achieve this, the technology uses machine learning to analyze low- and high-quality versions of the same image. From that analysis, it learns what makes the high-quality image superior and then simulates those differences on the smaller version.

According to a blog post published on January 11, 2017, Google is implementing the technology on Google+. The company says it is applying RAISR to more than a billion images a week, reducing those users' total bandwidth by about a third. Although the technology is initially limited to Google+, the company plans to roll it out more broadly.

As the internet grows, people use the web to share and store millions of photos every day. However, many of those images are either limited by the resolution of the device that took them or have had their quality deliberately lowered to suit mobile phones or people with slower internet connections.

But as the internet becomes faster in general and mobile displays improve, the demand for high-quality versions of low-resolution images is increasing.

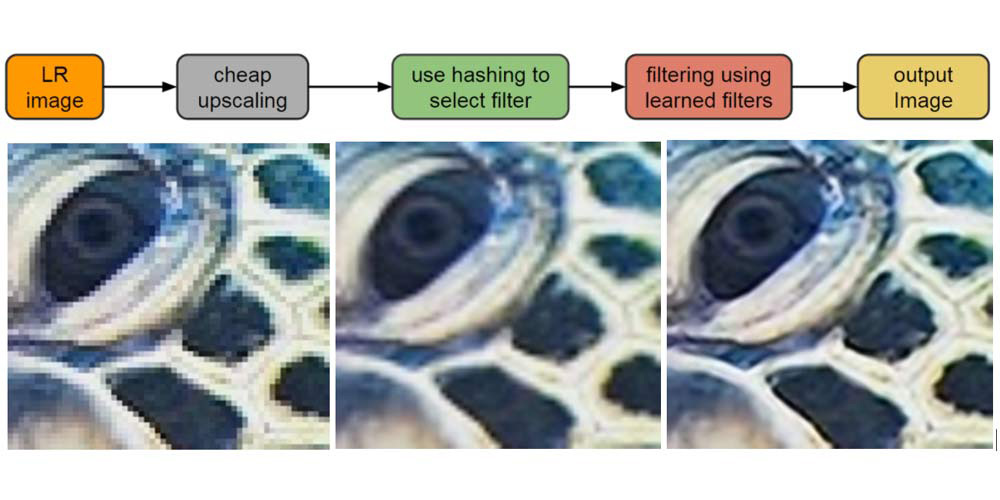

RAISR was first introduced by Google on its Research Blog on November 14, 2016. It uses machine learning to produce high-quality versions of low-resolution images, so that a smaller image can be enlarged into one comparable to its higher-quality counterpart. Producing a higher-resolution image from a lower-resolution one is called upsampling.

Simple upsampling methods tend to make the enlarged image look blurry. Instead, RAISR's machine learning compares pairs of images, one low-quality and one high-quality, to find filters that, when applied selectively to each pixel of the low-resolution image, recreate details of comparable quality to the original.
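
Finding a filter from a low/high image pair can be framed as a least-squares fit: choose the weights that best map each low-resolution neighborhood to the matching high-resolution pixel. The sketch below is our own illustration, not Google's code. It assumes 1D signals instead of 2D image patches to stay short, assumes the low-resolution signal has already been upsampled onto the high-resolution grid, fits a single 3-tap filter (RAISR learns many filters, one per patch category), and solves the normal equations with plain Gaussian elimination. The names `learn_filter` and `solve_linear` are hypothetical.

```python
from typing import List


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small dense system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda k: abs(m[k][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def learn_filter(low_up: List[float], high: List[float], taps: int = 3) -> List[float]:
    """Fit h minimising sum_i (high[i] - sum_k h[k] * low_up[i - r + k])**2, with r = taps // 2.

    `low_up` is the (hypothetically) pre-upsampled low-resolution signal, same length as `high`.
    `taps` should be odd so each neighbourhood is centred on its pixel.
    """
    r = taps // 2
    ata = [[0.0] * taps for _ in range(taps)]  # accumulates A^T A
    atb = [0.0] * taps                         # accumulates A^T b
    for i in range(r, len(low_up) - r):
        patch = low_up[i - r:i + r + 1]        # one row of A: the neighbourhood of pixel i
        for p in range(taps):
            atb[p] += patch[p] * high[i]
            for q in range(taps):
                ata[p][q] += patch[p] * patch[q]
    # The normal equations (A^T A) h = A^T b give the least-squares filter.
    return solve_linear(ata, atb)
```

If `high` was produced by applying some 3-tap filter to `low_up`, the fit recovers that filter up to rounding, provided the neighborhoods are varied enough for `A^T A` to be invertible.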

RAISR can be trained in two ways. The first is the direct method, where filters are learned directly from pairs of low- and high-resolution images. The second first applies a computationally cheap upsampler to the low-resolution image and then learns the filters from pairs of upsampled and high-resolution images.
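
For the second training method, the "computationally cheap upsampler" step can be sketched as follows. This assumes 2x linear interpolation of a 1D signal as the cheap upsampler (our choice for illustration, not necessarily Google's), and the helper names `linear_upsample_2x` and `make_training_pair` are hypothetical.

```python
from typing import List, Tuple


def linear_upsample_2x(low: List[float]) -> List[float]:
    """Cheap 2x upsampler: keep every sample and insert the midpoint between neighbours.

    A non-empty signal of n samples becomes 2n - 1 samples.
    """
    out: List[float] = []
    for a, b in zip(low, low[1:]):
        out.extend([a, (a + b) / 2])
    out.append(low[-1])
    return out


def make_training_pair(low: List[float], high: List[float]) -> Tuple[List[float], List[float]]:
    """Second training method: pair the cheaply upsampled signal with the high-resolution one.

    Assumes `high` is sampled on the same 2n - 1 point grid as the upsampled signal.
    """
    up = linear_upsample_2x(low)
    if len(up) != len(high):
        raise ValueError(f"expected {len(up)} high-resolution samples, got {len(high)}")
    return up, high
```

For example, `linear_upsample_2x([0.0, 2.0, 4.0])` returns `[0.0, 1.0, 2.0, 3.0, 4.0]`. The resulting upsampled/high-resolution pair is what the filters would then be learned from.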

For either method, RAISR filters are trained according to edge features found in small patches of an image, such as brightness/color gradients and flat or textured regions. These features are characterized by direction (the angle of an edge), strength (sharp edges have greater strength), and coherence (a measure of how directional the edge is).
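
Direction, strength, and coherence can be estimated from the gradients inside a patch. The sketch below uses a common structure-tensor approach (sum the outer products of the gradients, then take the eigenvalues of the resulting 2x2 matrix); treat it as an assumption about how such features can be computed, not as Google's exact code. The names `patch_features` and `hash_key` are hypothetical, as are the bin count and thresholds in `hash_key`. Because the tensor is summed over the patch, sensible strength thresholds depend on patch size.

```python
import math
from typing import List, Tuple


def patch_features(patch: List[List[float]]) -> Tuple[float, float, float]:
    """Return (angle, strength, coherence) for a square grayscale patch.

    Gradients are central differences over interior pixels; the features come from the
    eigenvalues l1 >= l2 >= 0 of the 2x2 structure tensor [[gxx, gxy], [gxy, gyy]].
    """
    n = len(patch)
    gxx = gxy = gyy = 0.0
    for y in range(1, n - 1):
        for x in range(1, n - 1):
            gx = (patch[y][x + 1] - patch[y][x - 1]) / 2
            gy = (patch[y + 1][x] - patch[y - 1][x]) / 2
            gxx += gx * gx
            gxy += gx * gy
            gyy += gy * gy
    half_trace = (gxx + gyy) / 2
    det = gxx * gyy - gxy * gxy
    disc = math.sqrt(max(half_trace * half_trace - det, 0.0))
    l1, l2 = half_trace + disc, max(half_trace - disc, 0.0)
    # Direction of the dominant gradient in [0, pi); the edge itself runs perpendicular to it.
    angle = (0.5 * math.atan2(2 * gxy, gxx - gyy)) % math.pi
    s1, s2 = math.sqrt(l1), math.sqrt(l2)
    strength = s1  # larger for sharper edges
    # 1.0 means a single clear direction, 0.0 means no preferred direction.
    coherence = (s1 - s2) / (s1 + s2) if s1 + s2 > 0 else 0.0
    return angle, strength, coherence


def hash_key(patch: List[List[float]], angle_bins: int = 24,
             strength_edges: Tuple[float, ...] = (0.5, 2.0),
             coherence_edges: Tuple[float, ...] = (0.25, 0.5)) -> Tuple[int, int, int]:
    """Quantize the three features into a bucket index (bins and thresholds are hypothetical)."""
    angle, strength, coherence = patch_features(patch)
    a = min(int(angle / math.pi * angle_bins), angle_bins - 1)
    s = sum(strength > t for t in strength_edges)
    c = sum(coherence > t for t in coherence_edges)
    return a, s, c
```

With these settings, a flat patch lands in bucket `(0, 0, 0)`, while a sharp vertical and a sharp horizontal edge land in different angle bins but share the same strength and coherence bins, so each would be served by a different filter.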

In practice, RAISR selects the most relevant filter from its list of learned filters and applies it to each pixel neighborhood in the low-resolution image. When these filters are applied to the lower-quality image, they recreate details comparable in quality to the original high-resolution image, an improvement over linear, bicubic, or Lanczos interpolation.
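
Per-pixel filter selection amounts to a lookup followed by a weighted sum over the neighborhood. In this sketch, `bucket` is a deliberately simplified, hypothetical stand-in for RAISR's direction/strength/coherence hash, the filter values are made up rather than learned, and the input is assumed to be a 1D signal that has already been cheaply upsampled.

```python
from typing import Dict, List, Sequence


def bucket(patch: Sequence[float], edge_threshold: float = 0.5) -> str:
    """Hypothetical, much-simplified hash: 'edge' if the local slope is steep, else 'flat'."""
    slope = (patch[-1] - patch[0]) / (len(patch) - 1)
    return "edge" if abs(slope) > edge_threshold else "flat"


def apply_filters(signal: List[float], filters: Dict[str, List[float]], taps: int = 3) -> List[float]:
    """Filter each interior sample with the filter chosen for its neighbourhood."""
    r = taps // 2
    out = list(signal)  # border samples without a full neighbourhood are copied unchanged
    for i in range(r, len(signal) - r):
        # Neighbourhoods are always read from the input, so outputs never feed back.
        patch = signal[i - r:i + r + 1]
        h = filters[bucket(patch)]  # select the most relevant filter for this neighbourhood
        out[i] = sum(w * v for w, v in zip(h, patch))
    return out
```

For example, with a smoothing filter `[0.25, 0.5, 0.25]` for `"flat"` and a sharpening filter `[-0.5, 2.0, -0.5]` for `"edge"`, the step `[1.0, 1.0, 1.0, 4.0, 4.0, 4.0]` becomes `[1.0, 1.0, -0.5, 5.5, 4.0, 4.0]`: flat regions are unchanged and the step is steepened, with some overshoot. Real learned filters are fitted to training pairs rather than chosen by hand.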

To achieve this, RAISR's filters were trained on a database of 10,000 pairs of high- and low-resolution images, a process that took about an hour.

As a result, the technology could advance imaging more generally: from improving digital pinch-to-zoom to capturing, saving, or transmitting images at lower resolution and super-resolving them on demand without any visible degradation in quality, all while using less mobile data and storage.