Accidents are accidents. They're unfortunate incidents that happen unexpectedly and unintentionally, and may result in damage, injury or even death. If they were avoidable, they wouldn't be called "accidents."

While people still hold most of the control in the society we live in, machine intelligence is supporting or even entirely taking over ever more complex human activities at an ever-increasing pace.

One such activity is driving.

Self-driving cars, or autonomous cars, are vehicles capable of sensing their environment and navigating without human input. They can detect their surroundings using a variety of techniques such as radar, lidar, GPS, odometry and computer vision.

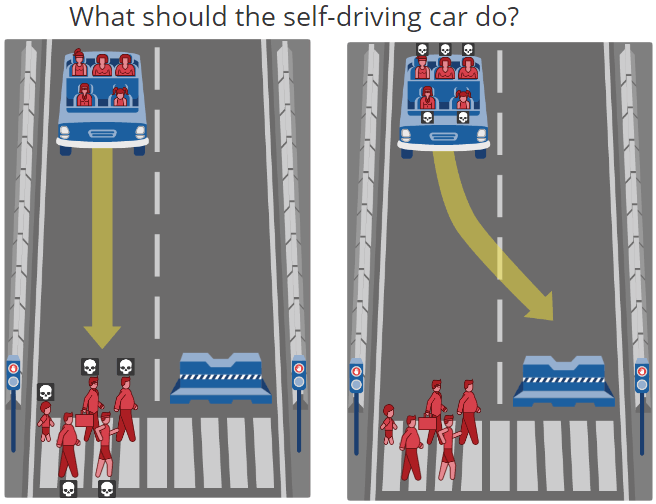

But accidents happen and will keep happening. As more and more companies experiment with self-driving cars, new questions arise about how these vehicles should respond in certain situations. Suppose, for example, that someone runs into the middle of the street. Should the car avoid the collision but kill the driver, or keep going and run over the pedestrian? This is an example of a moral dilemma.

When an accident is unavoidable in an ordinary car, the outcome depends on the driver's skills, knowledge of the car and reflexes, among other things. But if an accident is going to happen in an autonomous car and someone will certainly be killed, who should die?

To explore this question, researchers at the Massachusetts Institute of Technology (MIT) launched the "Moral Machine." Available on MIT's website, it asks people questions that are simple to pose but difficult to answer.

"The greater autonomy given machine intelligence in these roles can result in situations where they have to make autonomous choices involving human life and limb. This calls for not just a clearer understanding of how humans make such choices, but also a clearer understanding of how humans perceive machine intelligence making such choices."

MIT's Moral Machine aims to gather information about people's thinking by putting them in the shoes of the programmers who are responsible for training autonomous cars to deal with such scenarios.

"You will be presented with random moral dilemmas that a machine is facing. For example, a self-driving car, which does not need to have passengers in it. The car can sense the presence and approximate identification of pedestrians on the road ahead of it, as well as of any passengers who may be in the car."

In the Moral Machine's simulation, the car suffers a brake failure, and the questions ask whether a human life is more valuable than an animal's, or whether you would run down two criminals and two innocent people instead of killing the people inside the autonomous car, who are all innocent. There are also questions that ask whether the elderly are worth less than the young, or whether a female life is worth more than a male one.
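One way to picture the scenarios described above is as a pair of outcomes, each listing who would die, plus a tally of whom a respondent's choices ended up sparing. The sketch below is purely illustrative and is not how the Moral Machine itself is implemented; the names `Character`, `Outcome`, `Dilemma` and `tally_spared` are hypothetical and were made up for this example.

```python
from collections import Counter
from dataclasses import dataclass


# All classes and fields here are hypothetical, for illustration only.
@dataclass(frozen=True)
class Character:
    kind: str               # e.g. "human" or "animal"
    age_group: str = "adult"
    criminal: bool = False


@dataclass(frozen=True)
class Outcome:
    description: str
    casualties: tuple       # the Characters who die in this outcome


@dataclass(frozen=True)
class Dilemma:
    stay: Outcome           # the car keeps going straight
    swerve: Outcome         # the car changes course


def tally_spared(responses):
    """Count, per character kind, how many characters a respondent spared.

    `responses` is a list of (Dilemma, choice) pairs, where choice is
    "stay" or "swerve". Whoever would have died in the outcome that was
    NOT chosen counts as spared.
    """
    spared = Counter()
    for dilemma, choice in responses:
        if choice not in ("stay", "swerve"):
            raise ValueError(f"unknown choice: {choice!r}")
        rejected = dilemma.swerve if choice == "stay" else dilemma.stay
        for character in rejected.casualties:
            spared[character.kind] += 1
    return spared


# Example: going straight kills two pedestrians, swerving kills a dog.
dilemma = Dilemma(
    stay=Outcome("hit two pedestrians", (Character("human"), Character("human"))),
    swerve=Outcome("hit a dog", (Character("animal"),)),
)
print(tally_spared([(dilemma, "swerve")]))
```

A respondent who picks "swerve" here spares the two humans at the cost of the animal. Aggregating such tallies over many respondents is one plausible way to summarize preferences, though the tally itself says nothing about which choice is right.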

There is no correct answer to these questions. What the researchers at MIT want is to know how humans really feel, and how they want an autonomous car to respond to those once-in-several-lifetimes occurrences.

"As an outside observer, you judge which outcome you think is more acceptable," said the website.

Self-driving cars are certainly the future. Ever since humans started replacing human effort with computers, more and more technology has been developed to make computers do what humans can do.

Autonomous cars are equipped with an array of sensors that enable them to "see" where they are and "understand" the environment they're in. These sensors replace the human driver's senses. Autonomous cars think like computers, in bits and bytes, logically and faster than a human brain can, which lets them anticipate what is going to happen and decide what to do before it happens. So, in principle, any judgment should be programmable.

Unfortunately, that means the computers in autonomous cars would have to be held responsible for what they do, and that is an issue humans have to struggle with. While a car may have made the best decision based on the data its sensors provide, to humans, such cars will always be machines capable of killing without human input.

And that is an issue technology alone can't really solve.