No streaming media service on the internet has more influence than YouTube. With an active, growing community, policing everything on the platform is certainly difficult.

YouTube has announced an expansion of its policies around user harassment, which now cover "veiled or implied" threats, insults based on race, sexual orientation, or gender, and repeated attacks over time.

The update comes after many YouTubers called for more action against such behavior, which has long crossed the line.

According to YouTube's announcement on its official blog:

"Today we are announcing a series of policy and product changes that update how we tackle harassment on YouTube. We systematically review all our policies to make sure the line between what we remove and what we allow is drawn in the right place, and recognized earlier this year that for harassment, there is more we can do to protect our creators and community."

In detail, YouTube's updated harassment policy will:

- Prohibit veiled or implied threats, including content simulating violence toward an individual, and/or language suggesting physical violence may occur.

- Take action against content that maliciously insults someone based on protected attributes, such as their race, gender expression, or sexual orientation.

- Implement restrictions on accounts that repeatedly engage in harassing behavior that comes close to violating its harassment policy. YouTube may suspend such channels from the YouTube Partner Program, eliminating their ability to make money on YouTube. YouTube may also remove their content if they repeatedly harass someone, and may take more severe action, including issuing strikes or terminating a channel altogether.

In addition to these changes, YouTube is also implementing more stringent restrictions on comments, while also rolling out a new comment filter tool to some of its larger profiles.

"When we're not sure a comment violates our policies, but it seems potentially inappropriate, we give creators the option to review it before it's posted on their channel. Results among early adopters were promising - channels that enabled the feature saw a 75% reduction in user flags on comments. Earlier this year, we began to turn this setting on by default for most creators," said YouTube.

This means content creators should see more potentially toxic comments flagged for their manual review.

At the same time, YouTube is calling on creators to have their say on the COPPA regulations, which relate to content aimed at children.

According to YouTube, while the company supports the increased COPPA regulation to protect its younger users, the available guidelines have made it difficult for some YouTube content creators to understand what is and is not acceptable.

"We strongly support COPPA’s goal of providing robust protections for kids and their privacy. We also believe COPPA would benefit from updates and clarifications that better reflect how kids and families use technology today, while still allowing access to a wide range of content that helps them learn, grow and explore," explained YouTube.

YouTube hopes the changes will let it remain a place where people can express a broad range of ideas, while marking a step towards protecting the community as a whole.

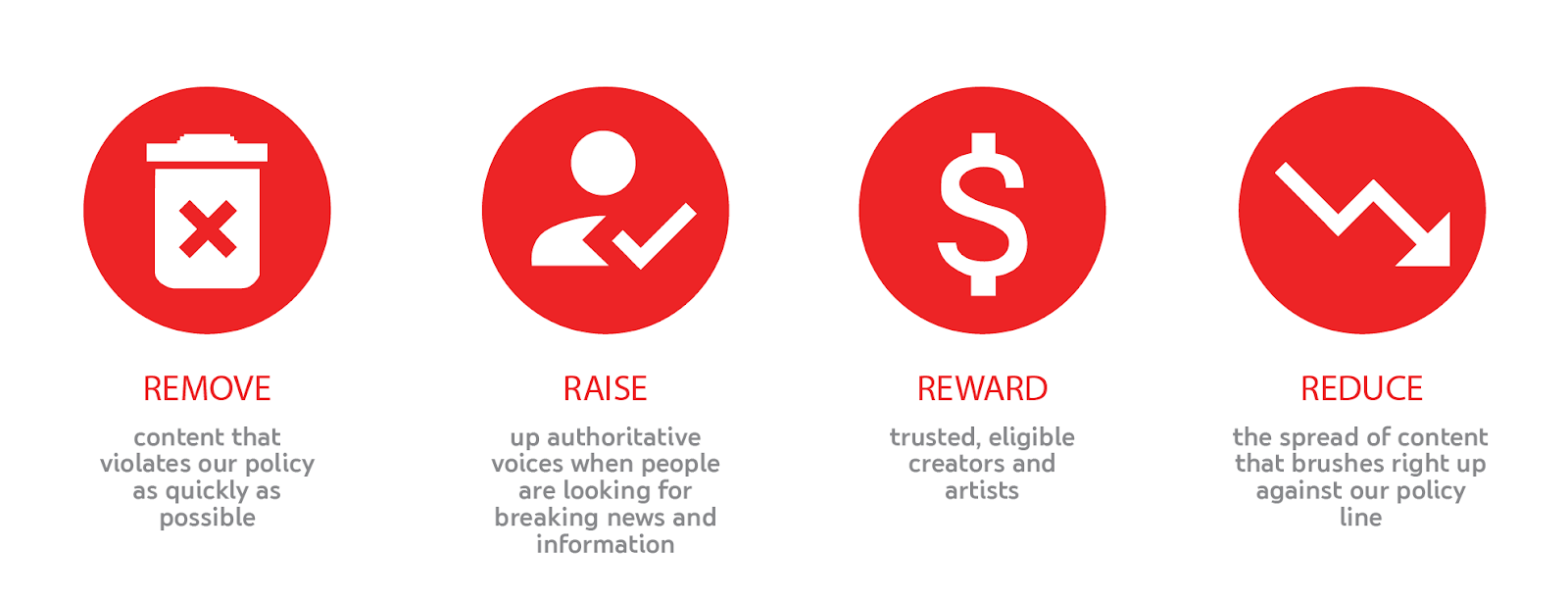

Previously, YouTube CEO Susan Wojcicki outlined how to defend YouTube's openness using what she calls "The Four R's".