An AI is only as good as the data it learned from. In theory, the more data, the better.

OpenAI has introduced its 'overhyped' GPT-3. With a capacity of 175 billion machine learning parameters, it is more than 100 times larger than the less capable but still smart GPT-2.

Simply put, GPT-3 is a monster of an AI system.

It can be used to train AIs capable of responding to almost any text prompt with unique and original responses.

But numbers are only numbers, as two researchers have shown.

Timo Schick and Hinrich Schütze, two AI researchers from the Ludwig Maximilian University (LMU) of Munich, have developed an AI-powered text generator that is capable of beating OpenAI's state-of-the-art GPT-3 in a benchmark test.

What makes it unique is that the text generator is bite-sized compared with GPT-3. The researchers created the AI using only a tiny fraction of GPT-3's parameter count: 223 million parameters, to be exact.

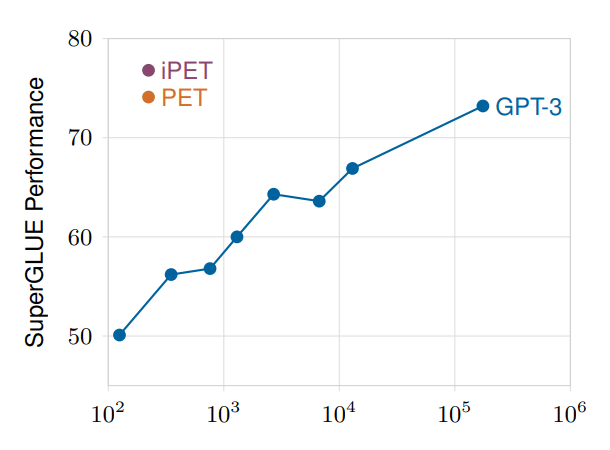

According to the researchers' preprint on arXiv, the AI outperforms GPT-3 on the SuperGLUE benchmark.

"This is achieved by converting textual inputs into cloze questions that contain some form of task description, combined with gradient-based optimization; additionally exploiting unlabeled data gives further improvements."

The researchers trained their model using pattern-exploiting training (PET), a method that reformulates tasks as cloze (fill-in-the-blank) questions and trains an ensemble of models for different reformulations.
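To make the idea of cloze reformulation more concrete, here is a minimal Python sketch. It is not the researchers' code: the two patterns, the `VERBALIZER` mapping, the `[MASK]` placeholder and the function names are all hypothetical, chosen only for illustration. The scores passed to `predict` stand in for what separately trained per-pattern models might output, and plain averaging is a simplification of how an ensemble could be combined.

```python
from typing import Callable, Dict, List

# Assumption: a generic mask placeholder; the real string depends on the tokenizer.
MASK = "[MASK]"

# Hypothetical patterns for an entailment-style task. Each one turns a
# (premise, hypothesis) pair into a cloze question with one masked slot.
PATTERNS: List[Callable[[str, str], str]] = [
    lambda premise, hypothesis: f'"{hypothesis}"? {MASK}. "{premise}"',
    lambda premise, hypothesis: (
        f"{premise} Question: {hypothesis} True or false? Answer: {MASK}."
    ),
]

# Hypothetical verbalizer: the word a language model would be expected
# to put in the masked slot for each label.
VERBALIZER: Dict[str, str] = {"entailment": "Yes", "not_entailment": "No"}


def to_cloze(premise: str, hypothesis: str) -> List[str]:
    """Return one cloze question per pattern."""
    return [pattern(premise, hypothesis) for pattern in PATTERNS]


def average_scores(per_pattern_scores: List[Dict[str, float]]) -> Dict[str, float]:
    """Combine label scores from several per-pattern models by averaging."""
    count = len(per_pattern_scores)
    labels = per_pattern_scores[0].keys()
    return {
        label: sum(scores[label] for scores in per_pattern_scores) / count
        for label in labels
    }


def predict(per_pattern_scores: List[Dict[str, float]]) -> str:
    """Pick the label with the highest averaged score."""
    averaged = average_scores(per_pattern_scores)
    return max(averaged, key=averaged.get)


if __name__ == "__main__":
    for question in to_cloze("It is raining.", "The ground is wet."):
        print(question)
    # Made-up scores standing in for two per-pattern models.
    made_up = [
        {"entailment": 0.8, "not_entailment": 0.2},
        {"entailment": 0.4, "not_entailment": 0.6},
    ]
    print(predict(made_up), "->", VERBALIZER[predict(made_up)])
```

Each pattern gives the model a different phrasing of the same task, which is why an ensemble over several patterns can be more robust than relying on a single one.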

The researchers then combined PET with a small pre-trained ALBERT model.

This allowed them to create an AI system capable of outperforming GPT-3 on SuperGLUE with 32 training examples, while requiring only 0.1% of its parameters.
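As a quick sanity check on that figure, the share can be worked out from the two parameter counts quoted in this article. The snippet below is only arithmetic; the constant and function names are made up for illustration.

```python
# Parameter counts as quoted in this article.
GPT3_PARAMS = 175_000_000_000
PET_ALBERT_PARAMS = 223_000_000


def parameter_share(small: int, large: int) -> float:
    """Fraction of the larger model's parameters used by the smaller one."""
    return small / large


share = parameter_share(PET_ALBERT_PARAMS, GPT3_PARAMS)
print(f"Share of GPT-3's parameters: {share:.3%}")       # 0.127%
print(f"GPT-3 is about {round(1 / share)} times larger")  # 785
```

The result, roughly 0.13%, is what gets rounded to the 0.1% quoted above.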

Parameters are variables used to tune and tweak AI models.

In theory, the more parameters a model has, the more it can learn from its data. This is believed to translate to smarter and more capable AIs.

Seeing a system with only 0.1% of the parameters beat the full-sized system in a benchmark test is indeed remarkable. But there is one big drawback.

The AI system won't be capable of outperforming GPT-3 in every task. This is because the system was trained with less training material, meaning that it should be less capable in broader usage.

But it does open new avenues for researchers who want to venture into AI using more modest hardware.

Back in 2019, researchers found that training a common large AI model can emit as much carbon as five cars over their lifetimes. In other words, training AI requires a huge amount of resources. The process takes time and is also expensive.

With this approach by the two researchers from the Ludwig Maximilian University, people can have a greener way of training AI, one that leaves a smaller carbon footprint.

Following their research, Timo Schick and Hinrich Schütze proposed "a simple yet effective modification to PET" that enables AI systems to predict multiple tokens.

And to enable comparisons with their work, the two have made their dataset of few-shot training examples publicly available.