With the help of AIs, computers can go beyond their original programming and awe even their developers.

As hardware, software and algorithms advance, AIs have become significantly smarter. Deepfakes, which first appeared on Reddit, have become both the hype and the benchmark of AI-created fakery.

At first, it was fairly easy for keen eyes to spot deepfakes and tell them apart from real footage, because AI-made flaws were certain to appear.

But sometimes, it can be better to leave the hard job to AIs.

Pitting AI against AI, Intel has added another weapon to its arsenal.

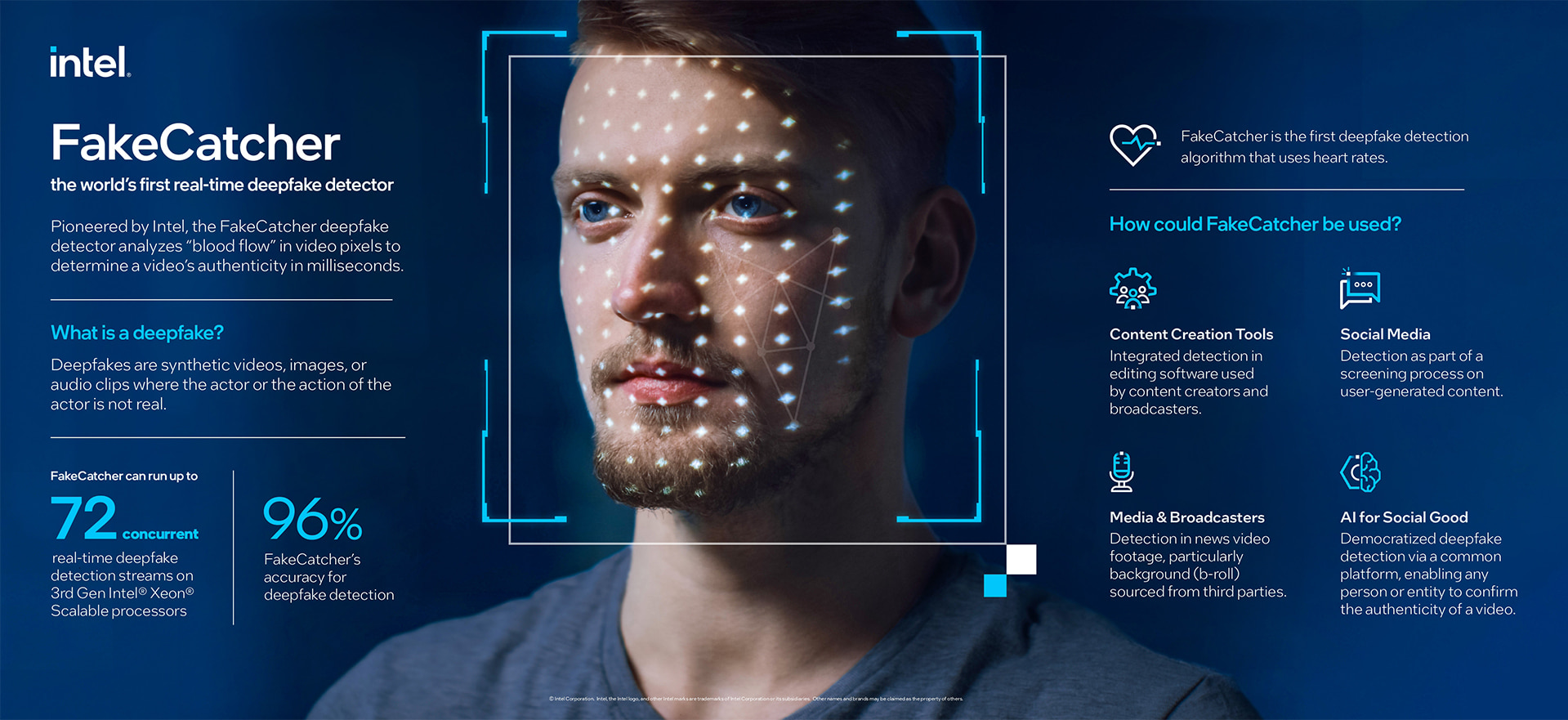

Called 'FakeCatcher', the AI from the tech giant is said to spot deepfakes with 96% accuracy, in real time.

Thanks to AIs, deepfakes have littered the internet with fake depictions of people.

What began as people trying to put celebrities' faces onto porn stars' bodies became a way to create revenge porn and other content that can be even more sinister.

Making things worse, deepfakes can also create scarily accurate impressions of politicians and world leaders doing and saying things they never did or said.

With the ever-growing number of images and videos of people uploaded to the internet, it's fairly easy to create deepfakes of almost anyone.

The more famous the person is, the easier it is to impersonate them with AI.

FakeCatcher from Intel tries to catch deepfakes in a way that has never been done before, and that is by "assessing what makes us human— subtle 'blood flow' in the pixels of a video," according to its press release.

"When our hearts pump blood, our veins change color. These blood flow signals are collected from all over the face and algorithms translate these signals into spatiotemporal maps. Then, using deep learning, we can instantly detect whether a video is real or fake."

In other words, the technology is able to identify the changes in vein color that occur as blood circulates through the body.

Signals of blood flow are then collected from the face and translated by algorithms to discern if a video is real or a deepfake.

The idea is that a video that doesn't exhibit the subtle cues of blood flow fails the test and is exposed as inauthentic.
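
To make the idea more concrete, here is a minimal Python sketch of the first step only: turning a face video into a map of per-region color signals over time. It is not Intel's code. The function names, the 2x2 grid and the synthetic test frames are all assumptions made for illustration, and the deep learning classifier that would read the map is left out.

```python
from math import sin, pi


def spatiotemporal_map(frames, grid=2):
    """Build a (regions x frames) map of mean green intensity.

    frames: list of 2D lists of green-channel values, all the same size,
    assumed to be already cropped to the face (hypothetical input format).
    """
    height, width = len(frames[0]), len(frames[0][0])
    cell_h, cell_w = height // grid, width // grid
    rows = []
    for gy in range(grid):
        for gx in range(grid):
            signal = []
            for frame in frames:
                total = 0.0
                for y in range(gy * cell_h, (gy + 1) * cell_h):
                    for x in range(gx * cell_w, (gx + 1) * cell_w):
                        total += frame[y][x]
                signal.append(total / (cell_h * cell_w))
            rows.append(signal)  # one time series per face region
    return rows


def pulse_amplitude(signal):
    """Peak-to-peak swing of one region's signal after removing its mean."""
    mean = sum(signal) / len(signal)
    centered = [value - mean for value in signal]
    return max(centered) - min(centered)


def synthetic_frames(n_frames, size=4, fps=30.0, bpm=72.0, swing=1.0):
    """Illustrative test data: a flat 'face' whose green level pulses at bpm."""
    frames = []
    for t in range(n_frames):
        level = 120.0 + swing * sin(2 * pi * (bpm / 60.0) * t / fps)
        frames.append([[level] * size for _ in range(size)])
    return frames


# Two seconds of video: one with a pulse-like signal, one without.
live_map = spatiotemporal_map(synthetic_frames(60, swing=1.0))
flat_map = spatiotemporal_map(synthetic_frames(60, swing=0.0))
print(pulse_amplitude(live_map[0]), pulse_amplitude(flat_map[0]))
```

In this toy setup, the pulsing video produces a visible swing in every region while the flat one produces none. A real detector would face much noisier signals, which is why the press release describes feeding the maps to a deep learning model rather than applying a simple threshold.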

Other methods have previously been developed to spot deepfakes.

For example, there is one AI that can catch fakes by asking the subject to turn their face sideways. Then, there is an AI that can find lurking deepfakes by analyzing the eyes, and another one that looks closely at individual pixels.

Although many deepfakes are obviously fake, most people cannot reliably tell deepfakes from real footage, especially when they're shared on social media.

Casual internet users don't take the time to find out if a video is real or fake.

And by the time a deepfake garners millions of shares, it's far too late.

This AI from Intel is another way to spot deepfakes wherever they appear.

With so many deepfakes on the internet, it's becoming increasingly important to have software that can identify them and help avoid harmful consequences.

Intel is trying to help, and its novel approach is creating a gap that keeps detectors at least one step ahead.

Read: Deepfakes And Deepfake Detectors: The War Of Concealing And Revealing Faces