For images to look consistent when shown alongside others, they need to share a common size, so they have to be resized or cropped. But that raises a problem: how do you know which part to crop?

One good example is when you're taking a selfie.

Do you want people to see your face as the key element in the picture, or the background, which might be, say, a breathtaking view? Humans can easily tell which one to focus on, but computers have a hard time doing so.

This happens on Twitter.

To keep things consistent, Twitter crops images, and it has been using face detection to focus the crop on the face only.

So if you have a background to show off, Twitter will crop the tweeted image with your face as the main point of interest, not the background. And things can get even weirder, as Twitter explained:

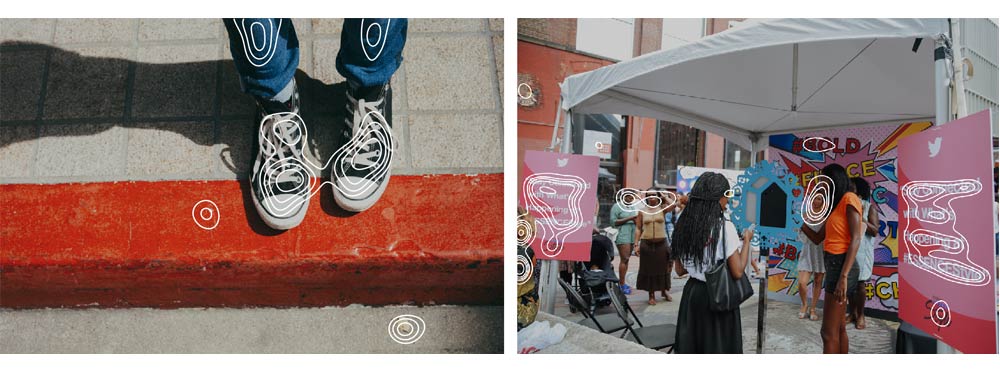

Here, Twitter is improving it. As shown in the image above, the updated algorithm (left) has a new way of cropping that uses a neural network to predict the most interesting part of an image.

To do this, the system focuses more on 'salient' regions of an image.

Salient means more noticeable or important. In an image, a salient region is one that is likely to attract the eye. Academics have learned to measure saliency using eye trackers, and discovered that people tend to focus on faces, text, and animals, as well as objects or regions with high contrast.

"The basic idea is to use these predictions to center a crop around the most interesting region," said Twitter.

Using this information, Twitter trained its AI to predict what people might want to look at, and improved its image cropping process.
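The idea of centering a crop on the most interesting region can be illustrated with a minimal sketch. This is not Twitter's implementation: the function name `crop_around_saliency`, the use of a plain grid of scores as the saliency map, and the choice to center on the single highest-scoring cell are all assumptions made for illustration.

```python
from typing import List, Tuple


def crop_around_saliency(
    saliency: List[List[float]], crop_w: int, crop_h: int
) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a crop_w x crop_h window.

    The window is centered on the most salient cell, then shifted
    just enough to stay inside the map (hypothetical helper).
    """
    height = len(saliency)
    width = len(saliency[0])
    if crop_w > width or crop_h > height:
        raise ValueError("crop window is larger than the saliency map")

    # Locate the cell with the highest predicted saliency.
    best_y, best_x = max(
        ((y, x) for y in range(height) for x in range(width)),
        key=lambda pos: saliency[pos[0]][pos[1]],
    )

    # Center the window on that cell and clamp it to the map bounds.
    left = min(max(best_x - crop_w // 2, 0), width - crop_w)
    top = min(max(best_y - crop_h // 2, 0), height - crop_h)
    return left, top, left + crop_w, top + crop_h


# A coarse 6x4 saliency map with its peak near the right edge.
saliency_map = [
    [0.0, 0.1, 0.0, 0.2, 0.3, 0.1],
    [0.0, 0.1, 0.1, 0.4, 0.9, 0.2],
    [0.0, 0.0, 0.1, 0.2, 0.3, 0.1],
    [0.0, 0.0, 0.0, 0.1, 0.1, 0.0],
]
print(crop_around_saliency(saliency_map, crop_w=3, crop_h=2))  # (3, 0, 6, 2)
```

Because the window is clamped rather than padded, a peak near an edge still yields a full-size crop that stays inside the image.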

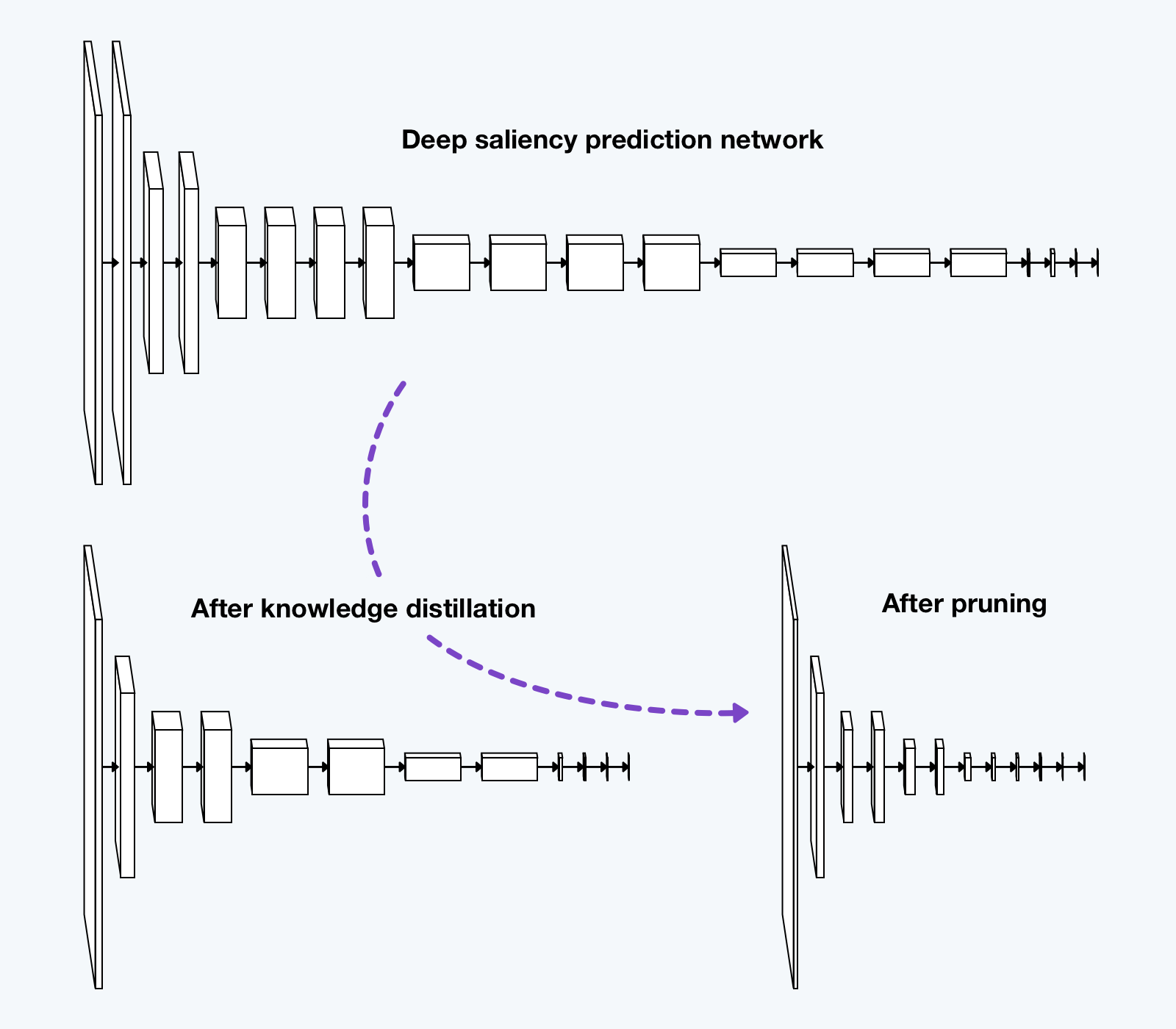

Thanks to advances in machine learning, saliency prediction has gotten a lot better. However, the neural networks used to predict saliency are too slow to run in production: the AI needs to process every single image uploaded, which sacrifices the ability to crop images in real time.

However, Twitter said that it doesn't need fine-grained, pixel-level predictions, because it only needs to know roughly where the most salient regions are. In addition to optimizing the neural network’s implementation, Twitter uses two techniques to reduce its size and computational requirements.

The first is knowledge distillation, which trains a smaller network to imitate the slower but more powerful network. An ensemble of large networks is used to generate predictions on a set of images. These predictions, together with some third-party saliency data, can then be used to train a smaller, faster network.
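As a toy illustration of knowledge distillation, the sketch below averages the predictions of an ensemble of "teacher" functions and fits a small "student" to those averaged targets. Everything here is an assumption made for illustration: the teachers are simple stand-in functions rather than neural networks, the student is a one-variable linear model, the loss is mean squared error, and the third-party saliency data mentioned above is left out.

```python
from typing import Callable, List, Sequence, Tuple


def ensemble_targets(
    teachers: Sequence[Callable[[float], float]], inputs: Sequence[float]
) -> List[float]:
    """Average the teachers' predictions to get one soft target per input."""
    return [sum(t(x) for t in teachers) / len(teachers) for x in inputs]


def train_student(
    inputs: Sequence[float],
    targets: Sequence[float],
    lr: float = 0.1,
    epochs: int = 2000,
) -> Tuple[float, float]:
    """Fit student(x) = w * x + b to the targets by gradient descent on MSE."""
    w, b = 0.0, 0.0
    n = len(inputs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - t) * x for x, t in zip(inputs, targets)) / n
        grad_b = sum(2 * (w * x + b - t) for x, t in zip(inputs, targets)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Stand-ins for large, slow networks (hypothetical).
teachers = [lambda x: 0.5 * x + 0.1, lambda x: 0.7 * x + 0.3]
inputs = [0.0, 0.25, 0.5, 0.75, 1.0]

targets = ensemble_targets(teachers, inputs)
w, b = train_student(inputs, targets)
print(round(w, 3), round(b, 3))  # 0.6 0.2
```

The student never sees ground-truth labels here, only the ensemble's output, which is the core of the technique: the expensive models are needed at training time but not when serving predictions.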

Through a combination of knowledge distillation and the pruning technique described below, Twitter should be able to substantially reduce the size of its saliency prediction network.

The second is a pruning technique to "iteratively remove feature maps of the neural network which were costly to compute but did not contribute much to the performance."

To decide which feature maps to prune, Twitter computes the number of floating-point operations required for each feature map and combines that with an estimate of the performance loss that removing it would cause.
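The selection step can be sketched as follows. The article does not say how the two quantities are combined, so the score used here (estimated loss divided by FLOPs, with the lowest score pruned first) is an assumption, as are the `FeatureMap` class, the `prune_iteratively` function, and the idea of stopping at a FLOP budget.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FeatureMap:
    name: str
    flops: float     # floating-point operations needed to compute this map (> 0)
    est_loss: float  # estimated performance loss if this map is removed


def prune_iteratively(maps: List[FeatureMap], flop_budget: float) -> List[str]:
    """Remove one feature map at a time until total FLOPs fit the budget.

    Assumed score: est_loss / flops, so maps that are expensive but
    contribute little are removed first. Returns names in removal order.
    """
    remaining = list(maps)
    removed: List[str] = []
    while remaining and sum(m.flops for m in remaining) > flop_budget:
        victim = min(remaining, key=lambda m: m.est_loss / m.flops)
        remaining.remove(victim)
        removed.append(victim.name)
        # A real pipeline would likely re-estimate est_loss for the
        # remaining maps here; this sketch keeps the estimates fixed.
    return removed


feature_maps = [
    FeatureMap("A", flops=100, est_loss=0.01),
    FeatureMap("B", flops=400, est_loss=0.02),
    FeatureMap("C", flops=50, est_loss=0.05),
]
print(prune_iteratively(feature_maps, flop_budget=200))  # ['B']
```

Map B is removed first because it costs the most to compute relative to the accuracy it is estimated to provide, which matches the stated goal of dropping feature maps that are "costly to compute but did not contribute much to the performance."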

According to Twitter, these two methods allow it to crop media 10 times faster. They also allow Twitter to perform saliency detection on all images as soon as they are uploaded and crop them in real time.

Twitter is rolling out this feature on Twitter.com, as well as in its iOS and Android apps.