At any given moment, people are using the more and more advanced technologies to improve product experience.

In the gaming industry for example, developers are always on the move to make things a bit closer to reality. The goal is to create a digital world that resembles the real world, so real that people can hardly see the difference.

With the many methods available, Nvidia has its own way, and that is by using AI which can turn video into virtual landscape.

At the NeurIPS AI conference in Montreal, the company uses its own DGX-1 supercomputer, powered by Tensor Core GPUs to convert videos captured from a self-driving car’s dash camera.

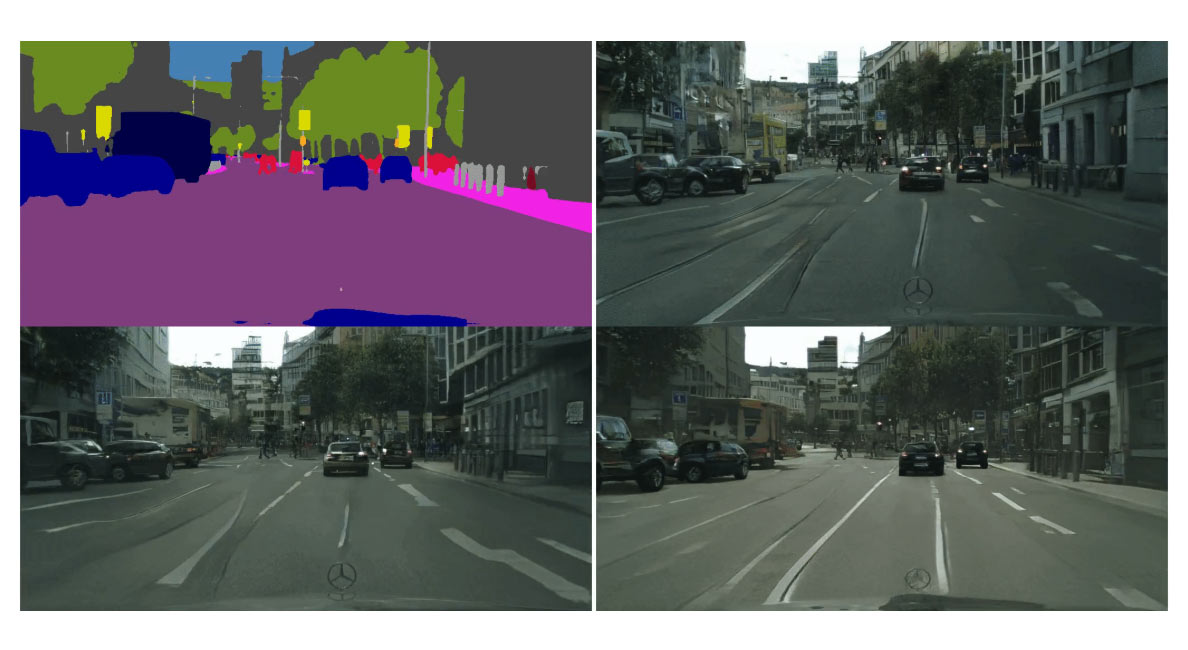

The high-level semantic map generated by the machine is then extracted using a neural network.

Running it through the Unreal Engine 4, a game engine developed by Epic Games, the researchers were able to generate high-level colorized frames.

Nvidia then uses its AI to convert those representations into real images, which end results can be used by developers to ease their work.

"Nvidia has been creating new ways to generate interactive graphics for 25 years – and this is the first time we can do this with a neural network," said Vice President of Applied Deep Learning at Nvidia, Bryan Catanzaro.

"Neural networks – specifically – generative models are going to change the way graphics are created."

And when it comes to Nvidia, a company popular for designing graphics processing units for the gaming and professional markets, this would enable content creators to explore more possibilities by generating new things from standard videos, automatically.

At the time of the announcement, this technology is still under development by Nvidia, and requires a supercomputer, which not many developers have.

In a paper titled Video-to-video synthesis, Nvidia detailed how it uses AI to generate photorealistic video that precisely depicts the content of the source video.

Nvidia said that previous technologies can indeed render photorealistic output. But their resulting videos lacked the temporal coherence. In this case, "the road lane markings and building appearances are inconsistent across frames."

Nvidia's approach also produces photorealistic output which is comparable to others, but with a more temporally consistent video output.

"We can generate 30-second long videos, showing that our approach synthesizes convincing videos with longer lengths," said Nvidia.

To do this. Nvidia created three variants for the AI training process.

The first variant doesn't use the foreground-background colors. The second variant has no conditional video discriminator. And in the last variant, Nvidia removes the optical flow prediction network, and uses human preference score instead.

Nvidia found that the visual quality of output videos degrades significantly without the ablated components.

To evaluate the effectiveness of different components, Nvidia experimented with directly using ground truth flows instead of estimated flows, and found that the results are very similar.

And by using semantic manipulation, Nvidia's approach allows developers to manipulate the semantics of source videos to create the output result.

What Nvidia did here, is presenting a general video-to-video synthesis framework based on conditional GANs.

GAN is a class of artificial intelligence algorithms used in unsupervised machine learning. It uses a system in which two neural networks: the first generates the candidates, and the second evaluates them. The two neural networks are pitted against each other in a zero-sum game framework.

"Through carefully-designed generators and discriminators as well as a spatio-temporal adversarial objective, we can synthesize high-resolution, photorealistic, and temporally consistent videos," said Nvidia.

However, the approach do have some weaknesses. For example, the model struggled when synthesizing turning cars due to insufficient information in label maps. This could be potentially solved by adding additional 3D cues, such as depth maps.

Nvidia's approach also cannot guarantee that an object has a consistent appearance across the whole video. For example, a car may change its color gradually.

But still, this advancement in AI tech follows Nvidia's previous work, and would enable developers and artists create virtual contents in a much lower cost than ever before.