Cloudflare is a popular U.S.-based cloud company that provides content delivery network (CDN) services, distributed denial-of-service (DDoS) mitigation, internet security, and distributed domain name server services.

On the one hand, Cloudflare’s cybersecurity services can protect a server from attacks. On the other hand, that same protection can be used by websites hosting child abuse material and revenge porn to conceal their true IP addresses. This makes it hard for investigators to find and remove illegal content on the web.

That is according to campaigners from Battling Against Demeaning & Abusive Selfie Sharing (BADASS). They say that Cloudflare makes it easier for its clients to evade detection by “hiding” the locations of the sites sharing the illicit content.

According to L1ght, an Israel-based company working to provide a safe online environment for children, Cloudflare has been found to provide its services, including its CDN, to websites that host Child Sexual Abuse Material (CSAM).

L1ght estimated that Cloudflare’s CDN hosts tens to hundreds of such websites.

A Canadian non-profit that fights child sexual abuse also investigated and found at least three websites that had been sharing CSAM while using Cloudflare since 2017. Overall, the records showed that more than 130,000 child-sexual-abuse reports were made about roughly 1,800 sites that Cloudflare protected.

And as of December 2019, Cloudflare was said to have offered its services to 450 sites with CSAM.

Cloudflare responded to this finding, saying that CSAM is repugnant and illegal and that the company doesn’t tolerate it.

These incidents weren't new for Cloudflare. As a matter of fact, the company has what it calls the 'CSAM Scanning Tool', which is free to use for all Cloudflare users.

This tool allows webmasters and website owners to proactively identify and take action on any CSAM located on their websites. It should be especially handy for websites that allow user-generated content, such as discussion forums.

The tool "will check all internet properties that have enabled CSAM Scanning for this illegal content," and will then "send a notice to you when it flags CSAM material, block that content from being accessed".

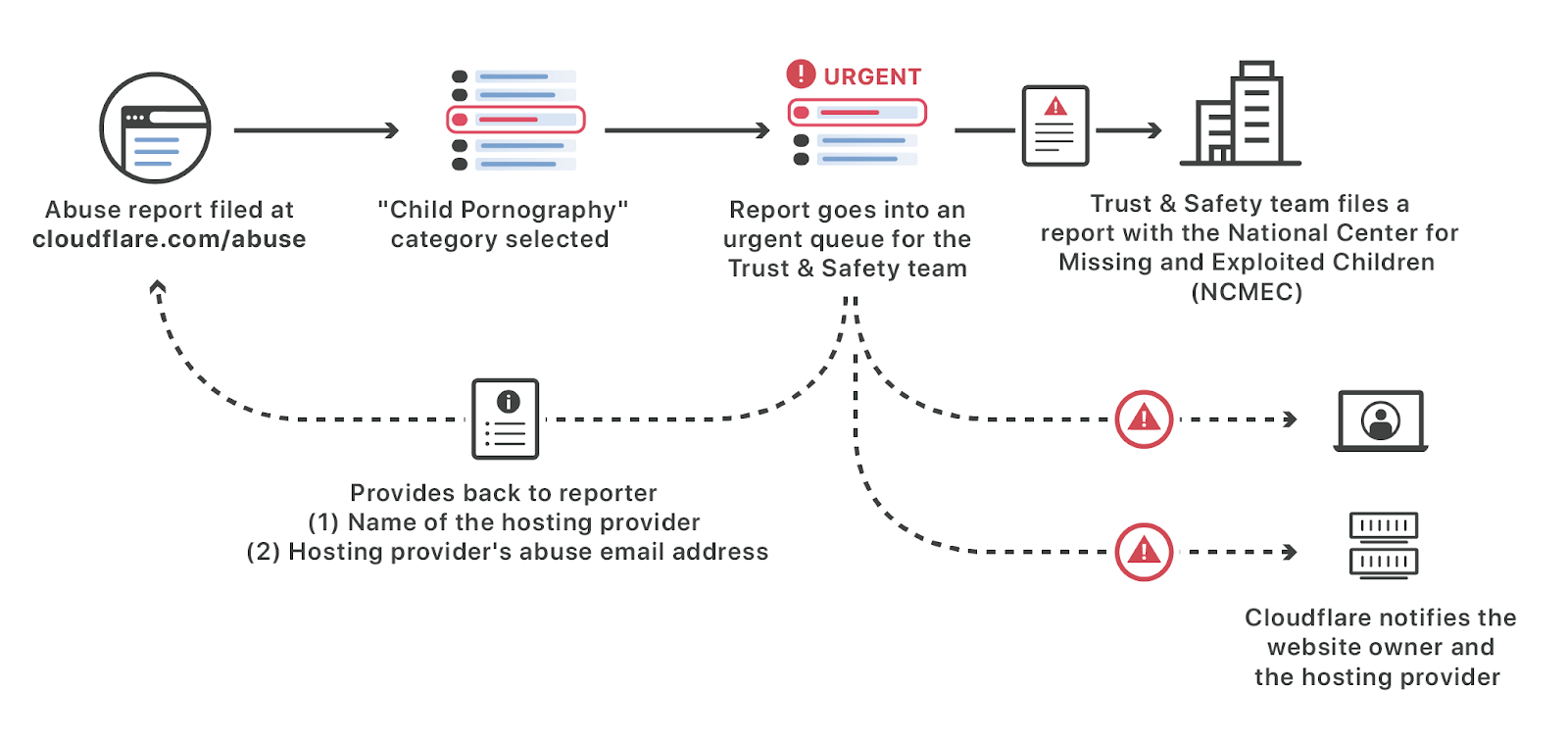

Previously, Cloudflare said that it had provided 5,208 reports to the National Center for Missing & Exploited Children (NCMEC) and removed 5,428 domains from its service to date.

Although Cloudflare's CSAM Scanning Tool is capable of identifying child exploitation material, some websites sharing it were still up and running on Cloudflare's services, meaning that the tool couldn't catch them all.

This indicates that Cloudflare is struggling to keep those websites from using its services.

The company insists that it is powerless because it does not actually host the offending sites. Campaigners, however, maintain that Cloudflare's services make it easier for clients to avoid detection by "hiding" their locations.

Cloudflare lawyer Doug Kramer said that Cloudflare “worked hard to understand our role and what we could do to help, despite the fact that we don’t host content and aren’t in a position to remove content from the internet.”

Previously, the company also came under fire after it was accused of inadvertently helping extremists spread their hate and propaganda, and it was caught providing its services to 8chan, an internet forum used to celebrate mass shootings and spread so-called "manifestos".

As for the latter, losing Cloudflare's protection left 8chan vulnerable to a DDoS attack, in which its website was bombarded with traffic that overwhelmed its servers, rendering it inaccessible. This happened within minutes of Cloudflare's service being withdrawn.