Internet tech companies are used to keeping their secrets to themselves. They tend to hide the ingredients that power the magic behind the curtain. But as the internet grows, these companies are changing. Resources are no longer the main way to thrive; scaling is.

In terms of AI, deep learning is one of the main ingredients that power some of the tech companies' magic. Deep learning has been used to identify images, recognize spoken words, translate languages, personalize the user experience, and more. While AI and its deep learning capabilities are certainly the "brain" behind some big tech companies, scaling is becoming more crucial, and to scale they need to unveil their secrets to the world.

Facebook announced on December 10th, 2015, that it is open-sourcing the designs of the computer servers it built to run the latest AI algorithms.

Besides Facebook, the other big player is Google. The search giant has open-sourced TensorFlow so anyone can use it. Facebook is pursuing a similar strategy and is also releasing its AI design for everyone to use.

Google's AI, TensorFlow, is pretty much what's behind the company's products. From Search to Maps, its AI helps the company recognize inputs and commands. Facebook's AI, on the other hand, powers its DeepFace face recognition, chooses the most relevant content for News Feed, delivers answers through the M digital assistant, and more.

By bringing their AI to the public, both companies are trying to figure out how people engage with their services, and to refine how deep learning can help humans by holding real-life conversations using common sense.

While the AI can run on traditional processors, Facebook, Google, and Baidu have found that their neural networks are far more efficient if they shift much of the computation onto GPUs.

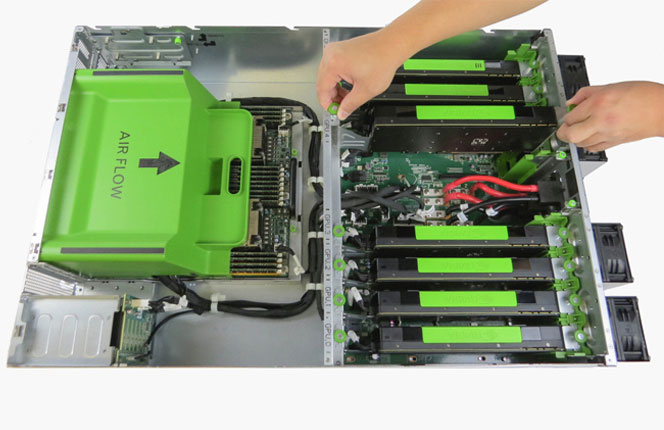

Codenamed Big Sur, Facebook's machine is packed with eight GPU (graphics processing unit) boards, each loaded with dozens of chips while consuming only about 300W of power. While these chips were originally designed to render images for computer games and other highly graphical applications, they have proven well suited to deep learning. The chips are now used to run neural networks: vast networks of machines that work in a way similar to the human brain.

The neural network behind the AI works by "deep learning" things. It thrives on data, and it needs more than enough information to learn to recognize photos and do what it has to do. With GPUs, these networks can analyze data more quickly than when they run on traditional processors. In general, GPUs give more computational throughput per dollar than ordinary CPUs.
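To make the GPU advantage a little more concrete, here is a minimal sketch of a single neural network layer in plain Python. It is an illustration only, not Facebook's or Google's code; the function name `dense_layer` and the sample numbers are made up for this example. The point to notice is that every output value is computed independently of the others, which is the kind of work a GPU can spread across many cores at once.

```python
def dense_layer(inputs, weights, biases):
    """One fully connected layer: out[j] = relu(sum_i inputs[i] * weights[i][j] + biases[j])."""
    outputs = []
    # Each pass of this outer loop is independent of the others,
    # so parallel hardware could compute all outputs at the same time.
    for j in range(len(biases)):
        total = biases[j]
        for i in range(len(inputs)):
            total += inputs[i] * weights[i][j]
        outputs.append(max(0.0, total))  # ReLU activation: negative sums become 0
    return outputs


# Hypothetical toy values: 2 inputs feeding 3 outputs.
inputs = [1.0, 2.0]
weights = [[0.5, -1.0, 0.0],
           [0.25, 0.5, 1.0]]
biases = [0.0, -1.0, 0.5]

print(dense_layer(inputs, weights, biases))  # [1.0, 0.0, 2.5]
```

A real network repeats this step across millions of weights and many layers, which is why hardware built for parallel arithmetic pays off.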

Facebook designed the machine in tandem with Quanta, a Taiwanese manufacturer, and Nvidia, a chip maker specializing in GPUs. Traditionally, businesses went straight to better-known companies such as Dell, HP, and IBM for their servers. But Facebook and others have found that they can save money by designing their systems in tandem with Asian manufacturers.

Big Sur has been in development for 18 months. The system, which leverages Nvidia's Tesla M40 Accelerated Computing Platform and a wide range of PCI-e cards, is twice as fast as the previous system Facebook used to train its neural networks.

Tech in Giving Away Secrets

While a secret stays a secret only if it is kept hidden, tech companies are approaching their goals the other way around. It seems odd at first to see them revealing the technologies behind their empires, but by doing this, they believe they can accelerate their work on new breakthroughs.

"Although machine learning (ML) and artificial intelligence (AI) have been around for decades, most of the recent advances in these fields have been enabled by two trends: larger publicly available research data sets and the availability of more powerful computers - specifically ones powered by GPUs. Most of the major advances in these areas move forward in lockstep with our computational ability, as faster hardware and software allow us to explore deeper and more complex systems," said Facebook.

Released as open source, the AI can be used and developed by many people: from ordinary end users to other companies, from researchers to scientists, and even competitors. While this may hurt both Google's and Facebook's business, their aim is not to guard resources or to compete using a home-baked recipe, but to scale up and to create new ideas beyond what was once possible.

Open-sourcing the projects makes it possible for communities and researchers to improve them. Google and Facebook can develop and scale up by themselves, but with more people working on the same project, the impact will be much more significant.

"There is a network effect. The platform becomes better as more people use it," said Yann LeCun, a founding father of deep learning. "The more people that rally to a particular platform or standard, the better it becomes - the more people contribute."

On Facebook's side, the company is already a social giant and one of the most widely used services on the web. Giving its AI to others will benefit both Facebook and the people who use it, and it gives the company added leverage in recruiting and retaining talent.

"Our commitment to open-source is something that individuals who work here are passionate about," said Serkan Piantino, an Engineering Director in Facebook's AI group. "Having that be a part of our culture is a benefit when it comes to hiring."

Open-Source: The New Currency

Microsoft and Apple were famous for sticking to their principle of keeping secret ingredients secret. While Microsoft is slowly changing to meet current demand by opening itself to the flexibility of the open-source world, on the internet, especially among the largest services, open-source software is already what those services mainly run on.

Open-source is the currency of developers. The more projects that are freely available for them to use, the more flexible and the easier it becomes to develop other projects built on top of them.

Open-source is like people sharing their thoughts and ideas. In the closed-source world, developers have little to no room to improve. As tech companies shift their projects to open source, they are breeding more streamlined and better-suited operations.

Facebook began sharing important technology in 2011, when the company started sharing its hardware designs through the Open Compute Project (OCP). In January 2015, the company continued the effort by releasing its AI tools as open source.

As Facebook has grown its dominance on the web, the only service that is on par with it, if not ahead of it, is Google. Since Google is already open-sourcing its AI, Facebook is doing the same thing to compete.

Google's open-sourcing of TensorFlow has made some big headlines. To continue attracting top talent, Facebook must keep pace.

Since deep learning and its GPUs hunger for data, open-sourcing Big Sur gives Facebook a huge benefit. First, development will speed up because more contributors are lending a hand. Second, the more people use it, the more data is processed, increasing the potential of deep learning. And last, it saves resources: as more companies use the design, manufacturers can build the machine at a lower cost.

In the bigger picture, it will accelerate the evolution of deep learning as a whole, including both the software and the hardware industries.

Facebook is not the only company starting to reveal its hardware and software secrets. Many other companies are sharing their hardware designs as well.

Further reading: Google and Facebook: When Computers Dream, Humans Are Fooled