Packing more data into ever-smaller spaces has long been a goal for many computer scientists.

Programmers and researchers are constantly looking for ways to improve existing standards, or to create better ones, without compromising quality. One such area is digital image compression, where the aim is to keep image quality high while making files smaller.

In April, researchers from The University of Texas at Austin, Sheng Cao, Chao-Yuan Wu, and Philipp Krahenbuhl, published a paper proposing a compression method based on super-resolution.

Super-resolution refers to a family of techniques that reconstruct a higher-resolution image from a low-resolution one.

The researchers' idea is to increase resolution without losing any information, and to use this to compress images losslessly.

The researchers called the method 'Super-Resolution based Compression', or SReC.

"We propose Super-Resolution based Compression (SReC), which relies on multiple levels of lossless super-resolution. We show that lossless super-resolution operators are efficient to store, due to the natural constraints induced by the super-resolution setting."

Lossless image compression reduces the size of an image without discarding any data or detail.

In other words, the image loses no quality when compressed: decompressing it restores the original pixels exactly.

PNG, for example, is a lossless format.
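To make "lossless" concrete, here is a minimal Python sketch of a lossless round trip. It uses the standard-library `zlib` module, which implements DEFLATE, the same algorithm PNG uses internally. This is not SReC, and the synthetic gradient "image" is a hypothetical example chosen only for illustration.

```python
import zlib

# Hypothetical 64x64 grayscale "image": one byte per pixel, a simple diagonal gradient.
width, height = 64, 64
pixels = bytes((x + y) % 256 for y in range(height) for x in range(width))

# Compress with DEFLATE at maximum effort, then decompress.
compressed = zlib.compress(pixels, level=9)
restored = zlib.decompress(compressed)

# Lossless means the round trip reproduces every byte exactly.
print(restored == pixels)  # True
print(len(pixels), "bytes raw ->", len(compressed), "bytes compressed")
```

The equality check is the whole point: a lossy codec such as JPEG would fail it, while a lossless one must always pass it, however much or little it manages to shrink the data.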

The researchers proposed a model that uses super-resolution to compress a high-resolution image. The model first stores a low-resolution copy of the input image as raw pixels. It then applies three levels of lossless super-resolution models, each helping to encode the next, higher-resolution version of the image, so that the full image can be stored in fewer bits.

This way, the method stores the small, low-resolution image cheaply and then relies on the super-resolution models to keep the cost of encoding the high-resolution image low.
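The multi-level structure can be pictured as a resolution pyramid. The minimal Python sketch below assumes that each level halves the width and height by averaging 2x2 blocks. That averaging scheme is an assumption for illustration, since the article does not say how SReC downsamples. The sketch builds three levels, matching the three super-resolution stages described above. Only the smallest level would be stored as raw pixels. The neural networks that encode each larger level from the one below it are not shown.

```python
def downsample_2x(img):
    """Halve width and height by averaging each 2x2 block (floor of the mean).

    `img` is a list of rows of integer pixel values; an odd trailing row or
    column is dropped. This averaging scheme is an illustrative assumption.
    """
    h, w = len(img), len(img[0])
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) // 4
            for x in range(0, w - 1, 2)
        ]
        for y in range(0, h - 1, 2)
    ]


def build_pyramid(img, levels=3):
    """Return the image followed by `levels` successively halved copies.

    The last entry is the low-resolution base that would be stored as raw pixels.
    """
    pyramid = [img]
    for _ in range(levels):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid


# Hypothetical 64x64 grayscale test image.
image = [[(x * y) % 256 for x in range(64)] for y in range(64)]
pyramid = build_pyramid(image, levels=3)

for level in pyramid:
    print(f"{len(level[0])}x{len(level)}")  # 64x64, 32x32, 16x16, 8x8

base = pyramid[-1]
fraction_raw = (len(base) * len(base[0])) / (len(image) * len(image[0]))
print(fraction_raw)  # 0.015625, i.e. 64 of 4096 pixels
```

Each 2x downscale keeps a quarter of the pixels, so after three levels the raw base holds only 1/64 of the original pixels. Everything else has to be encoded by the super-resolution stages, which is where a model that predicts the missing detail well saves bits.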

To measure how effective SReC is, the researchers use a metric called bits per sub-pixel (bpsp).

Because raw file sizes in megabytes are hard to compare across images of different dimensions, bpsp instead measures the average number of bits needed to store each sub-pixel, that is, each individual color channel value (for example, the R, G, or B value of a pixel).
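The metric is simple to compute: divide the total number of bits in the compressed file by the number of sub-pixels (width times height times the number of color channels). The minimal Python sketch below assumes an 8-bit RGB image. The function name and the file sizes are hypothetical examples, not figures from the paper.

```python
def bits_per_subpixel(compressed_bytes, width, height, channels=3):
    """Average number of stored bits per sub-pixel (per color channel value)."""
    total_bits = compressed_bytes * 8
    subpixels = width * height * channels
    return total_bits / subpixels


# An uncompressed 100x100 RGB image with 8 bits per channel takes 30,000 bytes.
print(bits_per_subpixel(100 * 100 * 3, 100, 100))  # 8.0

# A hypothetical compressed file of 10,125 bytes for the same image.
print(bits_per_subpixel(10_125, 100, 100))  # 2.7
```

Because the result is normalized by the number of sub-pixels, the same figure can be compared across images of any size, which is what makes it more useful than a raw file size.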

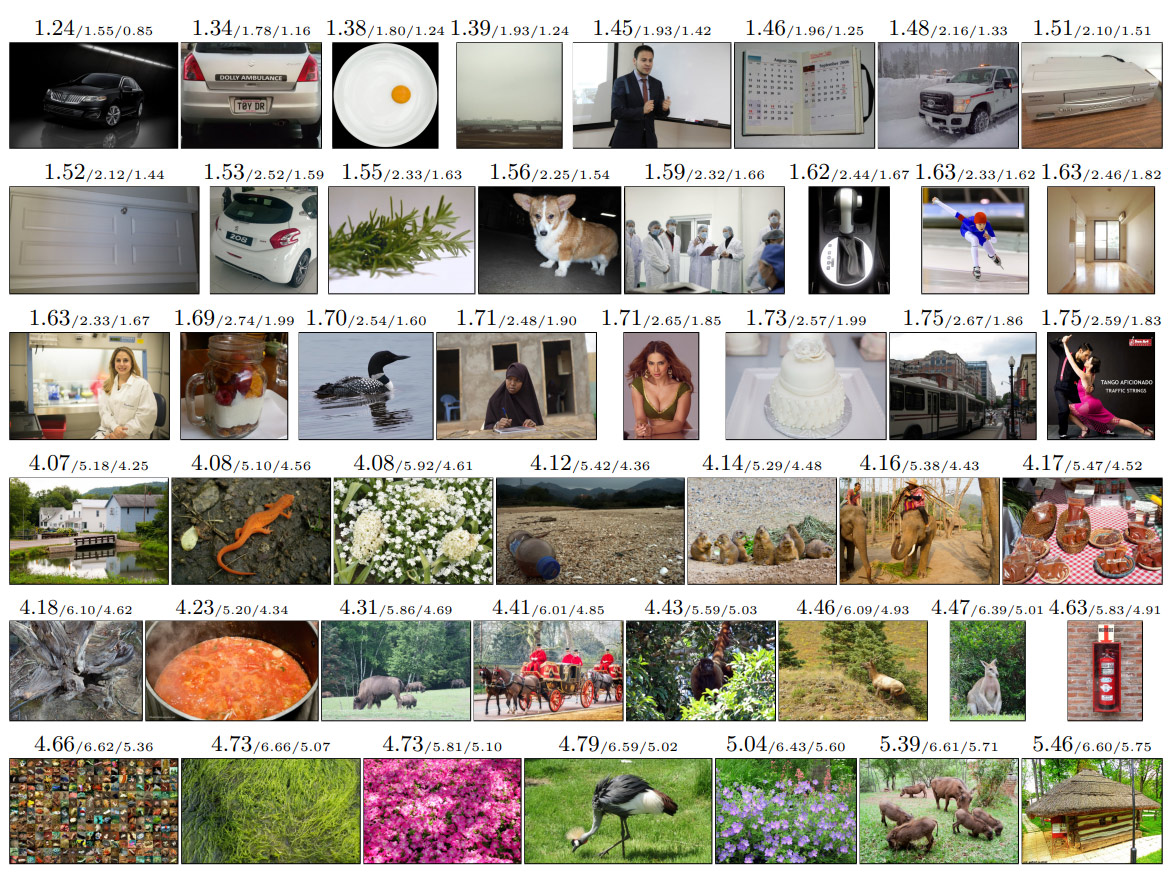

According to the researchers, SReC achieves 2.70 bpsp on the Open Images dataset. For comparison, a raw, uncompressed image in BMP format uses 8 bpsp.
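Put in relative terms, the two figures quoted above imply the following size ratio. This is simple arithmetic on the reported numbers, assuming both refer to images with 8 bits per color channel:

```latex
\frac{2.70\ \text{bpsp}}{8\ \text{bpsp}} = 0.3375
\qquad\Longrightarrow\qquad
\frac{8}{2.70} \approx 2.96
```

In other words, an SReC-compressed image takes up roughly a third of the space of the raw image, or about 2.96 times less.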

While SReC can do things many other formats cannot, Cao said the model is not meant to replace any popular existing standard. It could, however, be used for server-side computation to help reduce website load times, similar to what Google did with RAISR on Google+.

For broader adoption on websites, however, SReC would have to compete with Google's popular WebP format.

In other words, SReC may not be practical in real-world scenarios, because its gains are not large enough to be groundbreaking.

SReC compresses some images better than other formats, but performs worse on certain types of images. However, the researchers noted that SReC achieves larger performance gains on more challenging (less compressible) cases.

"This suggests that our network effectively models complicated image patterns that hand-engineered methods struggle at," the researchers said.

Earlier in 2020, the Joint Photographic Experts Group (JPEG), the committee that maintains various JPEG image standards, announced a call for papers toward a new image codec.

At the time, the committee said it wanted to start using AI and blockchain technology to create future standards.
